“Nothin’ happened the way it was supposed to happen.” –Neville, “I Am Legend,” Warner Bros, 2007

Thinking about AI over the past two years reminded us of the post-9/11 era. Before the horrific disaster, we had a few security experts/personnel; going through the airport was a snap. Six months after 9/11, we had a horde of security experts and personnel. In no time at all, we had security folks everywhere – at the airports, checking people in/out of buildings – and folks getting big bucks to advise other folks how to harden their security systems and efforts.

(Source: Ryan McGuire and Pixabay)

AI has been around since the early 1950s, when Alan Turing proposed the benchmark for machine intelligence (the Turing test). Then someone coined the phrase “artificial intelligence” to describe solving problems using computational resources. It wallowed around for years and then all hell broke loose. Now, AI is a big deal, and it’s only going to get bigger.

Everyone is pimping it… everyone is buying it. It’s so hot that Meta’s Mark Zuckerberg left his two yachts—somewhere—to come back to his headquarters to offer $1 million signing bonuses for as many key experts as he could persuade to join his team. Well, yeah, he was calling folks at OpenAI, Anthropic and other firms to make the offer, but hey, it’s just business!

Now, every product you buy is made using AI and has the technology embedded in it, even if no one can tell you what it really does for you. So, when we try to wrap our heads around a technology or product category, one of the first places we turn to is Gartner Research and, more specifically, its hype cycle, to understand where the technology is and, more importantly, where it’s going. By that measure, AI came on so fast, with so many over-the-moon promises, that it’s already in the trough of disillusionment, where people get a small dose of reality.

No, it’s not going to disappear, but AI adoption and productivity are going to take time… a long time.

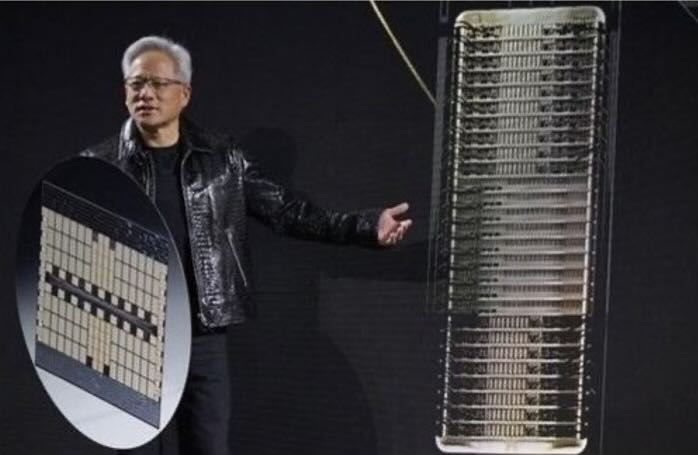

(Source: Nvidia)

Even the industry’s No. 1 cheerleader, Nvidia’s CEO Jensen Huang, has shifted his message slightly.

The first change we noticed at the company’s last GTC (GPU Technology Conference) is that he shed his signature smooth black biker’s jacket for a lizard-embossed black jacket, but I digress. While his firm is still heavily invested in the AI industry, the message has changed to the next big thing… quantum computing. Yes, AI is still the path to the future, but getting there and solving real-world problems will require a whole new class of systems, technologies, and tools that, according to Huang, Nvidia is ready to deliver.

Of course, it doesn’t hurt that his company is the leading chip provider for AI and quantum computing, which is one of the reasons the company recently (and briefly) was valued at $4 trillion, with loftier targets in sight.

Nvidia isn’t alone in advancing AI, because AMD, headed by Lisa Su, is aggressively leveraging and enhancing its CPU and GPU technologies. But longtime chip leader Intel decided to back away from the heavy investments required and focus on lower-performance chips… maybe.

Their boss realized what I Am Legend’s Neville meant when he told Sam, “You can’t go running into the dark.” It’s all a matter of risk/reward/risk. Even Huang is telling folks that full-blown superhuman intelligence isn’t imminent and that there are a lot of obstacles that have to be overcome before it’s here.

(Source: Apke and Pixabay)

One of the first obstacles we see is that it requires lots of computing power to deliver and use AI in all of the ways start-ups and application folks around the globe are working on. All of that AI, cloud and hyperscale work requires computing power – massive amounts of computing power – and that means a massive amount of data-center development… everywhere. The global data-center construction market surpassed an estimated $241 billion last year and is projected to reach nearly $257 billion by 2030.

Companies like Microsoft, Amazon, Google, Meta, Equinix, Digital Realty and NTT are heavily investing in new facilities, but they’re far from alone, as global data-center capacity is projected to increase an average of 15% annually for the next few years. Sure, the Americas lead the build-out activity, but the EU and APAC – particularly China, India and Singapore – are rapidly building new facilities and expanding existing data centers. Most of the new construction is being located in coastal areas because data centers need massive, economical access to water for immersion cooling to handle the heat they generate. The big question is, who gets power first… your home and office or AI?

And speaking of generation, data centers require vast amounts of power to process the high-density workloads. The rapid increase of computing power is estimated to require 114 GW this year, which is a fivefold increase over 2005. Industry experts project that the power requirements will more than double by 2030. To deliver the power, folks in Washington, D.C., said, let’s discard the sustainability rules and not worry about such fake things as global warming and make the most of our natural resources… coal and petroleum. We know there are those who think the move might be counterproductive in light of the fact that almost every corner of the globe has experienced record heat levels and violent storms, including tornados, hurricanes and floods, this year.

In addition, global ocean temperatures have reached record highs, and, as an interesting sidebar, the ocean stores 90% of the Earth’s excess heat. Of course, data centers also require lots of water for cooling… lots of water.

The folks in Newton County, Georgia, were delighted when Meta built some of its data centers in their neighborhood – until they found out the operation totally mucked up their water supply and increased their water rates. The company forgot to tell them AI data centers require millions of gallons of water… every day.

Meta’s response to the “inconvenience,” like the response in most areas where new data centers are built, is: We got ours; you solve your problem.

Fortunately, many of the data-center owners and countries around the globe have been focused on cleaner, less damaging power sources. China, for example, is the world’s leader in wind-power-generation capacity, which exceeds 520 GW this year and is projected to reach more than 1,200 GW by 2030. Most of the EU countries are advancing similar clean energy capacity. Solar- and wave-generated power facilities are also being built around the globe.

Of course, there’s that other major power-generation source that is being pressed back into action… nuclear. The rapid expansion of data centers and AI has driven everyone to reconsider nuclear energy to meet the crushing power demand. Facilities like the one at Three Mile Island (TMI) near Harrisburg, Pennsylvania, are being refurbished and enhanced to quickly and economically get major generation facilities back in operation as new ones break ground. The industry is promoting small modular reactors (SMRs) as a great option because they are scalable, incorporate advanced safety features (and safety features on top of safety features) and are carbon-neutral power sources.

After all, what’s the worst that can happen? Okay, so there were accidents at TMI, Chernobyl, and Fukushima, and there was some release of radioactive material and contamination, but folks learned from their mistakes.

Despite these minor issues, AI and all of its derivations are being churned out as quickly as possible with mind-boggling promises. Companies and individuals are glomming onto as many of them as they can and as quickly as they can, lest they get left behind. People are snapping up and implementing chatbots and virtual assistants in record numbers because, well, who doesn’t want someone/something to talk to that will agree with/support them and assist them? Admit it, intelligent search and personalized recommendation engines/tools are fantastic, and predictive analytics is fantastic because if something goes awry, you’ve got something to blame.

(Source: Matheus Bertelli and Pexels)

The ability to use natural language processing and automated data entry can relieve your team and you from really dull and time-consuming tasks and make you look really smart when projects are done accurately, on time and are so perfect they can’t possibly be challenged. Fraud detection and robust AI security tools can protect your company and you from devastating/embarrassing losses. All you have to remember is that bad folks have the same tools – if not better ones – designed specifically to kick the crap out of your protection and fool your systems and you.

Oh sure, the new tools and systems are being churned out so quickly and with such grandiose promises that even responsible AI CEOs and experts are urging governments around the world to set up strict guidelines and rules surrounding the development, testing, validation and governance of the technology’s usage. The big problem is that this requires intelligent people in government to establish the rules of engagement, and we don’t know about where you live, but here it’s a decided… duuhhh!

To give individuals and companies an edge over others and lower the time/cost of doing things, AI tools/systems are being pressed into action without sufficient understanding or concern about the outcome. Gen Zers have quickly embraced AI tools to bypass the agonizing task of learning the fundamentals to pass and surpass the educational system. The legal community has already uncovered a range of screwups where court briefs were riddled with errors because if the technology doesn’t have the right information, it will simply do what any lawyer would do… make stuff up. But do it better.

Deepfakes, really good deepfakes, are flooding social media. Very real-looking events, activities and individuals are being generated, modified and posted to spread stupid, embarrassing and inflammatory stuff that people blindly take as fact because… they saw it.

A major issue with the way AI systems and tools are being used is that they can only answer questions with known answers/solutions. Abstract questions require more than linear “thinking” and exploration of all of the available data to arrive at a concrete solution that sometimes defies standards and convention. Often it is even an error in the process that delivers the answer. Penicillin was discovered when a petri dish was accidentally left uncovered and the mold that grew turned out to produce the life-saving drug. X-rays were discovered when a scientist saw that a fluorescent screen glowed when placed near a cathode ray tube, the subject of the initial work. A 3M scientist was working to develop a strong adhesive but instead invented a weak, reusable adhesive that could stick notes to almost any surface. Charles Goodyear was trying to make rubber more durable when he dropped a mixture of rubber, sulfur and lead on a hot stove and the result was a stable, elastic material.

Breakthroughs and ah-ha solutions don’t follow a straight line of examining all of the “facts” and arriving at an answer. AI tools and solutions can automate tasks for you, but they don’t have long-term memory, and to give you the answer again, they have to go through the complete process again… every time.

AI doesn’t think – yet – so things like reasoning, deep thought and understanding don’t mean anything to the tools. How do you describe those three abstract ideas algorithmically? AI can’t provide answers based on personal insights, experience, contrary/paradigm-challenging insights or counterintuitive thoughts.

A survey report by Gallagher found that the highest perceived risks in using AI for business were due to errors and AI hallucinations (34%), increased threat of privacy violations and data breaches (33%), and liability or legal accountability in the misuse of AI (31%). Other concerns include greater vulnerability to cyberattacks and fraud, reduced employee engagement/morale, reputational risks in misuse of AI technologies, and more.

Ron White, a founding member of the Blue Collar Comedy Tour, has gone down in history for observing, “You can’t fix stupid.” AI solutions and answers will always come back to human input. If the inputs are clear and concise, then AI can do the work and develop the results/assistance quickly and neatly. If the inputs/guidelines aren’t clear/concise, then the work/solution is bland, messy and probably sorta right, as the technology takes the “safe” route to an answer. AI doesn’t challenge the norm or provide contrary, paradigm-challenging ideas. At the present stage of the technology, it will deliver the safe, sanitary answers based on its interpretation and analysis of the individual’s inputs. AI can’t replace your messy thoughts and experiences; it can only deliver something clean, concise and admittedly sterile.

Don’t expect AI to holler at you as Neville did in I Am Legend and scream, “I can help. I can save you. I can save everybody.”

It will take years – if ever – for AI to figure out what the question or need is and deliver the answer/solution before it’s been asked. You and we got into this mess, and for the foreseeable future, we have to figure out how to solve it so AI can deliver.

You’ve got a messy, organized/disorganized mind. Use it first and constantly, and we may figure out how we got into this mess.
