Lightcraft has a recent history of rethinking virtual production. In 2024, the company developed Jetset, a mobile app for accessing virtual production tools that span all stages of production. It then began working on an AI solution for creating photorealistic 3D sets. The result is Spark, a new platform comprising four tools that lets creators collaborate on everything from preproduction to post within a single, unified toolset.

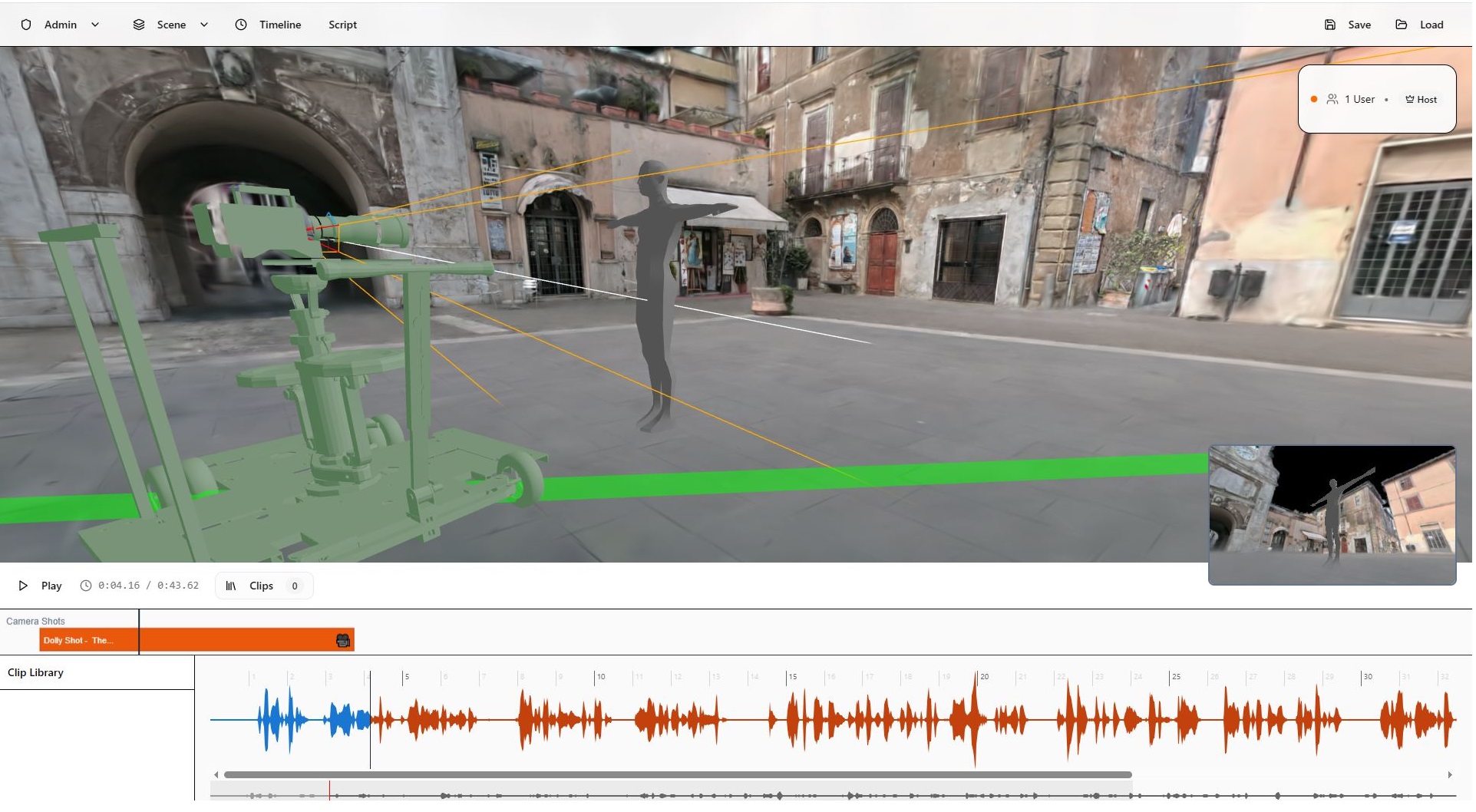

Lightcraft’s Spark is an all-in-one browser-based mobile app toolset for virtual production. (Source: Lightcraft)

Lightcraft Technology has been on a roll with its mission to streamline and simplify the filmmaking process. Two decades ago, engineer-turned-filmmaker Eliot Mack founded Lightcraft with the goal of solving VFX problems that he and many others had been experiencing. That’s when Previzion, a real-time virtual production system, was born. Mack, a big thinker, then began thinking smaller and in 2024 came up with Jetset, a mobile app for accessing, via an iPhone or iPad, virtual production tools spanning every stage of the production process. Since then, the Lightcraft CEO and his team have continued revising Jetset, and this past spring they were working on an AI component to facilitate the creation of photorealistic 3D sets.

This past summer, the company introduced the results of that effort: Spark, a new platform that combines traditional filmmaker tools (such as Maya, Blender, and Unreal Engine 5) with AI, providing a collaborative platform within a single, unified tool. Spark can connect scripts, offer virtual looks at movies, build environments, and more.

“For the first time, one tool combines the human power of filmmakers with the massive technological changes that are occurring now,” said Mack.

Spark builds on some of the same underlying technologies as Jetset and Autoshot (which connects Jetset with traditional DCC tools), including USD scenes, Gaussian splats, real-time databases, Web-based rendering, and automated metadata capture and processing. Spark is designed to handle both the preproduction/front end and postproduction/back end of projects using Jetset, according to the company.

“Spark’s origins came from watching the rapidly scaling nature of the Jetset projects that were happening. It became very clear that the single-operator model basis of the traditional Jetset workflow needed to transition to a fast, fluid, collaborative model that could organize the efforts of an artistic team spread out around the world,” says Mack.

From a spark to the Spark system

Spark is a browser-based, real-time collaborative platform for visual storytelling that uses the most recent Web technologies. Spark’s overall design is deeply integrated with traditional filmmaking tools throughout the scope of a project, the company notes. Furthermore, all of the data in the system, including the script, USD scenes, and Gaussian splats, can be interactively visualized and adjusted by the members of the team who are logged in on their browsers.

Mack says it’s important to unify AI, 3D, and traditional filmmaking, as the technologies behind AI and 3D virtual production are uniquely complementary. Generative AI can create extreme levels of photorealistic detail very quickly but is difficult to control in a traditional production sense.

“3D virtual production provides a very rapid and precise way to block out a 3D environment, but bringing that 3D environment to photorealism using traditional methods can require a lengthy and expensive process,” he says. “Combining these two technologies is a natural fit and can lead to a system that is both creatively directable, while producing high-quality visual results at a low cost. By unifying the visual storytelling process under a single software ‘roof,’ it is also possible to dramatically reduce the amount of tedious file and data management that otherwise dominate filmmaking. Spark’s goal is to automate the non-creative elements of telling visual stories, so the artists can focus on the actual storytelling project, instead of chasing thumb drives and file folders.”

Lightcraft’s Eliot Mack testing the new technology. (Source: Lightcraft)

With an expected release in mid-2026, Spark brings artists together at every stage of production “with a Figma- or Google Docs-like interactive approach,” says Mack. The hub, which he describes as “a creative nervous system for productions,” lets teams harness the power of AI, 3D, and traditional filmmaking live in the browser, so users can start creating shots on Day One.

The Spark hub comprises four tools:

Spark Shot—An interactive scene assembly tool that combines scans, USD 3D models, animation, audio, AI tools, and realistic camera simulations to revolutionize the process of conceiving shots.

The 3D shot creation tool can combine Gaussian splats and production-level layered USD files, so that multiple people and teams can work together live, bringing together all the elements that would be required on a production. For example, a location team can import an xGrids Gaussian splat of a location, and the camera team can load in realistic cameras, dollies, and cranes. The VFX team can add in digital set extensions, while the stunt team adds digital rigging, all with real-world accurate scales and dimensions. Each team gets instant visual feedback, and no team’s changes corrupt another’s work.

Spark Live—An instant communications tool that links artists and actions. This integrated chat and voice communication system provides “context-aware” communication, so that when users are collaboratively working on a specific shot, the notes and audio are automatically associated with that shot context. Spark Live can also keep track of individual users’ 3D viewpoints during a discussion, making it more straightforward to communicate in a visual sense about solving storytelling problems, Mack points out.

Spark Atlas—An artist-centric database for handling the storage and coordination needs of an entire production.

Among Spark’s technical breakthroughs, Mack emphasizes, is its central core, the Spark Atlas database system, which is unique in that it is completely engineered around screenplays. Spark Atlas can import screenplays, break them down into their core elements of scene, character, action, and dialog, and then store those pieces in the database. It can also link every other element of the project to specific components of the script.
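To make the idea of a script breakdown concrete, here is a minimal Python sketch of splitting a plain-text screenplay into scenes, action lines, and dialog. It is not Lightcraft’s implementation: the `Scene` class, the `break_down` function, and the formatting rules (scene headings start with INT. or EXT., an all-caps line names the speaker) are illustrative assumptions, and real screenplay formats carry many more cases.

```python
import re
from dataclasses import dataclass, field

# Assumption: scene headings begin with INT., EXT., or INT./EXT.
SCENE_HEADING = re.compile(r"^(INT\.|EXT\.|INT\./EXT\.)\s+")


@dataclass
class Scene:
    heading: str
    actions: list = field(default_factory=list)
    dialog: list = field(default_factory=list)  # (character, line) pairs


def break_down(screenplay: str) -> list:
    """Split a plain-text screenplay into scenes with action and dialog."""
    scenes = []
    speaker = None
    for raw in screenplay.splitlines():
        line = raw.strip()
        if not line:
            speaker = None  # a blank line ends the current speech
            continue
        if SCENE_HEADING.match(line):
            scenes.append(Scene(heading=line))
            speaker = None
        elif not scenes:
            continue  # skip any text before the first scene heading
        elif speaker is not None:
            scenes[-1].dialog.append((speaker, line))
        elif line.isupper():
            speaker = line  # assumption: an all-caps line is a character cue
        else:
            scenes[-1].actions.append(line)
    return scenes


SAMPLE = """INT. WORKSHOP - NIGHT

Mara solders a circuit board.

MARA
Almost there.

JONAS
You said that an hour ago.

EXT. ROOFTOP - DAWN

The drone lifts off.
"""

for scene in break_down(SAMPLE):
    print(scene.heading, len(scene.actions), len(scene.dialog))
```

Once the script is stored as structured records like these, other assets (a 3D scene, an audio clip, a shot) can point at a specific scene or dialog line rather than at a page number.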

Spark Atlas also can import audio from a table read, parse it, and automatically link it to the script. The user can then easily choose a few lines of dialog and create a simulated camera shot in the 3D Spark Shot environment, with precise simulations of everything from character and camera movement to on-set props—all with the actual actor’s audio dialog. These simulated shots can then be exported and edited together just like real footage, says Mack, so that a creative team can easily pre-screen their entire project to discern whether it works on the screen.
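Lightcraft has not described how the table-read audio is matched to the script. One plausible approach, sketched below in Python, assumes a speech-to-text pass has already produced timestamped transcript segments and then links each dialog line to its closest segment using fuzzy string matching from the standard library. The `link_segments` function, the segment tuples, and the 0.6 threshold are all hypothetical.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so transcripts compare cleanly."""
    kept = (ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return "".join(kept).strip()


def link_segments(dialog_lines, segments, threshold=0.6):
    """Map each dialog line index to the (start, end) of its best match.

    segments: iterable of (start_sec, end_sec, transcript_text) tuples,
    assumed to come from an external speech-to-text step.
    """
    links = {}
    for i, line in enumerate(dialog_lines):
        best_score, best_span = 0.0, None
        for start, end, text in segments:
            score = SequenceMatcher(None, normalize(line), normalize(text)).ratio()
            if score > best_score:
                best_score, best_span = score, (start, end)
        # Only keep matches that are similar enough to trust.
        if best_span is not None and best_score >= threshold:
            links[i] = best_span
    return links


DIALOG = ["Almost there.", "You said that an hour ago."]
SEGMENTS = [
    (0.0, 1.2, "almost there"),
    (1.5, 3.4, "you said that an hour ago"),
]

print(link_segments(DIALOG, SEGMENTS))
```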

The creative decisions made in this early “story-hacking” phase can then translate precisely to actual shoot planning and virtual production with Jetset, Lightcraft’s iOS-based virtual production app, with centimeter-accurate blueprints for shot setups, the company says.

Spark Forge—An automated postproduction system that organizes and automates post elements into a simplified, organized timeline, with a significant speedup due to the extensive on-set production metadata captured by Jetset. Spark Forge imports OpenTimelineIO timelines from all major NLE systems and automates the creation of tracked 3D VFX shots, replacing traditional “ingest” departments and making it possible for a single operator to batch-process hundreds of “slap comps” in a day, says Lightcraft.

Moreover, Spark Forge implements a visual timeline approach to keeping track of visual shot evolutions throughout the postproduction process, making it easy for the entire team to see what the current state of each shot is in context with the rest of the project.

From concept to post

At the concept phase, Spark takes in various kinds of data: screenplays, 3D Gaussian splats, 3D animated USD scenes, and audio of the script dialog. Spark automatically matches the audio to the screenplay dialog and loads the 3D scene. The user can pick out lines of dialog, add moving 3D characters, and create simulated camera shots with realistic camera support that automatically tracks the characters. These simulated shots can then be used to start editing a project for story development very early in the process, explains Mack.

Lightcraft’s Eliot Mack. (Source: Lightcraft)

The same 3D scenes that are developed during this phase can be directly exported to Jetset. Jetset can connect directly to a cine camera and track its precise motion, including optical calibration of cine lenses. Jetset records complete production metadata, including lens calibrations, camera tracks, and matched 3D scans of the physical scene.

Jetset also can link to Spark and upload the production metadata directly to Spark’s online database system. This captures all of the production metadata in one central location, enabling the editorial team to work with original camera footage or live on-set composites. Once the edit is complete, the editorial team can add timeline markers to name all the VFX shots, and export an OpenTimelineIO (OTIO) timeline file that describes all of the individual clip edits and their associated VFX shot names.

Spark Forge then imports these OTIO timeline files and automatically creates named VFX shots based on these timelines, including 3D tracked cameras, ACEScg-formatted EXR image sequences pulled from the camera original footage, and 3D shot files linked to the top-level 3D scene files for each specific sequence.
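OTIO timelines are serialized as JSON, so the step of turning named markers into a shot list can be sketched with nothing but the Python standard library. The example below walks a trimmed-down subset of the OTIO JSON layout and assumes each VFX shot is named by a marker attached to a clip; that convention, the `vfx_shots` function, and the sample data are illustrative assumptions, not Spark Forge’s actual behavior. A production pipeline would more likely read the file through the OpenTimelineIO library itself.

```python
import json

# A minimal, hand-written subset of an OTIO timeline (sample data).
OTIO_TEXT = """
{
  "OTIO_SCHEMA": "Timeline.1",
  "name": "reel_01",
  "tracks": {
    "OTIO_SCHEMA": "Stack.1",
    "children": [
      {
        "OTIO_SCHEMA": "Track.1",
        "kind": "Video",
        "children": [
          {
            "OTIO_SCHEMA": "Clip.2",
            "name": "A001_C003",
            "source_range": {
              "start_time": {"rate": 24.0, "value": 86400.0},
              "duration": {"rate": 24.0, "value": 48.0}
            },
            "markers": [{"OTIO_SCHEMA": "Marker.2", "name": "VFX_010"}]
          },
          {"OTIO_SCHEMA": "Gap.1"},
          {
            "OTIO_SCHEMA": "Clip.2",
            "name": "A002_C001",
            "source_range": {
              "start_time": {"rate": 24.0, "value": 1200.0},
              "duration": {"rate": 24.0, "value": 72.0}
            },
            "markers": []
          }
        ]
      }
    ]
  }
}
"""


def vfx_shots(otio_text: str) -> list:
    """Return one record per marker found on a video clip."""
    timeline = json.loads(otio_text)
    shots = []
    for track in timeline["tracks"]["children"]:
        if track.get("kind") != "Video":
            continue
        for item in track["children"]:
            # Skip gaps, transitions, and anything else that is not a clip.
            if not item.get("OTIO_SCHEMA", "").startswith("Clip."):
                continue
            source = item["source_range"]
            for marker in item.get("markers", []):
                shots.append({
                    "shot": marker["name"],
                    "clip": item["name"],
                    "first_frame": int(source["start_time"]["value"]),
                    "frame_count": int(source["duration"]["value"]),
                    "rate": source["start_time"]["rate"],
                })
    return shots


for shot in vfx_shots(OTIO_TEXT):
    print(shot)
```

Each record carries enough information (source clip, first frame, frame count, frame rate) to pull the matching frames from the camera original footage.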

These VFX shots are created with industry-standard directory and file-naming conventions (which can be edited) to easily link to existing postproduction pipeline tools where needed. According to Mack, Spark Forge sets up the tools to automatically render correctly named EXR frames to specific directories, and then automatically uploads and converts the EXRs into their own visual timeline. That lets teams easily review the visual progress of the whole project within the browser and make notes for updates.
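An editable naming convention of this kind usually comes down to a path template that is filled in per shot and per frame. The Python sketch below shows the general pattern; the template string, the folder names, and the `frame_paths` function are hypothetical and are not Lightcraft’s actual convention.

```python
from pathlib import PurePosixPath

# Hypothetical template; a studio would edit this to match its own pipeline.
TEMPLATE = (
    "{project}/sequences/{sequence}/{shot}/renders/"
    "v{version:03d}/{shot}_v{version:03d}.{frame:04d}.exr"
)


def frame_paths(project, sequence, shot, version, first_frame, frame_count,
                template=TEMPLATE):
    """Build the EXR path for every frame of one version of a shot."""
    return [
        PurePosixPath(template.format(
            project=project, sequence=sequence, shot=shot,
            version=version, frame=frame,
        ))
        for frame in range(first_frame, first_frame + frame_count)
    ]


paths = frame_paths("demo", "SEQ01", "VFX_010", version=2,
                    first_frame=1001, frame_count=3)
for path in paths:
    print(path)
```

Because the renderer and the review tool derive their paths from the same template, frames written by one can be found by the other without manual file management.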

“For some 3D DCC tools such as Blender, Spark will also be able to store the various iterations of the 3D scene and shot files, along with the shot’s EXR sequence files, providing a one-stop production tracking and asset management approach to postproduction,” says Mack.

Mack says Spark is well-suited for new types of projects that haven’t been possible before and for small teams of what he calls “full-stack creators,” people with overlapping skill sets that enable them to understand the shape of the overall production, as opposed to traditional creative silos.

“The characteristics of these projects is that they can take off very quickly from an idea into a fast-moving and rapidly scaling production,” he says.

Spark will ship in multiple versions offering various levels of capabilities and pricing; each version will include all four tools. Since it is browser-based, Spark will work on both Mac and Windows platforms. It leverages the user’s GPU for its graphics processing, so it will benefit from powerful desktop machines, although Lightcraft says it will run fine on a MacBook Air.
