AMD’s Instinct MI430X targets large AI and HPC workloads with a next-gen CDNA GPU, 432 GB HBM4, and 19.6 TB/s bandwidth, addressing memory-bound training and simulations. AMD cites deployments spanning Discovery at Oak Ridge (with Epyc Venice on HPE Cray GX5000) and Europe’s Alice Recoque (on BullSequana XH3500). Frontier and El Capitan illustrate prior Instinct use with MI250X and MI300A. MI430X extends that path with FP4/FP8 through FP64 and ROCm integration, emphasizing very large models where capacity and bandwidth reduce parallelism overhead.

AMD has presented its Instinct MI430X GPU as a data center accelerator for large-scale AI and HPC workloads. It is based on the company’s next-generation CDNA architecture and integrates 432 GB of HBM4 with 19.6 TB/s of memory bandwidth to support both low-precision AI and double-precision scientific computing. The design aims to address memory- and bandwidth-bound workloads, including large language model training and complex simulations.
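To make "bandwidth-bound" concrete, here is a rough back-of-the-envelope sketch using only the two figures quoted above (432 GB and 19.6 TB/s). It estimates the minimum time for one full pass over the GPU's memory, which sets a floor for any kernel that must touch all resident data. The function name is illustrative, and the calculation assumes peak bandwidth is fully sustained, which real workloads rarely achieve.

```python
def min_sweep_time_ms(capacity_gb: float, bandwidth_tb_s: float) -> float:
    """Lower bound, in milliseconds, on reading the full memory once.

    Assumes decimal units (1 GB = 1e9 bytes, 1 TB = 1e12 bytes) and that
    peak bandwidth is fully sustained, which is an idealization.
    """
    capacity_bytes = capacity_gb * 1e9
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return capacity_bytes / bandwidth_bytes_per_s * 1e3


# Figures quoted in the article for MI430X.
mi430x_ms = min_sweep_time_ms(432, 19.6)
print(f"One full pass over 432 GB at 19.6 TB/s: at least {mi430x_ms:.1f} ms")
```

Under these idealized assumptions, a full pass takes about 22 ms, so a memory-bound step that touches every resident byte cannot complete faster than that regardless of available compute.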

AMD Instinct GPUs can be found in the Frontier supercomputer at Oak Ridge National Laboratory, which is listed on the Top500 as an exascale-class system delivering 1.3 EFLOPS for large-scale simulation, AI, and data-intensive workloads. El Capitan at Lawrence Livermore National Laboratory is reported at 1.8 EFLOPS and is deployed for national security and other large-scale simulation use cases. In the commercial domain, Eni’s HPC6 system is described as a large enterprise supercomputer with 478 PFLOPS targeted at energy-focused high-performance computing.

AMD says the MI430X GPUs are planned components of several large AI and HPC deployments. Discovery at Oak Ridge National Laboratory is characterized as an AI factory architecture that combines AMD Instinct MI430X GPUs with next-generation AMD Epyc Venice CPUs on an HPE Cray GX5000 platform to support training, fine-tuning, and deployment of large models for domains such as energy, materials, and generative AI. In Europe, the Alice Recoque system integrates MI430X GPUs and Epyc Venice CPUs on Eviden’s BullSequana XH3500 platform for mixed AI and FP64 HPC workloads under strict energy constraints.

These systems follow earlier AMD Instinct generations. MI250X GPUs in Frontier use a dual-GPU package connected to CPUs over coherent Infinity Fabric for tightly coupled CPU–GPU memory. MI300A in El Capitan combines CPU and GPU in a single APU package to unify memory and programming. MI430X continues this line by providing FP64 capability, large HBM4 capacity, and AI-oriented formats such as FP4 and FP8, together with ROCm software integration for common frameworks across large GPU clusters.

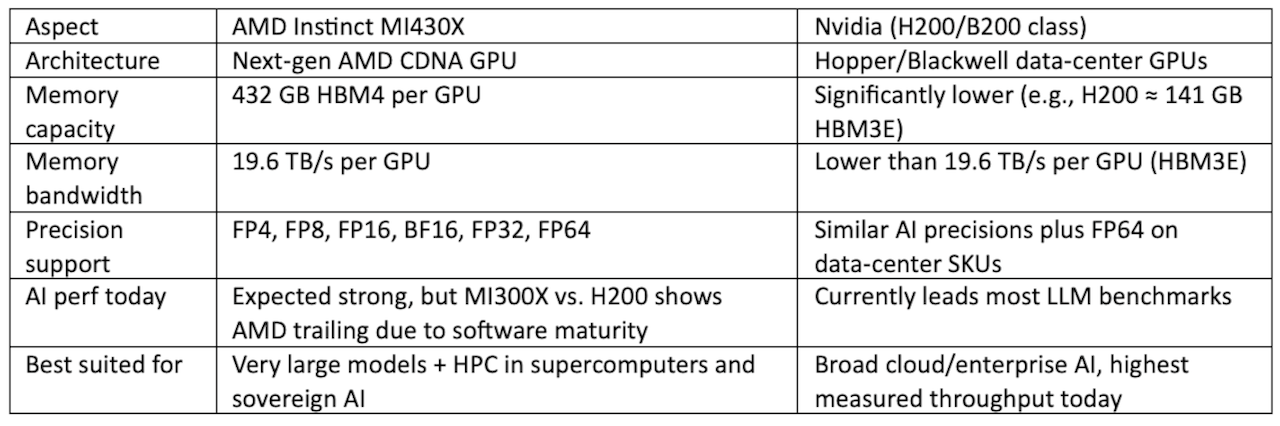

The following is a high-level comparison table:

Table 1. Comparison of AMD MI430X and Nvidia H200.

For LLM training specifically, Nvidia’s H200 still has an edge today in end-to-end throughput and software maturity, while an MI430X-class GPU should narrow the gap and can win on very large models that are memory-bound rather than purely compute-bound. The clearest data so far comes from MI300X vs. H200 comparisons. MI430X builds on that lineage with more memory and bandwidth, but there are not yet public, apples-to-apples MLPerf training numbers for it against H200.

On the hardware side, MI430X should allow much larger models or longer-context variants to fit on a single GPU or on fewer GPUs, reducing or eliminating tensor/model parallelism and its communication overhead. This tends to help very large LLM training and fine-tuning, where H200 must shard aggressively. H200, with less memory, compensates through very high FP8/FP16 compute and a mature scaling stack across many GPUs.
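The capacity argument can be illustrated with a small estimate of how many GPUs are needed just to hold a model's training state. This is a minimal sketch under stated assumptions, not a sizing tool. It assumes about 16 bytes per parameter, a common rule of thumb for mixed-precision Adam training (FP16 weights and gradients plus FP32 master weights and two optimizer moments). It ignores activations entirely and assumes 90% of memory is usable. The 432 GB figure comes from the article, while the 141 GB figure for H200 is Nvidia's published capacity and does not appear in the text above. The function and constant names are hypothetical.

```python
import math

# Assumption: mixed-precision Adam needs roughly 16 bytes per parameter
# (2 for FP16 weights, 2 for FP16 gradients, 12 for FP32 master weights
# and the two Adam moments). Activations are deliberately ignored.
BYTES_PER_PARAM = 16


def min_gpus_for_state(params_billion: float, gpu_mem_gb: float,
                       usable_fraction: float = 0.9) -> int:
    """Smallest GPU count whose combined usable memory holds the training state."""
    # 1e9 parameters times bytes per parameter, divided by 1e9 bytes per GB.
    state_gb = params_billion * BYTES_PER_PARAM
    usable_gb = gpu_mem_gb * usable_fraction  # assumed headroom for buffers
    return math.ceil(state_gb / usable_gb)


# 432 GB is from the article; 141 GB is Nvidia's published H200 capacity.
for name, mem_gb in (("MI430X", 432), ("H200", 141)):
    print(f"{name}: {min_gpus_for_state(70, mem_gb)} GPU(s) for a 70B-parameter model")
```

With these assumptions, a 70B-parameter model's training state fits on 3 MI430X-class GPUs versus 9 H200s. Fewer shards generally means less inter-GPU communication, though real deployments also depend on activation memory, batch size, and interconnect.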

