Holography pioneer and auto head-up display (HUD) developer Envisics showed off their advanced solution at CES 2019. I missed that demo, but the company invited me to their Detroit office to discuss the technology. What I saw was clearly the best auto HUD I have seen to date.

Envisics is headed by Dr. Jamieson Christmas, an innovator in the development and supply of holographic technologies for the automotive industry. Starting at Cambridge University, Dr. Christmas has spent many years researching and developing phase-only holographic technology for image generation in automotive HUDs. In 2010, he co-founded Two Trees Photonics to continue that work. By 2014, the technology had been developed, qualified and placed in an automobile. Think about that: four years from prototype to production in a car. That’s incredibly fast. The customer was Jaguar Land Rover (JLR), and this first-generation HUD is now in seven different JLR models.

Following his successful exit from Two Trees Photonics, Dr. Christmas now leads Envisics as its CEO. According to Dr. Christmas, Envisics is funded by a private family investment arm. “They have been very supportive of our efforts,” said Dr. Christmas. “I am in touch with them every week, but they let us manage development, providing support as needed. It is a great relationship, as they are focused on maximizing the success of Envisics over the long term.” The company now employs 43 people, with offices in Milton Keynes in the UK and in Detroit.

So what is their technology? As I explained in a recent article, “CY Vision Developing Next-Gen HUD Technology,” almost all HUDs today are image-based. An automotive HUD creates an image on a direct-view display or microdisplay and then “projects” it as a virtual image at a fixed focal plane, typically near the front bumper of the car.

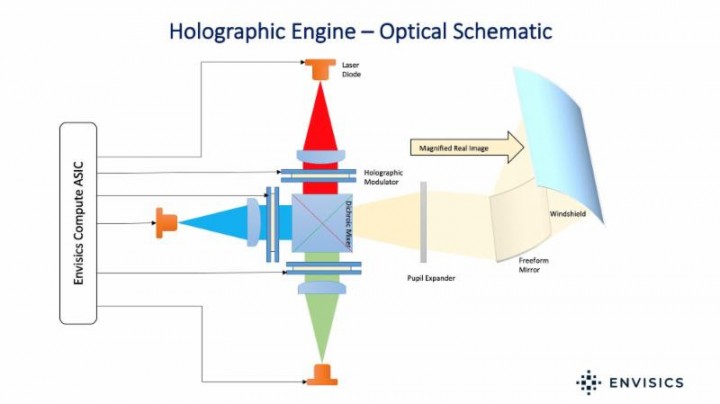

By contrast, the Envisics approach uses a custom-developed spatial light modulator (SLM), a modified microdisplay designed to manipulate the phase of light. A hologram of the image you want to display is computed, and the hologram pattern is written to the SLM, which is illuminated by a laser or LED.

The SLM approach has the advantage that all of the light can be used to display content, instead of much of it being blocked as in the image-based approach. The downside can be the heavy computational load needed to calculate the phase hologram.

In our discussion, Dr. Christmas explained that you don’t want to compute the hologram by simply taking the Fourier transform of the image. That creates an amplitude hologram, which is optically inefficient and has a large zero-order component that shows up as a bright spot in the middle of the display.
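A short 1-D sketch makes the zero-order point concrete. It assumes a Fourier hologram (the replay image is the Fourier transform of the hologram), a hypothetical test image consisting of one bright bar, and a random diffuser phase that spreads the image over the whole hologram. The amplitude hologram uses one common encoding, a biased real transmittance between 0 and 1; that encoding is an assumption, not necessarily the scheme Dr. Christmas had in mind. The bias term is what ends up in the zero order.

```python
import cmath
import math
import random

def dft(x, inverse=False):
    """Unitary DFT by direct summation (O(n^2)); fine for a short 1-D demo."""
    n = len(x)
    sign = 1 if inverse else -1
    w = [cmath.exp(sign * 2j * math.pi * j / n) for j in range(n)]
    s = 1 / math.sqrt(n)
    return [s * sum(x[k] * w[(k * m) % n] for k in range(n)) for m in range(n)]

def zero_order_fraction(hologram):
    """Share of replay-field energy in the zero-order (DC) term.
    For a Fourier hologram the replay field is the DFT of the hologram."""
    replay = dft(hologram)
    energy = [abs(v) ** 2 for v in replay]
    return energy[0] / sum(energy)

N = 256
rng = random.Random(0)
# Hypothetical 1-D test image: one bright bar on a dark background.
target = [1.0 if 64 <= i < 128 else 0.0 for i in range(N)]
# A random "diffuser" phase spreads the image energy across the hologram.
field = dft([t * cmath.exp(2j * math.pi * rng.random()) for t in target],
            inverse=True)

# Amplitude hologram: a real, non-negative transmittance needs a DC bias.
peak = max(abs(v.real) for v in field)
amplitude_holo = [0.5 + 0.5 * v.real / peak for v in field]

# Phase-only hologram: keep each pixel's phase, force its amplitude to 1.
phase_holo = [cmath.exp(1j * cmath.phase(v)) for v in field]

print(f"amplitude hologram, zero-order share: {zero_order_fraction(amplitude_holo):.3f}")
print(f"phase hologram, zero-order share:     {zero_order_fraction(phase_holo):.4f}")
```

Under these assumptions the amplitude hologram sends most of its replay energy into the zero order, while the phase-only version of the same field leaves only a small residue there.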

“A phase hologram eliminates this unwanted zero order but comes at the expense of additional computation. Traditional compute methods use trial and error to iteratively refine the hologram until you get a good facsimile of the image you want,” explained Dr. Christmas. “But that can’t be done in real time. Part of my Ph.D. was about a new way to do this, and it is now the basis for our hologram solution. This allows us to dynamically calculate full-color, high-resolution holograms at more than 180 frames per second.”
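The iterative approach Dr. Christmas contrasts his method with is typically some form of phase retrieval. Below is a minimal 1-D sketch of the classic Gerchberg-Saxton algorithm, which alternates between the SLM plane (where only phase can be set) and the replay plane (where the target amplitude is imposed). This is the textbook method, not Envisics’ real-time technique, which is not described here; the test image, iteration count and pure-Python DFT are illustrative choices. Because each step is a projection onto a constraint, the error should never increase, but every frame needs many Fourier transforms, which is why this approach struggles at video rates.

```python
import cmath
import math
import random

def dft(x, inverse=False):
    """Unitary DFT by direct summation (O(n^2)); fine for a short 1-D demo."""
    n = len(x)
    sign = 1 if inverse else -1
    w = [cmath.exp(sign * 2j * math.pi * j / n) for j in range(n)]
    s = 1 / math.sqrt(n)
    return [s * sum(x[k] * w[(k * m) % n] for k in range(n)) for m in range(n)]

def gerchberg_saxton(target, iterations=30, seed=0):
    """Classic Gerchberg-Saxton loop for a 1-D phase-only Fourier hologram.
    Returns the hologram phases and the relative amplitude error per iteration."""
    n = len(target)
    # Scale the target to the energy of a unit-amplitude hologram so the two
    # constraints are compatible under the unitary DFT.
    norm = math.sqrt(sum(v * v for v in target))
    t = [v * math.sqrt(n) / norm for v in target]
    rng = random.Random(seed)
    # Start from the target amplitude with a random phase.
    replay = [v * cmath.exp(2j * math.pi * rng.random()) for v in t]
    errors = []
    for _ in range(iterations):
        # SLM plane: keep only the phase (every pixel has amplitude 1).
        phases = [cmath.phase(v) for v in dft(replay, inverse=True)]
        # Replay plane: propagate the phase-only hologram forward.
        recon = dft([cmath.exp(1j * p) for p in phases])
        err = math.sqrt(sum((abs(r) - v) ** 2 for r, v in zip(recon, t)))
        errors.append(err / math.sqrt(n))
        # Keep the reconstructed phase but impose the target amplitude.
        replay = [v * cmath.exp(1j * cmath.phase(r)) for r, v in zip(recon, t)]
    return phases, errors

# Hypothetical 1-D test image: a bar covering a quarter of the field.
target = [1.0 if 16 <= i < 32 else 0.0 for i in range(64)]
phases, errors = gerchberg_saxton(target)
print(f"relative error after 1 iteration:   {errors[0]:.3f}")
print(f"relative error after {len(errors)} iterations: {errors[-1]:.3f}")
```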

Dr. Christmas also explained that they have custom-developed the silicon backplane of the LCOS (liquid crystal on silicon) SLM for optimized phase modulation. This requires a liquid crystal material with very high birefringence to enable accurate phase modulation at full-motion video rates. “These improvements are really critical to optimizing system performance and efficiency,” noted Dr. Christmas.
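A quick calculation suggests why birefringence matters for speed. This is a minimal sketch, assuming a reflective LCOS panel (light crosses the liquid crystal twice) that needs a full 2π phase stroke: the round-trip retardation 2 × 2πΔn·d/λ must reach 2π, so the cell gap must satisfy d ≥ λ/(2Δn). Because a nematic layer’s relaxation time scales roughly with the square of its thickness (τ ≈ γ₁d²/(Kπ²)), doubling Δn allows a cell half as thick and roughly four times faster. The wavelength and Δn values below are hypothetical, not Envisics’ design.

```python
def min_cell_gap_um(wavelength_um, delta_n, passes=2):
    """Thinnest liquid-crystal layer that still gives a full 2*pi phase stroke.

    Each pass through a layer of thickness d retards the phase by
    2*pi*delta_n*d/wavelength; a reflective LCOS panel is crossed twice.
    """
    return wavelength_um / (passes * delta_n)

# Hypothetical values: a 0.64 um red source, moderate vs. high birefringence.
gaps = {dn: min_cell_gap_um(0.64, dn) for dn in (0.2, 0.4)}
for dn, d in gaps.items():
    print(f"delta_n = {dn}: cell gap >= {d:.2f} um")

# Nematic switching time scales roughly with the square of the cell gap.
speedup = (gaps[0.2] / gaps[0.4]) ** 2
print(f"switching-time advantage of the thinner cell: about {speedup:.0f}x")
```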

Spatial light modulators have another significant advantage: manufacturability. The hologram remains visible even if many pixels on the phase modulator are missing; it just becomes a little less efficient, explained Dr. Christmas. Alignment is also very simple. At the system level, a phase-modulated HUD will be about half the size of a conventional DLP- or LCD-based HUD.
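The tolerance to missing pixels follows from the Fourier relationship: every SLM pixel contributes a little light to every point of the image, so losing some pixels dims the whole image slightly instead of punching holes in it. Here is a 1-D sketch, assuming a phase-only Fourier hologram built with a random diffuser phase and a simple defect model in which about 10% of pixels reflect no light (real defective pixels may behave differently, for example stuck at a fixed phase).

```python
import cmath
import math
import random

def dft(x, inverse=False):
    """Unitary DFT by direct summation (O(n^2)); fine for a short 1-D demo."""
    n = len(x)
    sign = 1 if inverse else -1
    w = [cmath.exp(sign * 2j * math.pi * j / n) for j in range(n)]
    s = 1 / math.sqrt(n)
    return [s * sum(x[k] * w[(k * m) % n] for k in range(n)) for m in range(n)]

def signal_efficiency(hologram, window):
    """Fraction of the incident light (unit amplitude on every pixel) that
    reaches the image window of the replay field."""
    replay = dft(hologram)
    return sum(abs(replay[i]) ** 2 for i in window) / len(hologram)

N = 256
rng = random.Random(1)
window = range(64, 128)  # hypothetical image region in the replay plane
# Phase-only hologram of a bright bar, built with a random diffuser phase.
spectrum = [cmath.exp(2j * math.pi * rng.random()) if i in window else 0
            for i in range(N)]
holo = [cmath.exp(1j * cmath.phase(v)) for v in dft(spectrum, inverse=True)]

# Assumed defect model: about 10% of pixels are dead and reflect no light.
dead = set(rng.sample(range(N), N // 10))
damaged = [0 if i in dead else v for i, v in enumerate(holo)]

intact_eff = signal_efficiency(holo, window)
damaged_eff = signal_efficiency(damaged, window)
print(f"intact panel:     {intact_eff:.2f} of the light lands in the image")
print(f"~10% dead pixels: {damaged_eff:.2f} of the light lands in the image")
```

The image survives intact in shape; only the fraction of light reaching it drops, which matches the “a little less efficient” description.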

With real-time dynamic hologram generation, a whole range of optical functions can be implemented. Symbology such as speed and directional arrows is, of course, a necessary component, but one can also implement optical elements such as lenses. What does that mean? It means that the focal plane of the virtual image can be moved in real time. It also means that optical structures can be written to the phase modulator to help eliminate speckle; no speckle was evident in the demo. Most importantly, performance trade-offs among color, contrast, frame rate, bit depth and more can now be made in software rather than hardware, allowing new features or performance envelopes to be demonstrated quite quickly.
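A software lens is just another phase pattern. This minimal sketch assumes the standard paraxial thin-lens phase, -pi*r^2/(lambda*f), added to the image hologram modulo 2π; changing f in software would then shift the replay image along the optical axis. The panel size (1024 pixels across), 8 µm pixel pitch and 520 nm source are hypothetical, not Envisics’ specifications. The pixel grid limits how strong the lens can be: at the panel edge the phase step between neighbouring pixels, about pi*n*p^2/(lambda*f), must stay below π, which gives f ≥ n*p^2/lambda.

```python
import math

def lens_phase(n, pitch, wavelength, focal_length):
    """Wrapped paraxial thin-lens phase, -pi*r^2/(wavelength*f), on an n x n SLM.
    All lengths in metres; returns an n x n list of phases in radians."""
    c = (n - 1) / 2  # optical axis at the centre of the panel
    return [[(-math.pi * ((x - c) ** 2 + (y - c) ** 2) * pitch ** 2
              / (wavelength * focal_length)) % (2 * math.pi)
             for x in range(n)] for y in range(n)]

def min_focal_length(n, pitch, wavelength):
    """Shortest focal length whose phase step between neighbouring pixels at
    the panel edge stays within pi (shorter lenses alias on the pixel grid)."""
    return n * pitch ** 2 / wavelength

def add_lens(hologram, lens):
    """Superimpose a lens on an existing phase hologram (both in radians)."""
    return [[(h + l) % (2 * math.pi) for h, l in zip(hr, lr)]
            for hr, lr in zip(hologram, lens)]

# Hypothetical panel: 1024 pixels across, 8 um pitch, 520 nm green source.
print(f"shortest alias-free focal length: "
      f"{min_focal_length(1024, 8e-6, 520e-9):.3f} m")
```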

The Gen 2 HUD that Envisics is showing the automotive industry implements two image planes, at 2.5 m and 10 m. For resolution, it is more meaningful to talk about pixels per degree. Envisics offers 350 pixels per degree for the near image plane and 170 pixels per degree for the far image plane. To benchmark this, 20/20 vision resolves about one arcminute, or roughly 60 pixels per degree, so we are talking about very high-fidelity images. Brightness can vary from 1 nit to 25,000 nits. Dr. Christmas did not specify the field of view but said the images can cover three highway lanes.
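A little arithmetic puts the pixels-per-degree figures in perspective. The sketch below compares each plane with the 60 pixels-per-degree acuity benchmark and converts one pixel into an angle and a physical size on the 2.5 m and 10 m planes, using only the numbers quoted above.

```python
import math

ACUITY_PPD = 60  # 20/20 vision resolves about 1 arcminute, i.e. 60 per degree

def pixel_footprint_mm(ppd, distance_m):
    """Size of one pixel, in mm, on a virtual image plane at distance_m."""
    pixel_angle = math.radians(1 / ppd)
    return 1000 * distance_m * math.tan(pixel_angle)

for name, ppd, dist in [("near plane", 350, 2.5), ("far plane", 170, 10.0)]:
    print(f"{name}: {ppd / ACUITY_PPD:.1f}x the acuity limit, "
          f"{60 / ppd:.2f} arcmin per pixel, "
          f"{pixel_footprint_mm(ppd, dist):.2f} mm per pixel at {dist} m")
```

Both planes sample well beyond what the eye can resolve: roughly 5.8x the acuity limit for the near plane and 2.8x for the far plane.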

In the Envisics demo, a projector shows a driving video on a screen placed 10 m from the HUD. In the demo, you can very clearly see the instrument cluster indicators displayed in the near image plane and other AR images in the far image plane.

As the car drives along, we see directional arrows pop up along with broader illuminated patches that highlight the route. These patches move in the HUD field of view (FOV) as the car turns. There are lane-keeping lines, warning signals for a cyclist and other AR cues.

These cues appear to be mapped to the physical world, yet the far-field images do not look as if they are placed at one specific distance from the HUD. The image generation takes advantage of long-distance 3D depth cues, such as perspective geometry, to fool the brain into thinking it is looking at a continuous depth space. That’s clever.
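The perspective cue can be sketched with similar triangles. On a flat road, a point farther ahead sits closer to the horizon, so arrows and lane lines drawn on a single 10 m plane can still appear to recede into the distance. This sketch assumes a flat road and a hypothetical eye height of 1.2 m; it illustrates the geometry only and is not Envisics’ rendering pipeline.

```python
import math

def road_point_on_plane(distance_m, eye_height_m=1.2, plane_m=10.0):
    """Where to draw a flat-road point on a virtual image plane.

    Returns (depression angle below the horizon in degrees, vertical offset in
    metres below eye level on the image plane) for a point distance_m ahead.
    """
    angle = math.degrees(math.atan2(eye_height_m, distance_m))
    offset = eye_height_m * plane_m / distance_m  # similar triangles
    return angle, offset

for d in (10, 20, 40, 80):
    angle, offset = road_point_on_plane(d)
    print(f"road point {d:>2} m ahead: {angle:.2f} deg below horizon, "
          f"drawn {offset:.2f} m below eye level on the 10 m plane")
```

Each doubling of road distance halves the drawn offset, so symbols compress toward the horizon exactly as real road markings do.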

One thing I found distracting in the demo was some ghosting in the images. For example, one circular icon was noticeably ghosted when it appeared in the lower part of the field of view, but it quickly converged as it moved higher up in the FOV. The ghost image was present only because the windshield was not optimized for the demonstrator’s large field of view, something that could easily be addressed in a production version.

I also thought the red colors looked a bit dim. Dr. Christmas said that others have found them too bright, suggesting that human factors may be at work here. For example, every person’s photopic response is different, so the choice of laser wavelength will affect people differently. Plus, sensitivity to red decreases as you get older, so perhaps more human factors engineering should be considered in future work.
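To see why the laser wavelength matters so much for red, consider how steeply the eye’s photopic sensitivity falls off in the red. The sketch below uses a commonly quoted Gaussian approximation to the CIE photopic luminosity function V(λ), which describes an average standard observer rather than any individual, and compares two hypothetical red laser wavelengths at equal optical power.

```python
import math

def photopic_v(wavelength_nm):
    """Approximate CIE photopic luminosity function V(lambda) using the
    Gaussian fit V ~ 1.019 * exp(-285.4 * (lambda_um - 0.559)**2)."""
    lam_um = wavelength_nm / 1000
    return 1.019 * math.exp(-285.4 * (lam_um - 0.559) ** 2)

# Two hypothetical red laser wavelengths at equal optical power.
for nm in (638, 650):
    print(f"{nm} nm: V = {photopic_v(nm):.3f}")
ratio = photopic_v(638) / photopic_v(650)
print(f"638 nm looks about {ratio:.1f}x brighter than 650 nm")
```

A shift of only about 12 nm changes perceived brightness by nearly a factor of two on this curve, so modest individual differences in red sensitivity could plausibly turn “too bright” into “a bit dim.”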

Overall, this was a very impressive demo. Dr. Christmas hinted that Envisics is working with a number of automotive manufacturers and suppliers to develop and deploy this technology in new vehicles, and that even more advanced versions are in development. It usually takes a long time to go from such demos to implementation in a real car, but given the team’s track record, this technology may arrive sooner than you think.