MediaTek was an early adopter of Nvidia’s DGX SuperPod for AI development, building an on‑premises AI factory that processes 60 billion inference tokens monthly and executes over 24,000 training iterations each month. The system enabled training of a 480‑billion‑parameter model in one week and integrated AI agents into research workflows, reducing documentation time from weeks to days. MediaTek leverages the platform to showcase Dimensity SoC AI capabilities, open‑source select models, and support a developer ecosystem, while Nvidia Mission Control and NeMo streamline operations and model fine‑tuning.

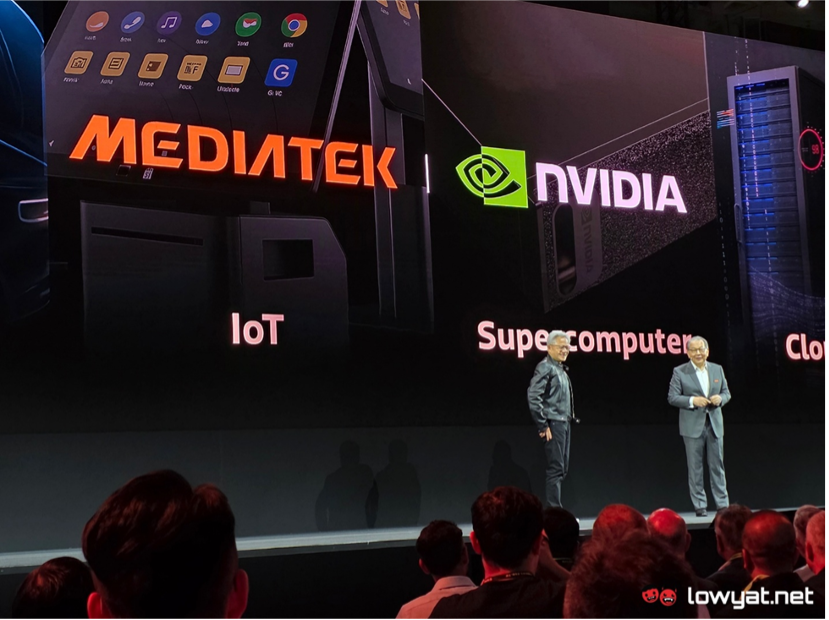

At Computex 2025 in Taipei, MediaTek outlined its artificial intelligence strategy with Nvidia. (Source: Lowyat.net)

MediaTek was among the first to adopt Nvidia DGX SuperPod for AI development. Using the system, it built an AI factory that processes 60 billion tokens monthly for inference, conducts 24,000 training iterations each month, and trains models with more than 480 billion parameters within a week. The platform also enabled MediaTek to incorporate AI agents into research workflows, reducing documentation cycles from weeks to days.
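
To put the monthly figures in perspective, they can be converted into average rates. The sketch below is illustrative arithmetic only: it assumes a 30-day month and a perfectly even load, neither of which comes from MediaTek, and real traffic would peak well above the average. The function name `average_rate_per_second` is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def average_rate_per_second(monthly_total: float, days_in_month: int = 30) -> float:
    """Average per-second rate for a monthly total.

    Assumes an evenly spread load over a 30-day month (an assumption,
    not a figure reported by MediaTek).
    """
    return monthly_total / (days_in_month * SECONDS_PER_DAY)

# Figures reported in the article.
MONTHLY_INFERENCE_TOKENS = 60e9
MONTHLY_TRAINING_ITERATIONS = 24_000

tokens_per_second = average_rate_per_second(MONTHLY_INFERENCE_TOKENS)
iterations_per_day = MONTHLY_TRAINING_ITERATIONS / 30

print(f"Average inference rate: {tokens_per_second:,.0f} tokens/s")
print(f"Average training iterations per day: {iterations_per_day:,.0f}")
```

Under those assumptions, the averages work out to roughly 23,000 tokens per second and 800 training iterations per day.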

Over several years, MediaTek invested in large language model research, creating the Breeze series and a 480-billion-parameter Traditional Chinese model. Some of these models have been released as open source to demonstrate the AI capabilities of MediaTek Dimensity chips, which include specialized AI processors. By showcasing these capabilities, MediaTek says it has grown its developer ecosystem and generated demand for its SoCs. The company ships 2 billion connected devices annually across mobile, home entertainment, connectivity, and IoT markets, and it continues to incorporate AI into its core operations to boost productivity and competitiveness.


As MediaTek intensified its focus on AI, it encountered challenges in expanding its compute infrastructure. Thousands of training cycles and billions of inference tokens each month required a scalable, efficient environment. The company needed a solution that could handle current workloads and support future growth and complex models. It also needed the ability to test new models locally without risking proprietary data. Because MediaTek had experience with Nvidia’s products, it chose the DGX SuperPod with Blackwell systems. The company reports that the system provided a scalable foundation that accelerated the development and deployment of AI applications, enabling larger language models and bigger data sets within the same time frames as previous systems.

Incorporating the DGX SuperPod reference design into its data-center layout helped MediaTek manage rack density, power consumption, and thermal demands, and created an environment suited to processing data and enhancing AI capabilities. David Ku, CFO at MediaTek, said the AI factory processes 60 billion tokens per month for inference and completes thousands of training iterations each month. He added that inference with large language models requires loading the entire model into GPU memory; models with hundreds of billions of parameters exceed the capacity of a single GPU server and must be partitioned across multiple GPUs. The DGX SuperPod, with coupled nodes and Nvidia networking, delivers coordinated GPU memory and compute for efficient training and inference.
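
Ku's point about partitioning can be illustrated with a back-of-the-envelope estimate. This is a minimal sketch, not MediaTek's sizing method: it counts model weights only, assumes 2 bytes per parameter (16-bit weights), and uses hypothetical per-GPU memory sizes of 80 GB and 180 GB chosen purely for illustration. Activations, KV cache, and framework overhead would raise the real requirement.

```python
import math

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights (an assumption, not a
    detail reported in the article).
    """
    return num_params * bytes_per_param / 1e9

def min_gpus_for_weights(num_params: float, gpu_memory_gb: float,
                         bytes_per_param: int = 2) -> int:
    """Lower bound on the GPUs needed just to hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)

PARAMS = 480e9  # the 480-billion-parameter model cited in the article

print(f"Weights alone: {weight_memory_gb(PARAMS):,.0f} GB")
for gpu_gb in (80, 180):  # hypothetical per-GPU memory sizes
    print(f"{gpu_gb} GB per GPU -> at least {min_gpus_for_weights(PARAMS, gpu_gb)} GPUs")
```

Even under these optimistic assumptions, the weights come to about 960 GB, far more than any single GPU in the example can hold, which is why the model has to be split across many GPUs connected by a fast interconnect.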

MediaTek uses large models for core research and to serve a centralized, high-demand API, then creates smaller versions for edge and mobile applications. Incorporating AI agents into the product development process has made workflows more efficient: AI-assisted code completion cuts programming time and reduces errors, and an agent built with domain-specific language models extracts information from design flowcharts and state diagrams to generate technical documentation in days instead of weeks. Nvidia NeMo can be used to fine-tune those models. Ku said that with DGX SuperPod, MediaTek went from training 7-billion-parameter models in a week to training models exceeding 480 billion parameters in the same time frame, a more than 68-fold increase in model size.

MediaTek is the poster child of AI factories, and no one was laid off putting these systems together.
