Nvidia advanced its cloud and sovereign-AI strategy with two moves. CoreWeave signed a $6.3 billion capacity agreement running through 2032, under which Nvidia will purchase any unused CoreWeave capacity, securing cloud supply. CoreWeave supplies compute to OpenAI and Microsoft and has signed an $11.9 billion contract with OpenAI plus an additional commitment of up to $4 billion. In the UK, Nvidia and partners plan to invest up to £11 billion in AI factories built around Blackwell Ultra GPUs, targeting up to 120,000 GPUs by 2026. Together, these actions expand available throughput and align supply with customer demand.

Nvidia outlined two agreements that advance its cloud strategy and its participation in national AI programs. First, CoreWeave announced a $6.3 billion capacity agreement that extends through 2032. Nvidia agreed to purchase any unused CoreWeave capacity over the term, which gives CoreWeave predictable revenue and gives Nvidia priority access to GPU cloud resources in the US and Europe. CoreWeave supplies compute to OpenAI and Microsoft. It has signed a five-year, $11.9 billion contract with OpenAI, followed by an additional commitment of up to $4 billion through 2029.

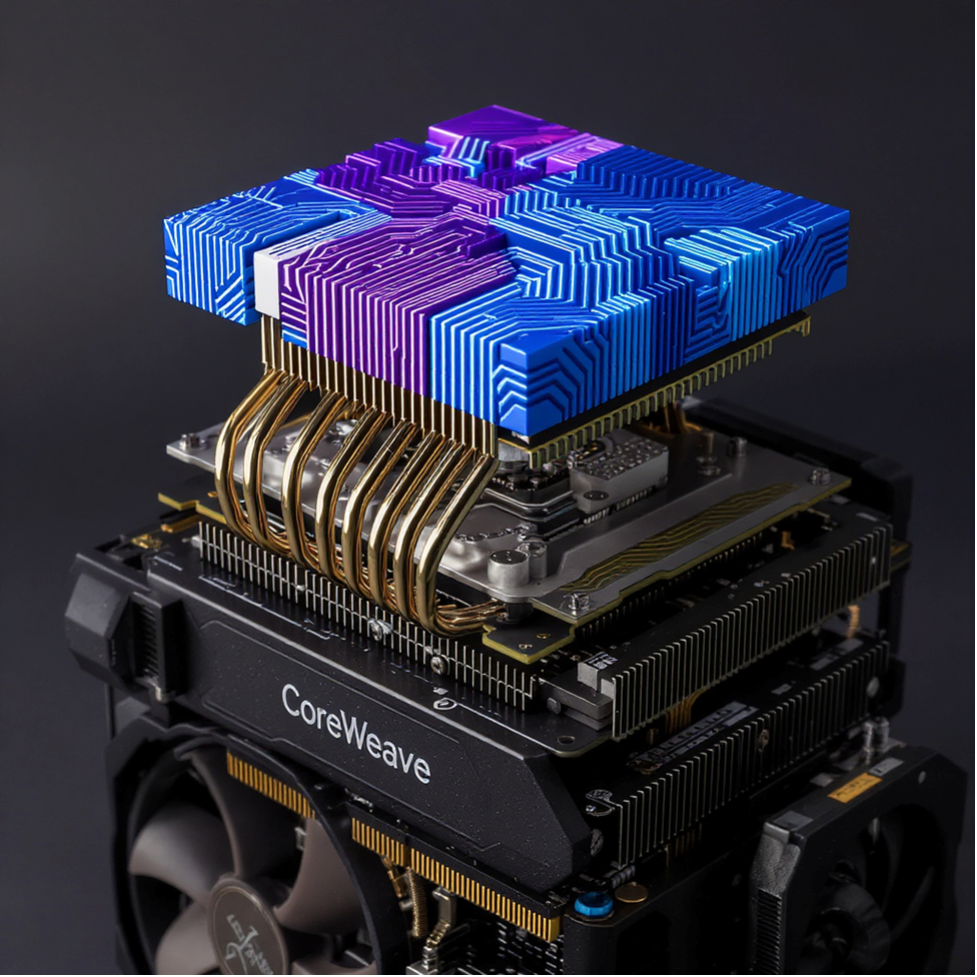

Second, Nvidia launched a UK initiative that targets large-scale AI infrastructure. Nvidia and partners plan to invest up to £11 billion (about US$14.8 billion) in AI factories built around Blackwell Ultra GPUs. The plan targets up to 120,000 GPUs operating in Britain by 2026. The effort follows a collaboration announced in June by Prime Minister Keir Starmer and Nvidia CEO Jensen Huang, and positions Britain to host compute for national language models, health research, and industrial applications. Nvidia will work with Nscale, OpenAI, Microsoft, and CoreWeave to build and operate the sites, including Stargate UK, a system intended to serve the latest OpenAI models.

The CoreWeave contract and the UK rollout address different layers of the same objective. Nvidia secures cloud capacity with a long-term purchase agreement while it builds sovereign-grade infrastructure with government and industry partners. Together, these moves increase available throughput for training and inference, and align supply with forecast demand from model providers and enterprises.

The UK program includes research collaborations that tie infrastructure to domain goals. Teams will train a bilingual large language model for English and Welsh, develop Nightingale AI for multimodal health data, run a high-resolution pollution model, and build foundation models for medical imaging and materials science. Robotics companies, including Extend Robotics and Oxa, will validate Nvidia’s platforms in manufacturing and autonomous driving. Life-sciences firms, including Isomorphic Labs and Oxford Nanopore, will use Nvidia tools for drug discovery and genomics pipelines.

Nvidia coordinates these efforts with a platform approach. In cloud deployments, it aligns GPU allocation, networking, and storage with partner service-level objectives. In sovereign programs, it standardizes data center designs, integrates power and cooling plans with GPU roadmaps, and publishes software baselines for compilers, runtimes, and orchestration. Partners then certify configurations for specific workloads, such as retrieval-augmented generation, fine-tuning, or batch-of-one inference.

Other regions plan similar sovereign AI initiatives. The UK project offers an implementation model that couples public investment, commercial operators, and shared technical standards. The CoreWeave agreement complements that approach by anchoring near-term capacity for cloud tenants. Together, these actions expand access to compute, align infrastructure with research and commercial workloads, and create predictable planning signals for governments, hyperscalers, and application developers.
