Intel has been rethinking its role in AI. Instead of chasing Nvidia and AMD in the crowded training market, the company has shifted toward inference, edge deployment, and custom silicon, where efficiency and integration matter more than raw horsepower. Programs like CAISA and products with on-die NPUs show that focus, while rack-scale systems highlight a move toward complete solutions. Rumors of acquiring SambaNova fit this direction, since SambaNova specializes in inference systems rather than training chips. Together, these moves suggest Intel is choosing a narrower, more practical AI path.

Intel’s recent AI strategy reflects a narrowing of scope and a shift away from direct competition in high-end training accelerators. In the training market, Nvidia and AMD dominate through a combination of hardware scale, software ecosystems, and customer lock-in, leaving little room for late entrants to close the gap through a single architecture or product cycle. Intel’s leadership has acknowledged this reality and redirected resources toward inference-focused deployments and highly customized silicon, areas where architectural flexibility, power efficiency, and manufacturing integration matter more than peak training throughput.

Within this framework, edge AI has become a central focus. Inference workloads at the edge emphasize latency, energy efficiency, cost control, and system integration rather than raw compute density. Intel’s experience in CPUs, SoCs, and platform integration positions it to address these requirements. Client and embedded platforms such as Meteor Lake, Lunar Lake, and the upcoming Panther Lake integrate on-die NPUs to move inference workloads off general-purpose cores. In industrial and embedded contexts, Intel has introduced tightly integrated edge designs that combine compute, memory, and I/O in compact packages, trading generality for deployment efficiency and predictable behavior.

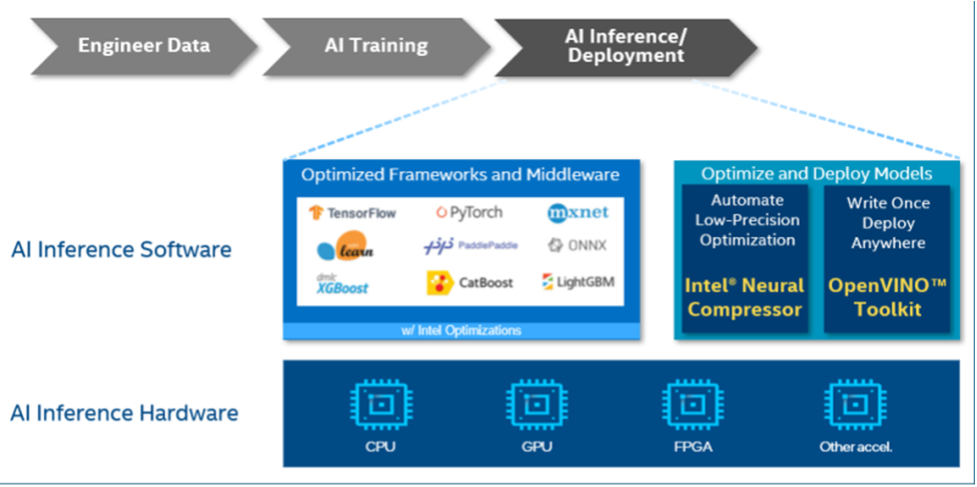

Figure 1. Inference as part of the end-to-end AI workflow. (Source: Intel)

At the silicon level, Intel’s CAISA AI inference program illustrates this direction. CAISA targets efficient inference through custom architectures that integrate compute and dataflow control rather than relying on general-purpose GPUs. SambaNova’s Reconfigurable Dataflow Units take a comparable dataflow approach and integrate into systems such as SambaRack, a rack-scale platform that combines hardware, networking, and software to support inference workloads in both data-center and edge environments. Intel has complemented this strategy with dedicated inference products, such as the Xeon D-1688 Inferno, which targets enterprise, industrial, and edge inference use cases that prioritize deterministic performance and power efficiency.

Public technical documentation indicates that current CAISA-family inference chips rely on a 28 nm manufacturing process. Corerain’s CAISA 3.0 materials describe a 28 nm implementation with a peak throughput of 10.9 TOPS and confirm mass production status. Intel ecosystem references from 2025 continue to list CAISA-based platforms paired with Intel 28 nm eASIC technology in active reference designs, indicating ongoing commercial deployment. Available records place CAISA tape-out before mid-2020, with sustained production and use from 2020 through 2025 in edge stations, industrial systems, and safety-oriented applications. More recent disclosures referencing CAISA 430 variants adapted for modern distilled models still describe the same process technology, with no public evidence of a transition to advanced nodes or a change in foundry.

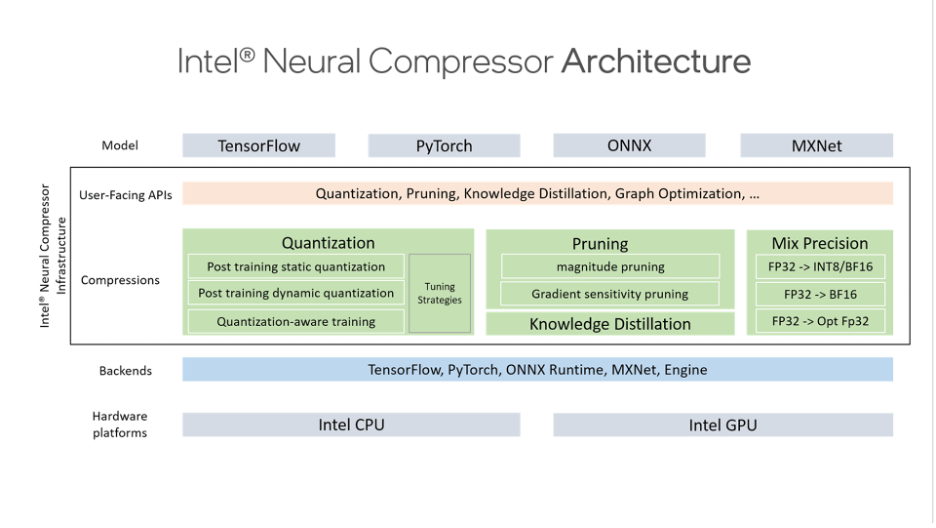

Figure 2. Intel Neural Compressor infrastructure. (Source: Intel)

Parallel to its inference focus, Intel has made custom ASIC development a structural pillar of its AI strategy. The company has established a dedicated ASIC organization reporting into central engineering, building on its existing footprint in networking and infrastructure ASICs. This approach aligns with a foundry-centric model in which Intel combines internal manufacturing, advanced packaging, and early customer co-design to deliver application-specific solutions. Within this context, products such as Gaudi retain relevance as deployment experience and as part of broader data-center engagement, but Intel’s long-term emphasis centers on inference and customized silicon rather than scaling a general-purpose training accelerator.

Or . . .

Reports indicate that Intel has held discussions about acquiring SambaNova Systems for approximately $1.6 billion, well below the $5 billion at which the AI company was previously valued. Our AI Processor report shows that SambaNova has raised about $1.1 billion in venture capital, so the rumored price is roughly 1.5 times invested capital but only about one-third of the prior peak valuation. SambaNova develops custom AI hardware focused on inference workloads, built around its Reconfigurable Dataflow Unit architecture. These processors form the basis of SambaRack, a rack-scale system that integrates compute hardware, networking, and software into a deployable platform, complemented by a cloud-based AI offering that runs on the same infrastructure.

An acquisition of SambaNova would follow Intel’s earlier attempts to establish a position in AI accelerators through acquisition. In 2019, Intel purchased Habana Labs for roughly $2 billion, gaining the Gaudi training processor line. Under Intel, Gaudi2 and Gaudi3 reached the market and delivered competitive performance and efficiency, but they failed to achieve broad adoption. Nvidia maintained dominance in AI training due to its mature CUDA software ecosystem and long-standing platform lock-in. Gaudi’s unfamiliar architecture and limited software support constrained customer uptake, and Intel’s parallel development of data-center GPUs further diluted focus. The eventual cancellation of Falcon Shores, which incorporated elements of Gaudi, marked Intel’s withdrawal from direct competition in large-scale training accelerators.

Figure 3. The SN40L RDU. (Source: SambaNova)

SambaNova operates in a different segment of the AI market. Its products target inference rather than training and emphasize system-level delivery instead of stand-alone chips. The inference market places greater weight on efficiency, latency, and integration, reducing the advantage held by GPU-centric platforms. SambaNova’s rack-scale approach allows its systems to integrate into existing data-center environments as complete inference appliances, which has supported commercial traction. In late 2024, the company announced deployments for sovereign AI inference clouds in multiple regions and secured a role in OVHcloud’s AI Endpoints service alongside GPU-based systems.

Strategically, SambaNova aligns with Intel’s recent shift toward inference, customization, and rack-scale solutions. Intel has signaled that future AI efforts will emphasize integrated systems that can be installed directly into data centers, rather than competing head-on in the training accelerator market. SambaNova already has close ties to Intel through Intel Capital’s investment and leadership connections, including Intel CEO Lip-Bu Tan serving as chairman of SambaNova.

Figure 4. SambaNova RDU modules in a rack. (Source: SambaNova)

While Intel’s history with AI acquisitions has produced mixed results, SambaNova’s focus on inference systems, its existing customer deployments, and its alignment with Intel’s current strategy distinguish it from prior efforts. An acquisition would provide Intel with an established rack-scale inference platform and accelerate its repositioning within AI infrastructure, though the timing and implications for Intel’s internal programs remain uncertain.

At the beginning of 2025, SambaNova published nine predictions about the future of AI, ranging from power constraints and the rise of inference to the shift toward open models and sovereign infrastructure. A few days ago, the company published a review of those predictions.

If you’d like to learn more about SambaNova and Intel’s AI capabilities, SWOT, management, and funding, check out our AI Processors report.
