Adobe has turned up the visual volume on its Firefly generative AI technology with the introduction of the Firefly Image 3 Model, which brings more features, more options, more relevant results from text prompts, and higher resolution to the GenAI image creation process.

What do we think? One thing about machine learning is that it is constantly learning. In one year’s time, Adobe has evolved its generative AI machine learning model, Firefly, through increasingly intensive training: first the Firefly Image 1 Model, then the Image 2 Model, and now the Image 3 Model. The results are visually astounding, enabling creatives of all levels to produce striking images using Adobe apps. Not only has Adobe revolutionized the field of content creation, but the company has also been thoughtful about its steps. Alongside the technology advancements, Adobe has considered the content used to train its models (granted, that’s not such a big hurdle when you have one of the largest stock image libraries at your disposal). Still, the company is voluntarily compensating artists whose images were used in training, and it is leading the charge to ensure that GenAI images are labeled as such.

Adobe creates a buzz with Firefly Image 3 Model

One. It wasn’t all that long ago (March 2023, in fact) that we were gobsmacked by Adobe’s demonstration of its newly introduced Firefly generative AI technology. With it, Adobe said, we could create images and text effects simply by typing a detailed text description. And, voilà, we could produce an image of a phoenix, for example. Wow.

This was the beginning of Adobe’s official journey into generative AI and, for many creatives, the beginning of theirs too, as the company introduced Firefly GenAI features in beta versions of Illustrator, Express, and Photoshop before releasing them into general use shortly thereafter. Users flocked to it like moths to a flame to generate a plethora of images; according to Adobe, beta users had generated over 100 million assets up to that point. Adobe was on a roll, also releasing beta versions of its GenAI-supported video tools within Premiere Pro and After Effects.

Two. At Adobe MAX 2023 in October, the company showed the results of building on its Firefly GenAI technology by announcing new Firefly models, including the Firefly Image 2 Model. Its next-generation imaging model at the time, Image 2 featured an all-new architecture that produced more precise detail, 4× higher image resolution, and more vivid colors. That legendary bird took on an entirely new look; the difference was night and day.

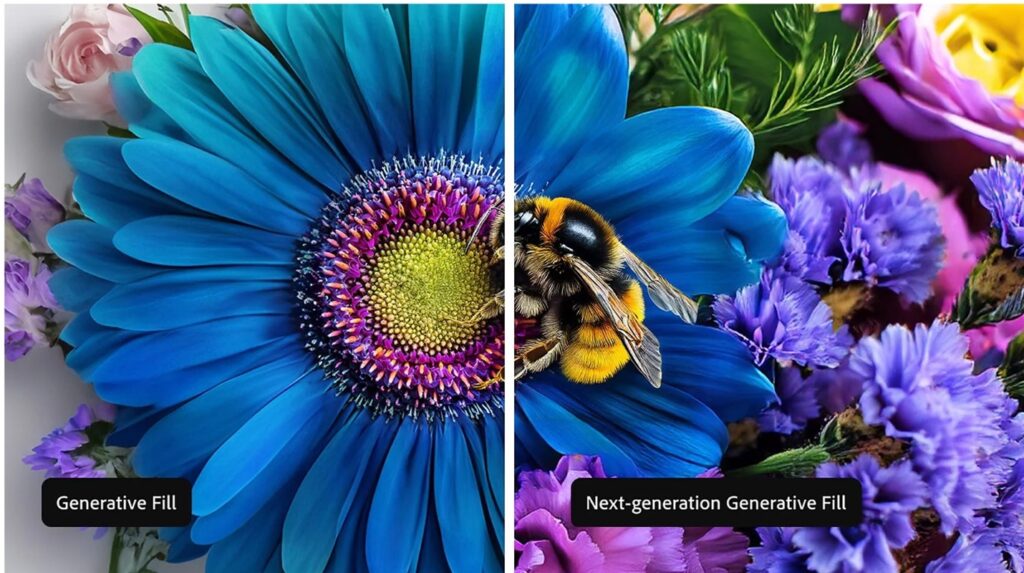

Three. Why stop there? Adobe evidently has no intention of doing so, and users have reason to celebrate: the Firefly Image 3 Foundation Model is now available in beta versions of the Photoshop app, the Firefly web app, and Adobe InDesign. Adobe’s next generation of generative AI in the Photoshop app includes a new Text to Image capability, along with improved Generative Fill powered by Firefly Image 3. Together, they enable users to achieve higher-quality images with better composition, photorealistic details, and improved mood and lighting, thanks to more refined prompt interpretation and the ability to enter more precise text descriptions to guide image creation.

That phoenix? Truly amazing. The devil is in the details, and the image is certainly very detailed, from the feathers, to the eyes, to the scales, to its overall structure.

There are also new GenAI features and extended controls for creating higher-quality results than the Firefly Image 2 Model could achieve.

Using this latest generation of Firefly, users can still select a reference image from the gallery, or they can now upload their own reference images to guide the style and structure of newly generated images. This produces results that are more relevant and customizable than text prompts alone allow, which is especially useful when an image concept is hard to describe. Creatives also have a wider range of options than they had with the Firefly Image 2 Model: they can easily change the aspect ratio, style, color, camera angle, and lighting. With more precise controls to guide the image outcomes, users can iterate faster and more efficiently, Adobe points out.

With Firefly Image 3, users can bypass manual editing by easily removing and replacing the background in a photo or image, with the new scenery automatically matching the lighting, shadows, and perspective of the subject. Images can also be expanded beyond their original borders, as the canvas automatically fills with new content that blends seamlessly with the existing image. And creatives can use the Generative Fill workspace to add or remove specific elements of an image, such as eyewear, facial hair, and more.

With the Enhance Detail tool, users can further increase the sharpness and detail of images produced with Generative Fill.

Adobe has consistently pointed out that its Firefly GenAI image models have been trained on licensed content, including Adobe Stock, and on public domain content, so the images can be used commercially. Content Credentials identifying the output as AI-generated are automatically applied to content created with Firefly. The company also began issuing Stock Contributor Bonuses to compensate artists whose content is used to train Firefly.

Adobe has stated that it is at the beginning of this creative transformation journey and will continue to develop the Firefly model.