Arm’s vision of a “converged AI data center” takes a step forward with AWS’s launch of Graviton5, the latest generation of its Arm-based server CPU. The announcement underscores how hyperscalers are using purpose-built, power-efficient Arm infrastructure to meet the demands of large-scale AI workloads.

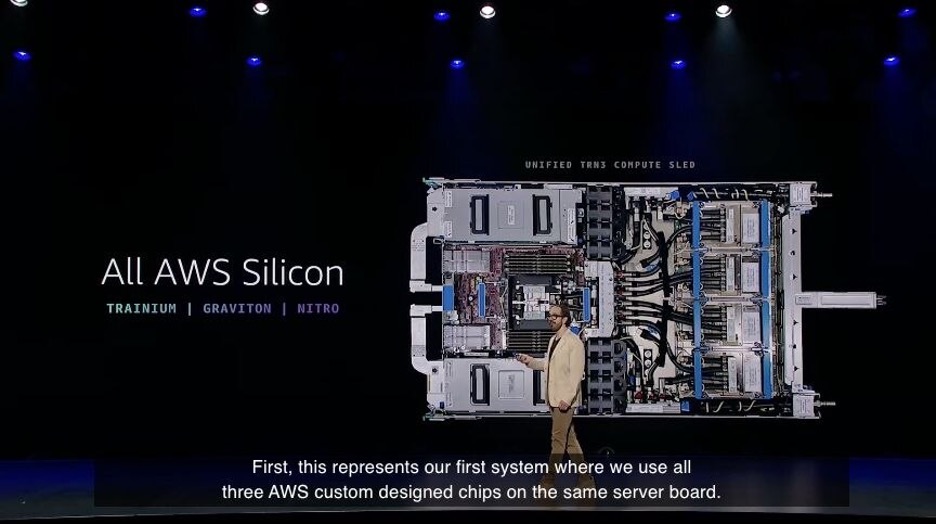

AWS SVP Peter DeSantis at re:Invent 2025 shows that Graviton is part of an all-AWS silicon strategy in which all three AWS custom-designed chips (Trainium, Graviton, and Nitro) are on the same new AWS Trainium3 compute sled. (Source: Arm)

Earlier this month at AWS re:Invent 2025, AWS unveiled Graviton5, its “most powerful and efficient” CPU to date. The processor offers up to 192 cores per socket, a cache up to five times larger than that of prior generations, and a significantly improved memory subsystem and bandwidth.

According to Arm’s own blog, the arrival of Graviton5 marks a key inflection point: memory, storage, and software are no longer independent silos but are co-designed and optimized end to end to support cloud-native and AI workloads at hyperscale.

Arm claims that, as of 2025, nearly half of the compute capacity shipped to major hyperscalers is Arm-based, in line with a forecast it made earlier this year. The implication is that the underlying architecture of high-performance cloud infrastructure is shifting from legacy x86 to purpose-built Arm.

Graviton5 is aimed at AI training and inference, large-scale data analytics, high-throughput databases, and distributed systems. By offering a high core count, an expanded cache, and improved memory bandwidth, Graviton5 should enable more predictable performance per dollar, an advantage when running cost-sensitive cloud workloads at massive scale.

What do we think?

Graviton5 arrives at a moment when hyperscalers are demanding both scale and energy efficiency.

As AI workloads grow more complex and diverse, spanning training, inference, data analytics, web services, and real-time systems, the old approach of assembling disparate components (general-purpose CPU, discrete accelerator, storage, network) is becoming inefficient.

With up to 192 cores and a more capable memory subsystem, Graviton5 allows AWS (and, by extension, Arm’s other cloud customers) to reduce reliance on x86 CPUs while still supporting dense, compute-heavy workloads. The move likely amplifies Arm’s value proposition: lower power consumption, better price/performance, and a unified architecture across cloud, edge, and now AI-focused data centers.

Longer term, this could accelerate consolidation of the AI infrastructure stack: less vendor lock-in, more homogeneity, and likely a faster path to deploying heterogeneous systems that combine Arm CPUs with accelerators (GPU, NPU, custom AI chips). For enterprises embracing AI at scale, that homogeneity translates to lower operational complexity and total cost of ownership.

For Intel’s x86, the pressure is mounting: Arm is proving that its architecture is no longer merely good enough for generic workloads but can carry the heaviest AI and cloud workloads too.
