At Computex 2025, Intel detailed its Gaudi 3 PCIe AIBs and rack-scale systems designed for AI inferencing across diverse environments. The PCIe AIBs support models from Llama 3.1 8B to Llama 4 variants, offering flexibility for businesses of various sizes. Rack-scale systems accommodate up to 64 accelerators with 8.2TB of high-bandwidth memory and liquid cooling for efficiency. Intel emphasized open, modular designs and OCP compatibility. Also announced were the AI Assistant Builder public beta and previews of Panther Lake and Arrow Lake processors, reflecting Intel’s continued collaboration with Taiwan’s tech ecosystem.

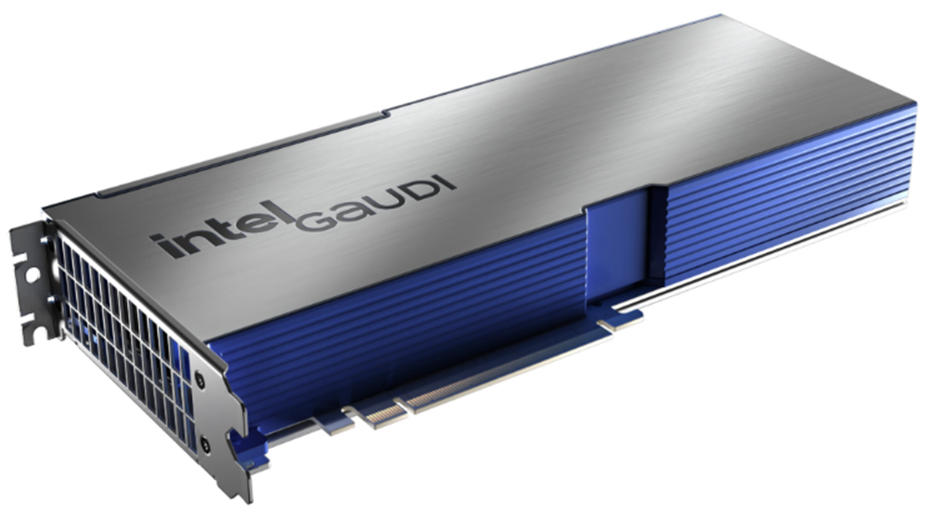

Intel’s new Gaudi AI processor. (Source: Intel)

Intel Gaudi 3 PCIe add-in boards (AIBs) are intended to support AI inferencing workloads in existing data center environments. Intel says the AIBs are compatible with models such as Llama and give organizations, from small businesses to large enterprises, the means to run AI workloads that vary in size and complexity. The supported range runs from Llama 3.1 8B up to larger models such as Llama 4 Scout and Llama 4 Maverick. The scalability of the PCIe AIB configurations allows for broader deployment options depending on infrastructure needs and computational objectives.

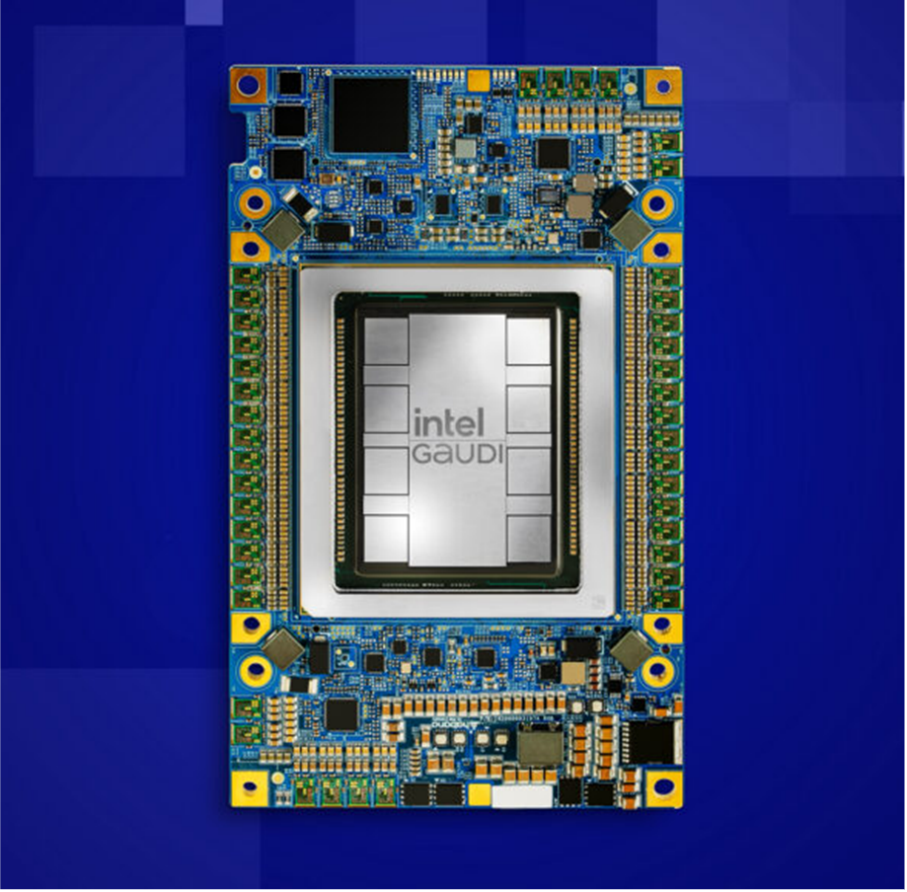

Figure 1. Intel’s Gaudi chip and subassembly. (Source: Intel)

Availability of Intel Gaudi 3 PCIe AIBs is anticipated in the second half of 2025.

For more extensive infrastructure demands, Intel has introduced rack-scale reference designs for the Gaudi 3 platform, configured to accommodate as many as 64 accelerators in a single rack.

These systems are equipped with 8.2TB of high-bandwidth memory, supporting intensive AI processing tasks. Their open and modular structure is designed to offer adaptability across deployment environments, while helping mitigate the constraints often associated with vendor-specific solutions. Integration of liquid cooling into these rack-scale systems contributes to thermal regulation and supports cost-conscious operation over time.
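As a quick sanity check on the 8.2TB figure, the short sketch below multiplies the 64 accelerators per rack by the 128GB of HBM per accelerator that is quoted for the Dell server elsewhere in this article. It assumes every accelerator in the rack carries that same 128GB and that the total is expressed in decimal terabytes; the function and constant names are illustrative only.

```python
# Figures quoted in the article.
ACCELERATORS_PER_RACK = 64
HBM_PER_ACCELERATOR_GB = 128  # assumption: same per-accelerator figure as the Dell server spec


def rack_hbm_tb(accelerators: int, hbm_gb: int) -> float:
    """Return total HBM in decimal terabytes (1 TB = 1000 GB)."""
    return accelerators * hbm_gb / 1000


total_tb = rack_hbm_tb(ACCELERATORS_PER_RACK, HBM_PER_ACCELERATOR_GB)
print(f"{total_tb:.3f} TB")  # 8.192 TB, which rounds to the quoted 8.2TB
```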

The Gaudi rack-scale architecture is oriented toward executing large-scale AI models and is structured to facilitate low-latency performance in real-time inferencing contexts. These system-level configurations are consistent with Intel’s broader direction toward offering adaptable and transparent AI infrastructure components. This includes compatibility with custom deployments as well as designs aligned with the Open Compute Project (OCP), offering options for cloud service providers seeking alignment with open standards.

Intel’s approach at Computex 2025 reflects its ongoing engagement with Taiwan’s technology ecosystem, which reaches back four decades. As part of its current platform expansion, Intel is introducing hardware and software technologies intended to address AI development workflows and deployment complexity across different sectors.

Debuted earlier this year at CES, the Intel AI Assistant Builder is now accessible as a public beta on GitHub. This framework supports the creation and local operation of custom AI agents optimized for Intel-based AI PCs. At Computex, Intel showcased examples from partners like Acer and Asus, demonstrating the integration of the AI Assistant Builder into upcoming systems. This offering is intended to help developers and solution providers more easily build tailored AI agents for deployment within their organizations or for delivery to clients.

Dell unveiled the Dell AI platform with Intel Gaudi 3 AI accelerators. At the core of the Dell AI platform is the PowerEdge XE9680 server optimized for AI workloads. The server’s key features include:

• Eight Intel Gaudi 3 accelerators, each with 128GB of HBM and 3.7 TB/s of memory bandwidth, suited to large language models and computer vision.

• 5th-Gen Intel Xeon processors with up to 64 cores and PCIe Gen-5 slots, designed to handle complex computations with ease.

• Scalable storage and networking with 32 DIMM slots, capacity for 16 drives, and 800 Gigabit Ethernet (GbE), intended to keep data flowing and to avoid storage and connectivity bottlenecks.

• Energy-efficient air cooling, optimized to support large-scale AI workloads such as GenAI and machine learning.
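To put the memory figures in the list above into perspective, here is a rough, back-of-the-envelope sketch of how much HBM a model's weights alone would occupy. It assumes 16-bit (BF16) weights at 2 bytes per parameter and takes roughly 8 billion parameters for Llama 3.1 8B; it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The helper name `weights_gb` is hypothetical.

```python
# Figures quoted in the article for the Dell server.
HBM_PER_ACCELERATOR_GB = 128
ACCELERATORS_PER_SERVER = 8

# Assumption: BF16 weights, 2 bytes per parameter.
BYTES_PER_PARAM_BF16 = 2


def weights_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Approximate weight footprint in decimal gigabytes (weights only)."""
    return num_params * bytes_per_param / 1e9


# Assumption: Llama 3.1 8B has roughly 8e9 parameters.
llama_8b_gb = weights_gb(8e9)
server_hbm_gb = HBM_PER_ACCELERATOR_GB * ACCELERATORS_PER_SERVER
fits_on_one_accelerator = llama_8b_gb <= HBM_PER_ACCELERATOR_GB

print(f"Llama 3.1 8B weights: ~{llama_8b_gb:.0f} GB")
print(f"HBM per server: {server_hbm_gb} GB")
print(f"Fits on one accelerator: {fits_on_one_accelerator}")
```

Under these assumptions the smallest model named in the article leaves most of a single accelerator's memory free for caches and batching, while the eight-accelerator server offers about 1TB of HBM in total for larger models.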

The platform includes a pre-validated open-source software stack that supports:

• Frameworks like PyTorch and Hugging Face for easy model fine-tuning.

• Kubernetes for flexible resource scheduling and orchestration.

• Grafana and Prometheus for real-time observability and monitoring.

Additionally, the Dell Enterprise Hub offers a catalog of models optimized for Intel Gaudi 3, giving developers an expedited path to implementation.

Also at Computex, Intel showed a sneak peek at Panther Lake, the company’s lead client computing processor built on the Intel 18A process node, along with other members of its client portfolio.
