Nvidia’s NVLink Fusion lets partners such as Alchip, Astera Labs, and Marvell develop custom AI silicon, while Fujitsu and Qualcomm plan to integrate custom CPUs with Nvidia GPUs. Nvidia presents this “democratization” as part of a broader re-architecture of data centers as AI becomes integrated into every computing platform. NVLink Fusion also lets cloud providers scale AI factories using Nvidia’s rack-scale systems and high-speed networking. With fifth-generation NVLink offering 1.8 TB/s per GPU, Nvidia aims to make NVLink as pervasive as CUDA and the standard fabric for heterogeneous data centers.

Alchip Technologies, Astera Labs, Cadence, Marvell, MediaTek, and Synopsys plan to develop custom AI silicon within the Nvidia NVLink ecosystem, while Fujitsu and Qualcomm plan to build custom CPUs integrated with Nvidia GPUs, NVLink scale-up, and Spectrum-X scale-out networking. That’s a pretty good start for bringing NVLink into the wider processor sphere.

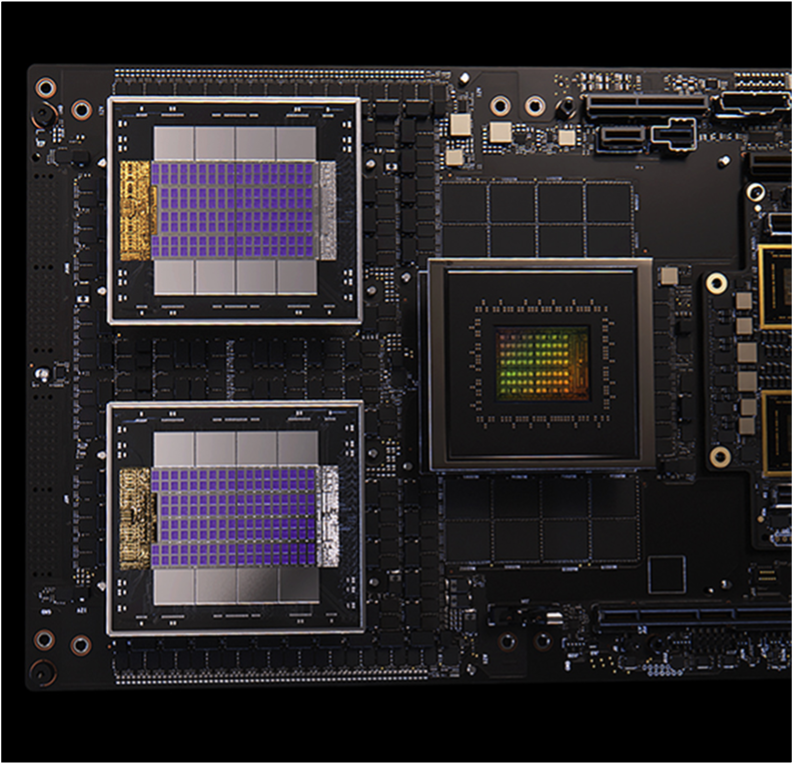

NVLink modules. (Source: Nvidia)

Nvidia calls this democratization NVLink Fusion—a new silicon component that allows industries to create semi-custom AI infrastructure by utilizing the extensive network of partners developing with Nvidia NVLink, a widely adopted computing fabric.

“A significant shift is occurring: For the first time in decades, data centers must undergo fundamental re-architecture—AI is becoming integrated into every computing platform,” stated Jensen Huang, Nvidia founder and CEO. “NVLink Fusion broadens Nvidia’s AI platform and substantial ecosystem for partners to develop specialized AI infrastructures.”

Nvidia says NVLink Fusion also gives cloud providers a straightforward way to scale out AI factories to large numbers of GPUs using any ASIC, Nvidia’s rack-scale systems, and the Nvidia end-to-end networking platform. That platform delivers throughput of up to 800 Gb/s and includes Nvidia ConnectX SuperNICs, Nvidia Spectrum-X Ethernet, and Nvidia Quantum-X800 InfiniBand switches, with co-packaged optics coming soon.

By using NVLink Fusion, hyperscalers can collaborate with the Nvidia partner ecosystem to integrate Nvidia rack-scale solutions for seamless deployment within data center infrastructure.

To maximize AI factory throughput and performance while staying power-efficient, Nvidia’s fifth-generation NVLink platform, which underpins the compute-dense GB200 NVL72 and GB300 NVL72 racks, provides a total bandwidth of 1.8 TB/s per GPU, 14x that of PCIe Gen5.
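The 14x figure can be sanity-checked with simple arithmetic. The sketch below assumes (this is not stated in the article) that the comparison is against a PCIe Gen5 x16 link running at 32 GT/s per lane, with bandwidth counted in both directions as NVLink’s 1.8 TB/s total is. The constant and function names are hypothetical, chosen for illustration only.

```python
# Assumptions (not from the article): PCIe Gen5 at 32 GT/s per lane, an x16 link,
# and bandwidth counted in both directions, matching NVLink's "total" figure.
PCIE5_GT_PER_LANE = 32            # gigatransfers per second per lane
PCIE5_LANES = 16                  # x16 link
ENCODING_EFFICIENCY = 128 / 130   # PCIe Gen5 128b/130b line coding overhead

NVLINK5_PER_GPU_GBPS = 1800       # 1.8 TB/s per GPU, as quoted in the article


def pcie5_x16_bidirectional_gbps(raw: bool = True) -> float:
    """Hypothetical helper: PCIe Gen5 x16 bandwidth in GB/s, both directions combined.

    raw=True ignores encoding overhead (the usual marketing number);
    raw=False applies the 128b/130b encoding efficiency.
    """
    per_direction_gbit = PCIE5_GT_PER_LANE * PCIE5_LANES  # Gb/s in one direction
    if not raw:
        per_direction_gbit *= ENCODING_EFFICIENCY
    return 2 * per_direction_gbit / 8  # two directions, bits -> bytes


if __name__ == "__main__":
    for raw in (True, False):
        pcie = pcie5_x16_bidirectional_gbps(raw)
        ratio = NVLINK5_PER_GPU_GBPS / pcie
        print(f"raw={raw}: PCIe Gen5 x16 = {pcie:.1f} GB/s, NVLink ratio = {ratio:.1f}x")
```

Under these assumptions, PCIe Gen5 x16 comes to roughly 126 to 128 GB/s in total, so 1.8 TB/s works out to about 14x whether or not encoding overhead is included.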

Leading hyperscalers are already deploying Nvidia NVLink full-rack solutions and can speed time to availability by standardizing their heterogeneous silicon data centers on the Nvidia rack architecture with NVLink Fusion.

Jensen said at Computex that although he likes to sell the whole stack of Nvidia products, he’s happy to sell just one part, too. Nvidia is on a path to making NVLink as pervasive as CUDA, which, given AMD’s and Intel’s attempts to become the go-to processor interconnect, is a bit of a coup.
