John Simpson, SiFive’s senior principal architect, offered a guide through a fast-changing RISC-V world. He explained how the open RISC-V design lets builders craft cores exactly for AI needs, while profiles like RVA23 keep everyone’s software running smoothly together. As models balloon into giant matrix math (a trend visible in Epoch AI’s charts), the community is racing to add matrix support without breaking portability. Simpson explained that the trusty vector extension still powers activations and normalizations, whose exponential operations can slow everything down. He walked the audience through four matrix-extension ideas brewing in the task groups, each one a different flavor for edge, cloud, or decode-heavy chatbots. His closing message: with the right balance of freedom and common ground, RISC-V stands ready to power tomorrow’s AI, from tiny gadgets to massive data centers.

John Simpson, senior principal architect at SiFive, presented at the RISC-V Summit in October 2025, explaining how RISC-V instruction set extensions are evolving to support artificial intelligence and machine learning workloads. RISC-V’s open architecture lets implementers tailor cores for specific domains, and he emphasized that profiles such as RVA23 provide a shared baseline that preserves software portability across vendors. In his view, the ecosystem depends on this balance between customization and standardization to scale without fragmentation.

Simpson linked the urgency of new extensions to changes in AI model architecture. Data from Epoch AI show that workloads have shifted from primarily vector-oriented computation toward large-scale matrix operations. This shift increases demand for efficient matrix multiplication and broader support for reduced-precision data types. Although different application domains favor different instruction set strategies, software portability remains manageable because real-world workloads rely on a small number of dominant matrix-multiply patterns.

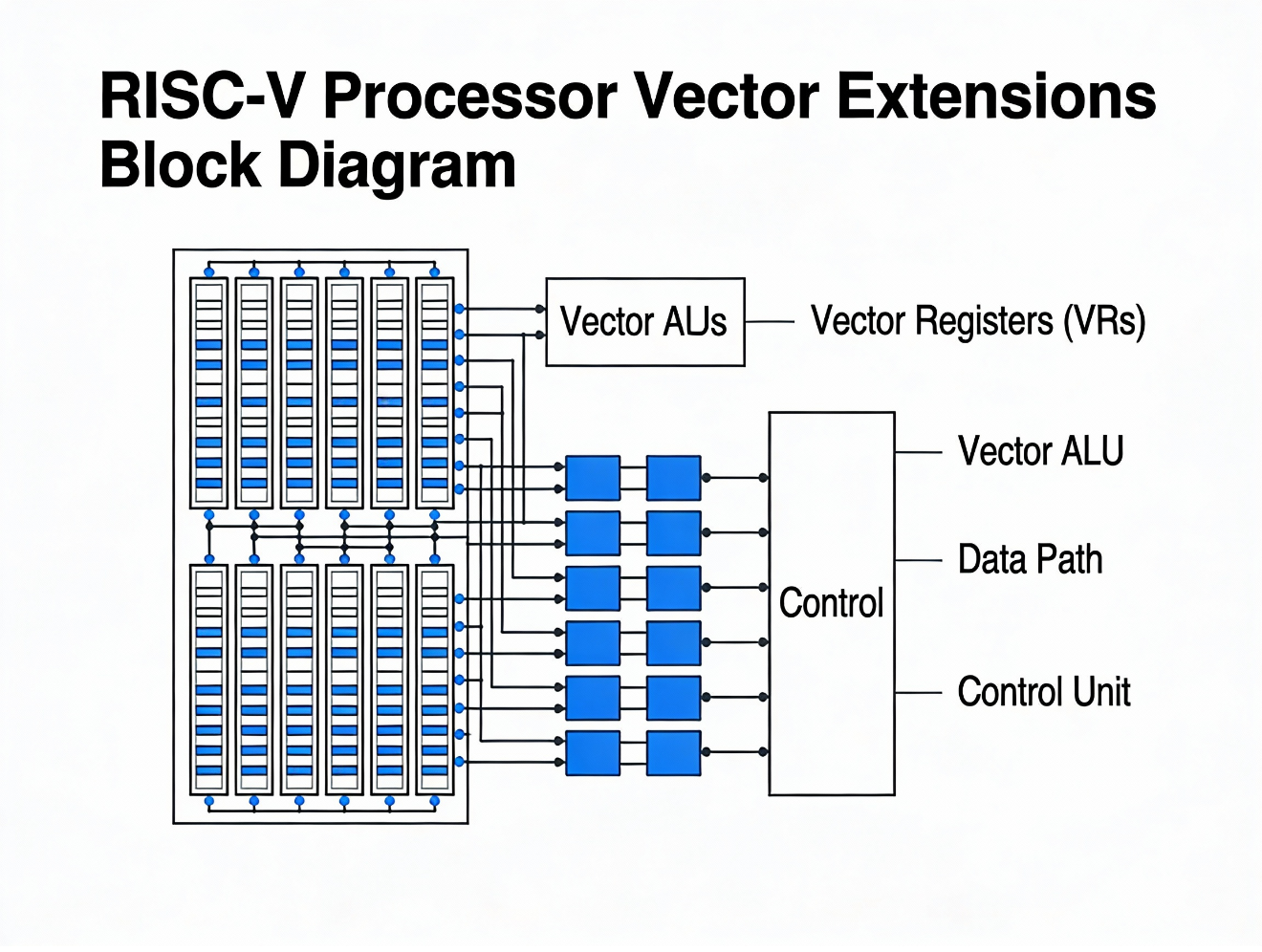

Figure 1. Block diagram of vector extensions in RISC-V

Simpson positioned the RISC-V Vector Extension (RVV) as the foundation for AI execution beyond matrix multiplication. Many AI kernels spend substantial time in activation and normalization functions such as LayerNorm, Softmax, Sigmoid, and GELU. These functions rely on exponentials, reductions, and element-wise operations, which can become the throughput bottleneck once hardware accelerates the main linear algebra. For example, prefilling a Llama-3 70B model with 1,000 tokens requires billions of exponential evaluations. RVV 1.0 already supports a wide range of integer and floating-point data types, and newer extensions add BF16 support through conversion and widening multiply-accumulate operations. Current proposals aim to add native BF16 arithmetic and FP8 formats through efficient encoding mechanisms, often by introducing an alternate-format bit in the vtype control register to avoid longer instructions. Even lower-precision types, such as the emerging OCP MX formats, may require additional encoding space.
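The "billions of exponential evaluations" figure can be sanity-checked with a rough count. The sketch below is a back-of-the-envelope estimate, not a calculation from the talk: the layer count, head count, and feed-forward width are assumptions taken from the publicly released Llama-3 70B configuration, and only causal softmax and SiLU activations are counted, at one exponential per element.

```python
# Rough count of exponential evaluations in one prefill pass.
# ASSUMPTION: shape values come from the public Llama-3 70B config,
# not from the talk. Names such as ModelShape are illustrative only.
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    layers: int = 80        # transformer blocks
    heads: int = 64         # query heads per block
    ffn_dim: int = 28_672   # width of the gated feed-forward layer


def softmax_exps(shape: ModelShape, tokens: int) -> int:
    """Causal attention: token i scores i + 1 positions, one exp each."""
    scores_per_head = tokens * (tokens + 1) // 2
    return shape.layers * shape.heads * scores_per_head


def activation_exps(shape: ModelShape, tokens: int) -> int:
    """SiLU(x) = x * sigmoid(x): one exp per feed-forward element."""
    return shape.layers * shape.ffn_dim * tokens


def prefill_exps(shape: ModelShape, tokens: int) -> int:
    """Total exponentials from softmax plus feed-forward activations."""
    return softmax_exps(shape, tokens) + activation_exps(shape, tokens)


if __name__ == "__main__":
    shape = ModelShape()
    print(f"softmax:    {softmax_exps(shape, 1_000):,}")
    print(f"activation: {activation_exps(shape, 1_000):,}")
    print(f"total:      {prefill_exps(shape, 1_000):,}")
```

Under these assumptions a 1,000-token prefill needs roughly 2.6 billion exponentials for softmax and 2.3 billion for activations, about 4.9 billion in total, which is consistent with the order of magnitude quoted in the talk.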

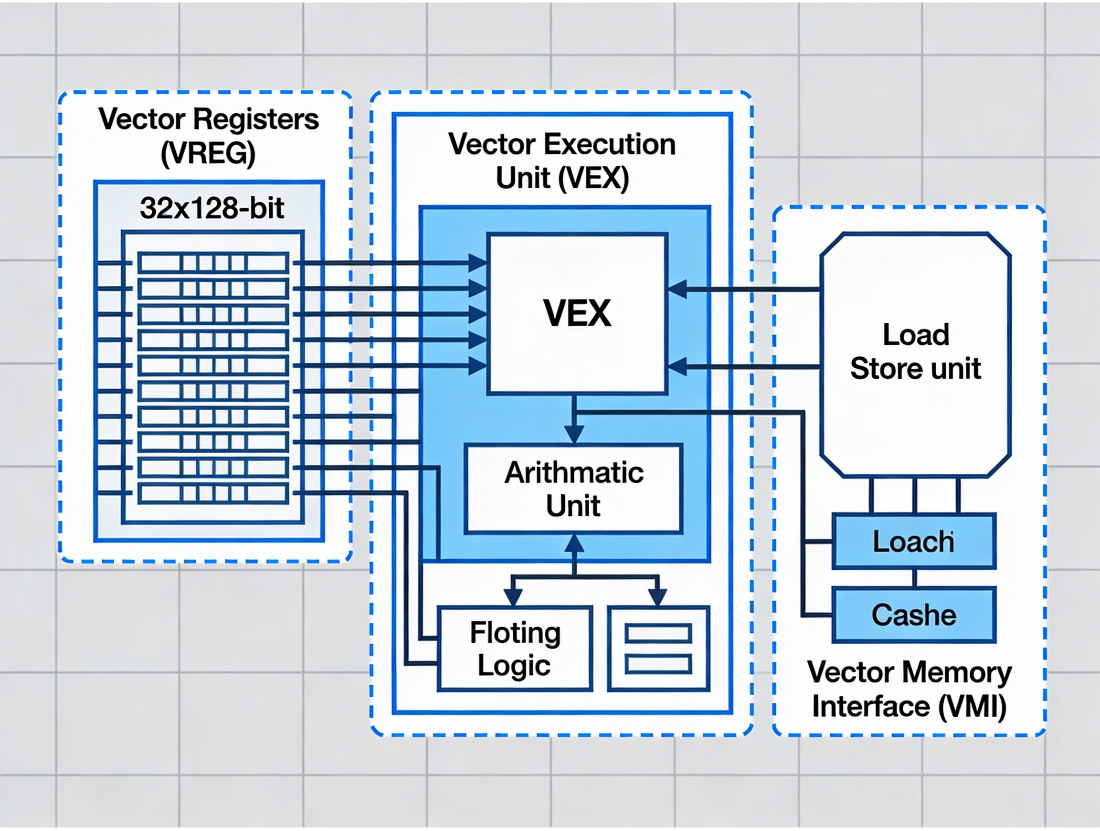

Simpson then examined several matrix extension directions under active study. One approach adds vector batch dot products while reusing existing vector registers and architectural state, targeting workloads such as GEMV that dominate large language model decode phases. Another approach treats vector registers as matrix tiles, adds minimal state, and prioritizes efficiency for area- and power-constrained designs. A third approach introduces dedicated accumulator state for matrix results while sourcing operands from vectors, increasing arithmetic intensity by keeping accumulators close to compute units. A final direction defines independent matrix state for operands and results, offering broad design flexibility at the cost of additional load and store complexity; this direction still lacks consensus.
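Two of these dataflows can be illustrated with a small functional model. This is only a sketch under assumptions: the function names and list-of-lists layout are hypothetical, and the rank-1 (outer-product) update is one common way to use a resident accumulator tile, not a detail confirmed by the talk or by any proposed instruction encoding.

```python
# Functional models of two matrix dataflows, using plain Python lists.
# ASSUMPTION: names and data layout are illustrative, not from any spec.

def gemv_batch_dot(matrix, vector):
    """Decode-style GEMV: one dot product per matrix row, all rows
    sharing the same input vector (a batch of dot products)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def matmul_outer_product(a, b):
    """GEMM as a sum of rank-1 updates into an accumulator tile.

    a is M x K and b is K x N. The M x N accumulator stays resident
    while each step consumes one column of a and one row of b, which
    stand in for the two vector operands."""
    m, k, n = len(a), len(b), len(b[0])
    acc = [[0] * n for _ in range(m)]
    for step in range(k):
        col = [a[i][step] for i in range(m)]  # vector operand 1
        row = b[step]                         # vector operand 2
        for i in range(m):
            for j in range(n):
                acc[i][j] += col[i] * row[j]
    return acc


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(gemv_batch_dot(A, [5, 6]))
    print(matmul_outer_product(A, B))
```

In the first function every weight is used once per input vector, which is why decode-phase GEMV tends to be bound by memory bandwidth. In the second, each pair of vector operands updates the whole accumulator tile, so more arithmetic is done per element loaded.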

The SiFive senior principal architect concluded that no single extension strategy fits all workloads. Batch size, memory bandwidth, and cache behavior strongly influence efficiency, especially for large language models. Ongoing work across these extensions aims to position RISC-V as a scalable AI platform spanning edge devices to large systems, while continued profiling and extension standardization ensure that architectural diversity does not undermine software compatibility. Combined with an NPU, these extensions can form the basis of a dedicated AI processor; they can also be implemented in a general-purpose (GP) CPU.
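The effect of batch size on efficiency can be made concrete with an arithmetic-intensity estimate, meaning floating-point operations per byte moved. The sketch below is a simplified model under stated assumptions: a single K x N weight matrix, 2-byte (for example BF16) weights and activations, and ideal caching in which every operand crosses the memory interface exactly once. The function name and the 8192 x 8192 layer size are illustrative choices, not figures from the talk.

```python
# Arithmetic intensity (FLOPs per byte) of one dense layer.
# ASSUMPTION: ideal reuse, each operand is read or written exactly once.

def arithmetic_intensity(batch: int, k: int, n: int,
                         weight_bytes: int = 2, act_bytes: int = 2) -> float:
    """Return FLOPs per byte for a (batch x k) @ (k x n) product."""
    flops = 2 * batch * k * n                    # one multiply and one add per MAC
    weight_traffic = k * n * weight_bytes        # weights read once
    act_traffic = batch * (k + n) * act_bytes    # inputs read, outputs written
    return flops / (weight_traffic + act_traffic)


if __name__ == "__main__":
    for batch in (1, 8, 64, 512):
        ai = arithmetic_intensity(batch, 8192, 8192)
        print(f"batch {batch:>3}: {ai:7.2f} FLOPs/byte")
```

With a batch of one, as in single-stream decode, the model gives about 1 FLOP per byte, so memory bandwidth sets the ceiling. At a batch of 64 the same layer reaches roughly 63 FLOPs per byte, which is where dedicated matrix state and larger accumulators start to pay off.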

In our 2026 AI Processor Market Report, we examine several RISC-V-based AIPs.
