
In the first decade, the technology gods separated the data and the code and invented the mainframe. It was expensive to install, complicated to operate, and had little software. Then the technology gods created an IT department to feed and care for the mainframe. Corporate management thought it was too expensive, but the IT department solved more problems than it created, so they went along with the program.


In the second decade, the technology gods invented the minicomputer. It was so affordable that any department could have its own system, and soon the corporate landscape was marked with many small computer islands, none of which could talk to each other or to the mainframe. Nevertheless, department managers could get solutions to problems without bothering the mainframe, and corporate management went along with the program.

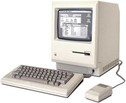

In the third decade, the technology gods invented the microprocessor, and the MPU begat the personal computer. Soon everyone had a computer on their desk. Users developed their own databases, which rarely agreed with one another. Chaos reigned, and the IT gods were displeased, so they invented file servers to store and protect the company’s data assets, and local area networks to connect personal computers to servers. IT spending increased, but employees were more productive, so corporate management went along with the program.

In the fourth decade, the technology gods invented the Internet and the World Wide Web. Organizations needed an infrastructure to support exponentially increasing e-commerce and employee productivity requirements. Massive datacenters sprang up overnight on empty fields. The servers in these datacenters could not physically accommodate all the storage capacity needed for these new users and applications, so the technology gods invented disaggregated storage systems – Network Attached Storage (NAS) and Storage Area Networks (SAN) – that allowed hundreds of storage devices to operate with dozens of servers. Then the technology gods created specialized IT departments to manage these new storage networks. The datacenters kept ahead of end-user computing requirements, so management went along with the program.


In the fifth decade, the technology gods invented the smartphone and third-generation (3G) wireless networks that allowed billions of users to access the Internet. As applications migrated from desktop and laptop computers to smartphones, the demands on network bandwidth and computing resources grew. Those demands waxed and waned over the course of the day, complicating the work of network administrators, who were often constrained by service level agreements (SLAs). The technology gods saw this problem and created incremental resources in “the cloud” that allowed datacenters to adjust capacity dynamically to meet demand. Management saw that this approach could save money and went along with the program.

In the sixth decade, the technology gods invented faster fourth-generation (4G) wireless networks and streaming video services that once again taxed the existing infrastructure. But when datacenter operators tried to expand their operations, the complexity of the systems they had put in place got in their way. Their siloed implementations made their systems less responsive and harder to manage. Even simple changes often required the approval of specialists in the storage, networking, and server departments. Operators had to spend more of their time tweaking their existing hardware and software, which left less time to address new features and applications. Nobody was happy.

At the start of the seventh decade, the technology gods saw that the system architectures they had evolved over decades had become sclerotic, unresponsive, and unmanageable. Although the calendar said they were due for a sabbatical, they deferred their rest period and used the time to design a simpler architecture that would be easier to deploy and operate. All major elements would be virtualized to simplify resource allocation and location. All physical elements – processors, networking, and storage – would reside in the same box. System capacity could be increased by using more and faster processors, more and larger storage devices, and faster networking connections. Multiple boxes, each containing processing, networking, and storage, could be combined to accommodate larger or more diverse workloads. Everything would be managed from a single command console. They summarized their conclusions in an email with the subject line “Hyper-Converged Infrastructure” that they sent to the CTOs of all the leading hardware and software suppliers.


And only then did the technology gods take a well-deserved rest.