Global AI infrastructure spending is estimated at $375 billion in 2025 and projected to reach $500 billion in 2026, with one estimate putting it at $7 trillion over the next decade. Of 108 companies offering AI processors, one holds 95% of the hardware market, and the field is expected to consolidate to about 25 survivors by 2030. Nvidia remains dominant, delivering over 1,000 racks a week, even though its data center growth fell short of some investors' expectations. India and China are pursuing indigenous GPU programs, but their timelines remain questionable given the enormous development costs and technical challenges.

UBS estimates that companies worldwide will spend $375 billion on AI infrastructure in 2025, rising to $500 billion in 2026. That figure covers only hardware and software; it excludes facilities, which the US Commerce Department said accounted for 25% of the past quarter’s growth. Brookfield Asset Management estimates that AI infrastructure spending could reach $7 trillion over the next decade.

(Source: Nvidia)

In our upcoming Q3 report on AI processors, we note that of the 108 companies offering AI processors, one holds 95% of the hardware market. We expect the market to consolidate to approximately 25 survivors by 2030. Of the five market segments we define (hyperscale training, cloud infrastructure, automotive, edge, and IoT), the suppliers most likely to survive are those in the IoT and edge segments: these markets have large PAMs but low margins, which makes them unattractive to the major players.

The US leads the industry with 50 publicly held firms and start-ups, China is pushing DeepSeek and Huawei, and India has announced an indigenous GPU program. Hyperscalers and governments cannot, and will not, keep expanding their facilities at the current pace, because power, cooling, and capital are all limiting factors. However, they must stay competitive. If Nvidia, AMD, and a few others keep their promise of releasing a new processor every other year, and each new processor offers enough improvement in features and performance, then the major players can settle into a steady state with growth approaching GDP levels. But, as we’ve seen over the past decade, something new always emerges; each time it does, forecasts get disrupted and we start searching for the next leader.

There is enormous potential ROI in this market. The hyperscalers are not simply throwing money away; they answer to demanding shareholders and boards of directors. The industry’s newness is pushing profit expectations further out. The AI providers at AWS, Google, and Microsoft are still trying to perfect their product models and pricing strategies for such a fast-changing industry. It’s like the old joke about fixing an airplane while it’s flying. But make no mistake: AI is transforming everything, from basic home appliances to cars, surgery, and investment strategies.

India is planning a GPU, and some question whether that is a realistic venture for the country. India undoubtedly has the talent; the question is whether the government, which will be the ultimate funder, has the will, and whether private capital can be attracted to the project. The sunk costs of fielding a new, competitive GPU are enormous, and the estimates India is offering today are not realistic. China has poured billions into its GPU development programs and is not yet (and won’t be for several years) a competitive force.

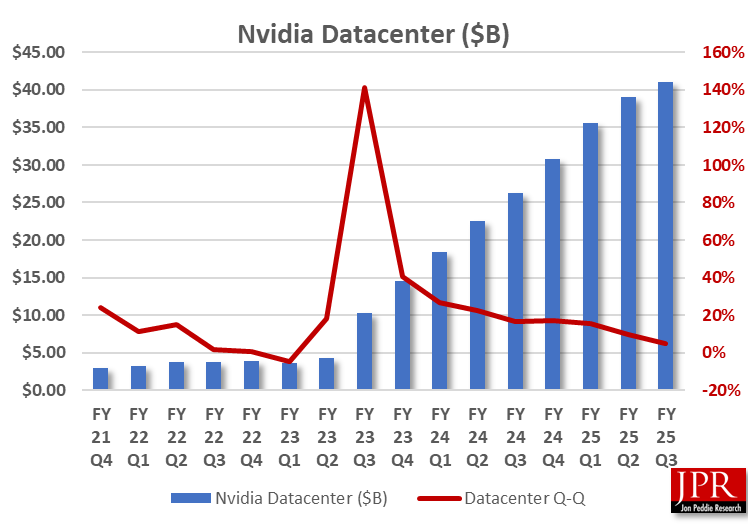

The leader in GPUs is, of course, Nvidia, which has just reported its FY26 Q2 results. Despite record sales and profits, the company did not show the data center gains some investors had hoped for. Nonetheless, CEO Jensen Huang was optimistic, forecasting $3 trillion to $4 trillion in AI infrastructure spending by 2030, not including China. The company is also uncertain whether President Trump’s plan to take 15% of Nvidia’s China AI chip revenue could face legal challenges. As noted in a follow-up call, there is no signed agreement yet between Nvidia and the US government, only a verbal one.

Nvidia said it didn’t sell any H20 processors to Chinese customers in the quarter, but it did benefit from a $180 million release of previously reserved H20 inventory, tied to sales to a customer based outside China.

Figure 1. Data center sales over time.

The company delivered over 1,000 racks a week last quarter. This quarter the rate is even higher, and all of those racks are Blackwell.

Huang stated that next-generation platform elements, including the Vera CPU and Rubin GPU, are now in manufacturing, with production expected to ramp in the second half of 2026. The first products based on this architecture will debut in the same time frame.

Nvidia will continue to lead in AI processors; none of the other 100-plus companies developing AI processors comes close in volume or revenue. We expect IoT and edge shipments to significantly surpass data center volumes. Still, as mentioned, those segments lack the margins, average selling prices, and excitement generated by $10,000 to $30,000 data center GPUs.

Our quarterly report, AI Processors Market Development, covers the AI processor suppliers in more detail: where they are, what segments they are pursuing, and how much money has been invested in them.
