AMD recently held its Advancing AI conference, where AMD Chair and CEO Dr. Lisa Su emphasized the company’s focus on artificial intelligence. At the event, AMD launched new AI solutions, including its Instinct MI300 series data center AI accelerators (the MI300X accelerators, the MI300A APUs, and the MI300X platform), as well as the updated ROCm 6 open software stack supporting LLMs and the Ryzen 8040 series mobile processors with Ryzen AI. The company also highlighted its various industry partnerships within the field of AI.

What do we think? Nvidia has no doubt firmly planted its flag within the realm of AI, but AMD is neither rolling over nor ceding swaths of territory to its rival. AI is just one area of focus for the vendor, but it is an important one, and one that is growing at an unprecedented rate. It’s also clear that AMD, like Nvidia, recognizes that we are at the dawn of AI, with a long journey looming ahead, and AMD plans to continue ramping up its AI efforts.

AMD continues its AI drive with Instinct MI300 series accelerators and more

Without question, 2023 was the year of AI. Not long ago, most people were only familiar with it through movies and books, but they have been learning quickly about artificial intelligence and how to use it. From writing memos to term papers, creating graphics for social media to pieces of art, getting assistance online with gift ideas to dinner suggestions, receiving more accurate weather forecasts to more accurate medical diagnoses, optimizing code to architectural and structural design, AI has become ever present in our lives, whether we realize it or not and whether we want it or not. And we are only at the early stages of harnessing the power of this technology.

It’s hard to believe that it has only been one year since OpenAI gave us ChatGPT. “In this short amount of time, AI hasn’t just progressed, it’s actually exploded,” said Dr. Lisa Su, AMD chair and CEO. “The year has shown us that AI isn’t just a kind of cool, new thing. It’s actually the future of computing.”

With the start of a new year, AI shows no signs of slowing down in terms of development and adoption. AMD billed it as the most transformational technology in 50 years during its Advancing AI conference last month, where Su heralded the company’s plans, strategies, efforts, and growing achievements in this area. While AMD has introduced many diverse innovations and new products during the past year for a wide range of businesses and industries, “today, it’s all about AI,” she said at the AI-focused event.

To this end, AMD launched new AI solutions, including the AMD Instinct MI300 series data center AI accelerators, the ROCm 6 open software stack with new features and optimizations supporting large language models (LLMs), and Ryzen 8040 series processors with Ryzen AI.

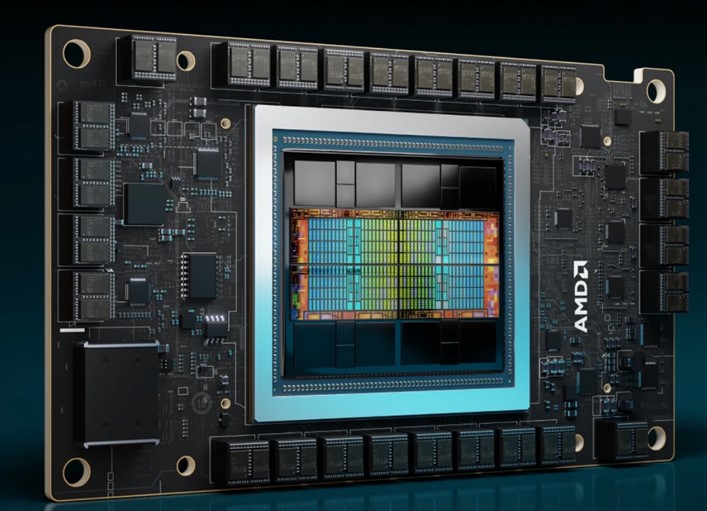

Designed for demanding generative AI workloads and HPC applications, the AMD Instinct MI300 series include:

- MI300X accelerators: Use a dozen 5nm and 6nm chiplets and connect eight stacks of HBM3.

- MI300A APUs: Combine CPU cores and GPUs (Epyc processors and Instinct accelerators) with shared memory in a single package, in what AMD bills as the first APU accelerator for AI and HPC.

- MI300X platform: Based on the fourth-generation Infinity architecture, the ready-to-deploy platform integrates eight fully connected MI300X GPU OAM modules, for 1.5TB of total HBM3 memory, and fits within existing infrastructure.

The MI300 series are built on the AMD CDNA 3 chiplet architecture and feature AMD Matrix Core technologies for heightened computational throughput. They offer large memory density and high-bandwidth memory, as well as boosted compute performance.

Other MI300 series features include:

- Support for INT8, FP8, BF16, FP16, TF32, FP32, and FP64, as well as sparse matrix data

- HBM3 dedicated memory

- Infinity cache

- Infinity Fabric technology

- Enhanced I/O with PCIe 5 compatibility

- Support for specialized data formats

| | Instinct MI300A APU | Instinct MI300X accelerator |
| --- | --- | --- |
| Form factor | Servers | Servers |
| GPU | CDNA3 | CDNA3 |
| Lithography | TSMC 5nm/6nm FinFET | TSMC 5nm/6nm FinFET |
| Stream processors | 14,592 | 19,456 |
| Matrix Cores | 912 | 1,216 |
| Compute units | 228 | 304 |
| Peak engine clock | 2,100MHz | NA |
| CPU Epyc architecture | Zen 4 | NA |
| CPU cores | 24 x86 | NA |
| CPU peak engine clock | 3,700MHz | NA |
| TDP | 550W (760W peak) | 750W peak |
| Dedicated memory | 128GB unified HBM3 | 192GB HBM3 |
| Bus type | PCIe 5.0 x16 | PCIe 5.0 x16 |
| Infinity Fabric links | 8 | 8 |
| Peak theoretical memory bandwidth | 5.3TB/s | 5.3TB/s |
| Peak Infinity Fabric link bandwidth | 128GB/s | 128GB/s |
| Cooling | Passive & active | Passive OAM |
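As a quick sanity check on the table, the stream processor and Matrix Core counts can be divided by the compute unit counts to see whether both parts share the same per-unit layout. The short Python sketch below does only that arithmetic, using figures copied from the table; the dictionary and function names are ours, purely for illustration.

```python
# Figures copied from the specification table above.
SPECS = {
    "MI300A": {"compute_units": 228, "stream_processors": 14_592, "matrix_cores": 912},
    "MI300X": {"compute_units": 304, "stream_processors": 19_456, "matrix_cores": 1_216},
}


def per_compute_unit(spec):
    """Return (stream processors per CU, Matrix Cores per CU) for one table column."""
    cus = spec["compute_units"]
    return spec["stream_processors"] / cus, spec["matrix_cores"] / cus


# Hypothetical helper output: one (stream processors, Matrix Cores) pair per part.
ratios = {name: per_compute_unit(spec) for name, spec in SPECS.items()}

for name, (sp_per_cu, mc_per_cu) in ratios.items():
    print(f"{name}: {sp_per_cu:g} stream processors and {mc_per_cu:g} Matrix Cores per compute unit")
```

Both columns work out to 64 stream processors and 4 Matrix Cores per compute unit, so by these numbers the MI300X differs from the MI300A in how many compute units it carries rather than in how each unit is built.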

With a PCIe Gen 5 (128GB/s) bus and 5.3TB/s of peak theoretical memory bandwidth per accelerator, the MI300X platform has a total aggregate bidirectional I/O bandwidth of 896GB/s. In terms of HPC performance, it offers a total theoretical peak of 1.3 PFLOPS for double-precision matrix (FP64), single-precision matrix (FP32), and single-precision (FP32) operations. At double precision (FP64), it offers 653.6 TFLOPS.
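The platform-level numbers can be related back to the per-accelerator figures with a little arithmetic. The sketch below is our own back-of-the-envelope check, not an AMD calculation: it assumes the platform totals are simple sums across the eight MI300X modules, and the variable names are illustrative.

```python
GPUS_PER_PLATFORM = 8         # eight MI300X OAM modules per platform
HBM3_PER_GPU_GB = 192         # dedicated memory per MI300X, from the table
PLATFORM_DP_TFLOPS = 653.6    # platform double-precision figure quoted above
PLATFORM_IO_GBPS = 896        # platform aggregate bidirectional I/O quoted above
LINK_GBPS = 128               # peak Infinity Fabric link bandwidth, from the table

# Total HBM3 across the platform, in GB and in TB (1TB taken as 1,024GB).
total_hbm3_gb = GPUS_PER_PLATFORM * HBM3_PER_GPU_GB
total_hbm3_tb = total_hbm3_gb / 1024

# Assumption: the platform figure is an even sum over the eight accelerators.
dp_tflops_per_gpu = PLATFORM_DP_TFLOPS / GPUS_PER_PLATFORM

# How many 128GB/s links the quoted I/O figure corresponds to.
links_implied = PLATFORM_IO_GBPS / LINK_GBPS

print(f"Total HBM3: {total_hbm3_gb}GB ({total_hbm3_tb}TB)")
print(f"Implied double-precision throughput per accelerator: {dp_tflops_per_gpu:.1f} TFLOPS")
print(f"I/O figure expressed in 128GB/s links: {links_implied:g}")
```

The memory total matches the 1.5TB quoted for the platform. The I/O figure works out to seven links at 128GB/s, which would be consistent with each accelerator connecting to its seven peers, though that reading is our interpretation rather than something AMD's figures spell out here.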

Furthering its AI agenda, AMD introduced its ROCm 6 open software stack, which includes programming models, tools, drivers, compilers, libraries, and more for AI models and HPC workloads using Instinct accelerators. The software is optimized to achieve top HPC and AI performance from the MI300 accelerators and enables users to develop, test, and deploy applications in an open-source, integrated software ecosystem.

Partners in AI

In an effort to advance AI PCs, the company also launched the new Ryzen 8040 series mobile processors delivering AI compute capability, along with Ryzen AI 1.0 software, which makes it easier for users to build and deploy machine learning models on their AI PCs. Through AMD’s partnership with Microsoft, the processors will be able to fully leverage the Windows 11 ecosystem. In Q1 2024, AI PCs with AMD Ryzen 8040 processors will begin shipping from OEMs including Acer, Asus, Dell, HP, Razer, and Lenovo. The Ryzen AI software, available now, includes tools and runtime libraries for optimizing and deploying AI inference on Ryzen AI-powered PCs.

The processors have AMD RDNA 3 architecture-based Radeon graphics, and select systems are powered by the AMD XDNA architecture built for AMD Ryzen AI, giving creative professionals, gamers, and mainstream users a powerful laptop that can run advanced AI experiences. Looking ahead to 2024, AMD’s next-gen Strix Point CPUs will include the AMD XDNA 2 architecture, which, says AMD, is designed to deliver more than a 3× increase in AI compute performance compared to the prior generation.

During her keynote, Su introduced various industry partners, including Dell, HPE, Lenovo, Microsoft, Oracle Cloud, and others, that are using AMD AI hardware and software.

On the supercomputing side, AMD’s Instinct MI300A APUs will be powering blades in the HPE Cray Supercomputing EX255a and in France’s GENCI Adastra supercomputer. Eviden is also working on an MI300A-powered blade for its BullSequana XH3000 DLC supercomputer line, and in the first half of 2024, the company will deliver its first AMD Instinct MI300A-based supercomputer to the Max Planck Data Facility in Germany.

AI Alliance

With a focus on fostering an open community to accelerate responsible innovation in AI, AMD has joined forces with IBM, Meta, and others to launch the AI Alliance. The AI Alliance is a community of technology creators, developers, and adopters collaborating to advance safe, responsible AI rooted in open innovation. According to its website, the alliance’s mission is “focused on accelerating and disseminating open innovation across the AI technology landscape to improve foundational capabilities, safety, security, and trust in AI, and to responsibly maximize benefits to people and society everywhere.” This will entail developing and deploying standards and a catalog of vetted tools, working toward responsibly advancing an ecosystem of open foundation models, and supporting the development of AI skills. The alliance will also advocate for open AI by educating the public and policymakers on the benefits, risks, and solutions related to AI.

The AI Alliance will start by forming working groups and a governing board, along with technical oversight committees.