Ahead of Computex and the Intel Tech Tour (ITT) that precedes it, Intel revealed more about its client road map—the one that runs from Meteor Lake (first AI PC, 7 million shipped to date) through Lunar Lake (next-gen AI PC, due Q3’24, production wafers underway) to Arrow Lake (scaling AI PC from mobile to desktop, due Q4’24).

Intel claims to be leading the AI PC transition, citing the largest install base and an expectation of shipping more than 100 million Intel AI PCs with XPU acceleration through 2025. The company also plans to announce ecosystem work around AI-enhanced security and LLM support.

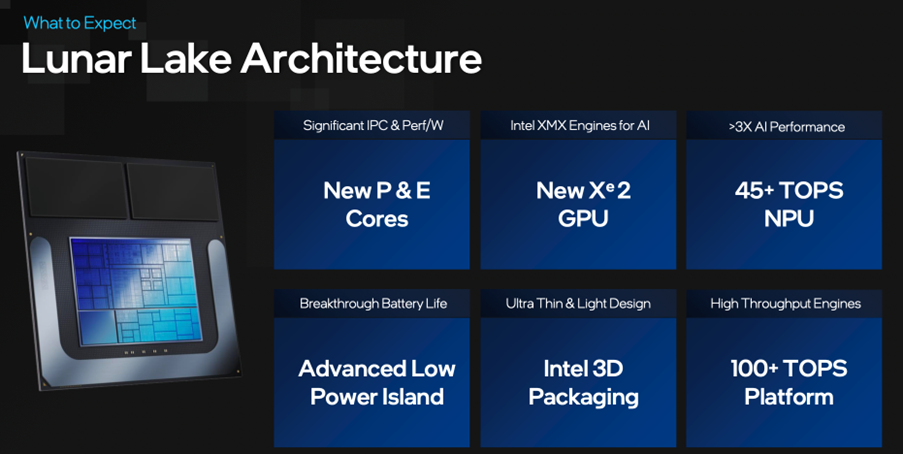

Lunar Lake is the next-gen platform, with new P (performance) and E (efficiency) x86 cores and a new Xe2 GPU. Intel claims the platform can deliver more than 100 TOPS: 60 TOPS come from the new GPU, with Intel XMX acceleration, and 45 TOPS come from an enhanced NPU. XMX is a notable part of Arc's Xe high-performance graphics architecture because it is a dedicated matrix-multiplication acceleration engine. Intel has previously said that XMX AI engines deliver 16 times the compute capability of traditional GPU vector units for AI inferencing operations.
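As a quick sanity check on the platform figure, the GPU and NPU numbers alone already exceed 100 TOPS. The sketch below simply adds the two quoted Int8 figures; the dictionary keys and function name are hypothetical labels, and the CPU's share is left out because Intel has not quoted it here. Summing is only meaningful because Intel states that all of these TOPS figures are quoted in the same data type (Int8).

```python
# Int8 TOPS figures quoted by Intel for Lunar Lake (GPU and NPU only).
# The CPU's contribution has not been broken out, so it is omitted (assumption).
QUOTED_INT8_TOPS = {"gpu_xmx": 60, "npu": 45}  # hypothetical labels


def platform_tops(per_engine: dict[str, int]) -> int:
    """Sum per-engine TOPS; only valid if every figure uses the same data type."""
    return sum(per_engine.values())


total = platform_tops(QUOTED_INT8_TOPS)
print(total)        # 105
print(total > 100)  # True
```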

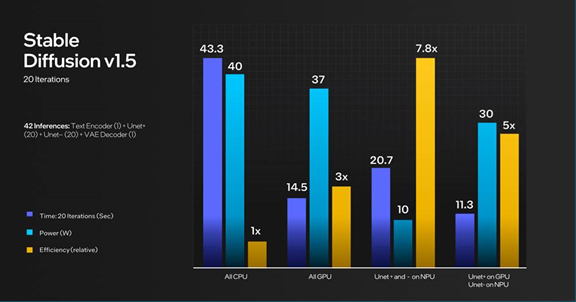

Comparison of performance of various AI processors. (Source: Intel)

Intel says, “All AI TOPS are quoted as Int8, so comparisons across XPUs (CPU, GPU, NPU) are valid from that point of view. Some workloads, such as the Stable Diffusion demo used for the data below, can be split across different XPUs—in this case, the +ve [positive-prompt] inferencing done on the GPU and the -ve [negative-prompt] inferencing on the NPU. In other scenarios, you might want to run multiple workloads, in which case you can do that.”

The company points out that some AI workloads will be a better fit for the NPU, some for the GPU, and some will be able to use both. For example, last year Intel demonstrated Stable Diffusion 1.5 running on Meteor Lake (MTL) with both AI engines working concurrently: the GPU handled positive prompts and the NPU handled negative prompts. Having two AI engines also lets a compute-intensive AI workload run without disrupting Copilot and other next-gen PC experiences.
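To make the split concrete, here is a minimal sketch of one Stable Diffusion denoising step in which the positive-prompt pass is dispatched to one engine and the negative-prompt pass to another, with the two results merged using classifier-free guidance. The functions `predict_noise_gpu` and `predict_noise_npu` are hypothetical stubs, not Intel or OpenVINO APIs, and the guidance scale of 7.5 is an assumed common default rather than a setting from Intel's demo.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the same denoising model compiled for two engines.
# Each stub returns a fixed placeholder prediction so the control flow runs end to end.
def predict_noise_gpu(latent: list[float], prompt: str) -> list[float]:
    return [x * 0.5 for x in latent]   # placeholder positive-prompt prediction


def predict_noise_npu(latent: list[float], prompt: str) -> list[float]:
    return [x * 0.25 for x in latent]  # placeholder negative-prompt prediction


def guided_noise(latent: list[float], positive: str, negative: str,
                 guidance_scale: float = 7.5) -> list[float]:
    """Noise estimate for one step, with both passes submitted concurrently.

    Classifier-free guidance combines the predictions as
    neg + scale * (pos - neg).
    """
    with ThreadPoolExecutor(max_workers=2) as pool:
        pos_future = pool.submit(predict_noise_gpu, latent, positive)
        neg_future = pool.submit(predict_noise_npu, latent, negative)
        pos, neg = pos_future.result(), neg_future.result()
    return [n + guidance_scale * (p - n) for p, n in zip(pos, neg)]


print(guided_noise([1.0, 2.0], "a lighthouse at dusk", "blurry"))
# [2.125, 4.25]
```

Threads are used here on the assumption that a real inference runtime releases Python's GIL while the device executes, so the two passes can overlap; with pure-Python stubs like these, there is no actual parallel speedup.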

Intel says the NPU and the GPU each support several data types, with different throughput for each. For the client Xe1 and Xe2 IPs, XMX running Int8 offers 16× the compute density of baseline FP32 vector ops: 4× comes from using 32-bit data paths with ALUs designed to pack four 8-bit ops, and a further 4× comes from configuring XMX as a four-deep systolic array.
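The 16× figure is simply the product of the two 4× factors. The sketch below writes that arithmetic out; the constant and function names are illustrative, and it models only the ratios Intel quotes, not real clock speeds or unit counts.

```python
# Decomposition of the 16x XMX Int8 figure as Intel describes it.
FP32_BITS = 32      # width of a baseline FP32 vector lane / data path
INT8_BITS = 8       # width of one Int8 operand
SYSTOLIC_DEPTH = 4  # XMX configured as a four-deep systolic array


def int8_packing_gain(lane_bits: int = FP32_BITS, op_bits: int = INT8_BITS) -> int:
    """How many 8-bit ops fit into one 32-bit data path."""
    return lane_bits // op_bits


def xmx_density_vs_fp32_vector(depth: int = SYSTOLIC_DEPTH) -> int:
    """Relative Int8 compute density of XMX vs. baseline FP32 vector ops."""
    return int8_packing_gain() * depth


print(int8_packing_gain())           # 4
print(xmx_density_vs_fp32_vector())  # 16
```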

Intel will take a deep dive into the second-generation Xe core with XMX and the new NPU at ITT.

Intel claims 1.4× AI performance and 1.5× graphics performance, but it has yet to disclose CPU core performance, saying only that Lunar Lake will be faster than the Ryzen 7 8840U and the Snapdragon X Elite.

There’s much more to come between now and Pat Gelsinger’s keynote at Computex on June 4, and we will be in Taiwan next week at ITT to dig into the details with Intel’s team.