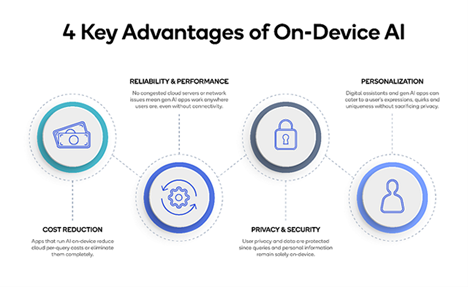

Qualcomm plans to offer Llama 2-based AI implementations on flagship smartphones and PCs starting in 2024. This will enable developers to create generative AI applications using Snapdragon platforms. On-device AI implementation provides benefits such as increased user privacy, improved application reliability, personalized experiences, and lower costs compared to cloud-based AI services.

Qualcomm Technologies and Meta say they have collaborated to optimize Meta’s Llama 2 large language models to run locally on devices, eliminating the need to rely on cloud services. This enables generative AI models like Llama 2 to run on devices such as smartphones, PCs, VR/AR headsets, and vehicles. The approach offers several advantages, including cost savings on cloud services, enhanced privacy, improved reliability, and personalized experiences for users.

To facilitate the creation of AI applications, Qualcomm plans to provide on-device Llama 2-based AI implementations. These will allow developers to build use cases including intelligent virtual assistants, productivity tools, content creation applications, entertainment, and more. These on-device AI experiences, powered by Snapdragon, can, says Qualcomm, function even in areas with no Internet connectivity or in airplane mode.

Meta and Qualcomm have a history of collaboration, most notably in VR HMDs. Their current joint efforts, which include research and product engineering, support the Llama ecosystem. Qualcomm says its presence in on-device AI uniquely positions it to support that ecosystem: its AI hardware and software solutions power billions of smartphones, vehicles, XR headsets and glasses, PCs, IoT devices, and more at the edge, which it says enables generative AI to scale.

Llama 2-based AI implementations on Snapdragon-powered devices are set to become available starting in 2024. Developers can start optimizing applications for on-device AI using the Qualcomm AI Stack, a dedicated set of tools that enhance AI processing efficiency on Snapdragon, making on-device AI feasible even in small, thin, and light devices. Those interested can subscribe to Qualcomm’s monthly developer newsletter for updates.