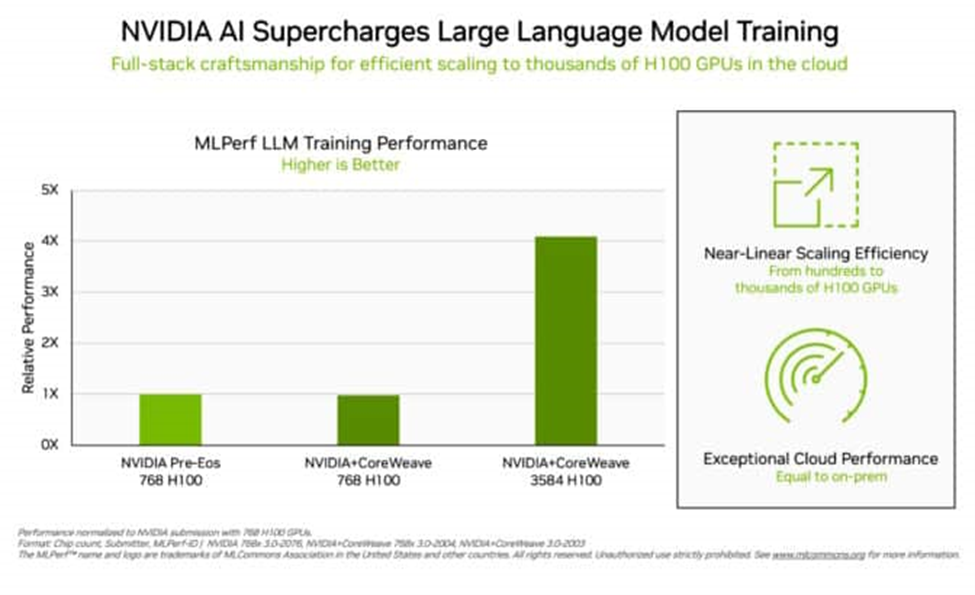

Nvidia’s H100 Tensor Core GPUs have gained recognition for their AI performance, particularly in large language models (LLMs) that power generative AI. The GPUs achieved impressive results in all eight MLPerf training benchmarks, including the new test for generative AI. A cluster of 3,584 H100 GPUs, developed by Inflection AI and operated by CoreWeave, completed a GPT-3-based training benchmark in under 11 minutes. Inflection AI used the H100 GPUs to create an advanced LLM for its personal AI assistant, Pi. By using Nvidia Quantum-2 InfiniBand networking, CoreWeave achieved similar performance to local data center setups.

According to Nvidia, users and industry-standard benchmarks agree about the AI performance of the company’s H100 Tensor Core GPUs, particularly in relation to the large language models (LLMs) that drive generative AI.

The H100 GPUs have achieved impressive results in all eight tests of the latest MLPerf training benchmarks, including the new MLPerf test designed for generative AI. Nvidia says the performance is demonstrated both at the individual accelerator level and at scale within massive server environments.

A cluster comprising 3,584 H100 GPUs was assembled by start-up Inflection AI and operated by CoreWeave, a specialized cloud service provider for GPU-accelerated workloads. In the tests, they ran an extensive GPT-3-based training benchmark in under 11 minutes.

CoreWeave’s co-founder and CTO Brian Venturo said their customers are leveraging CoreWeave’s fleet of H100 GPUs to construct generative AI and LLMs at scale.

Inflection AI said it has utilized the H100 GPUs to develop an advanced LLM that serves as the foundation for their first personal AI assistant, named Pi (personal intelligence). The company plans to function as an AI studio, creating personal AI systems that users can engage with using simple and intuitive methods.

CoreWeave claims they delivered similar performance from the cloud to what Nvidia achieved from an AI supercomputer running in a local data center. That, said Nvidia, is a testament to the low-latency networking of the Nvidia Quantum-2 InfiniBand networking that CoreWeave uses.

Mustafa Suleyman, CEO of Inflection AI, said anyone can experience the capabilities of a personal AI assistant based on his company’s large language model, which was trained using CoreWeave’s network of H100 GPUs.

Inflection AI was founded in early 2022 by Mustafa and Karén Simonyan from DeepMind, along with Reid Hoffman. The company said it is collaborating with CoreWeave to establish one of the world’s largest computing clusters utilizing Nvidia GPUs.

Furthermore, Nvidia noted that data centers accelerated with Nvidia GPUs use fewer server nodes, so they use less rack space and energy. In addition, accelerated networking boosts efficiency and performance, and ongoing software optimizations bring X-factor gains on the same hardware.

For a deeper dive into the optimizations fueling Nvidia’s MLPerf performance and efficiency, click here.