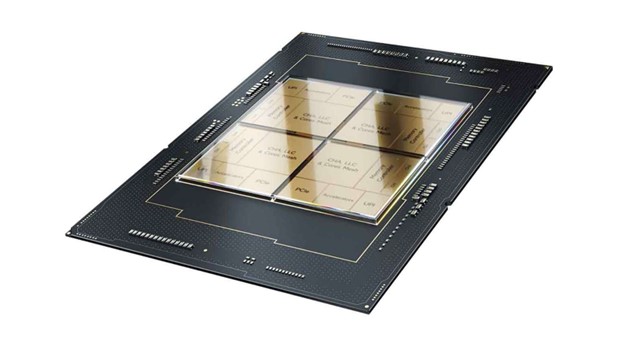

MLCommons has published the results of MLPerf Training 3.0, its industry benchmark for AI training performance. Both the Habana Gaudi2 deep learning accelerator and the 4th Gen Intel Xeon Scalable processor delivered strong training results.

The prevailing industry perception is that Nvidia GPUs are the only option for running generative AI and large language models (LLMs). Intel, however, says the latest data shows that its AI products are competitive alternatives that free customers from closed ecosystems while improving efficiency and scalability.

The company says the MLPerf Training 3.0 results demonstrate the performance of its products across a range of deep learning models. On the large language model GPT-3, the Gaudi2-based training software and systems showed maturity and scalability. Gaudi2 is one of only two semiconductor solutions that submitted results to the GPT-3 LLM training benchmark, and Intel says it offers customers substantial savings on server and system costs.

Intel also says that Gaudi2's MLPerf-validated performance on GPT-3, computer vision, and natural language models, together with upcoming software improvements, makes it an attractive price-performance alternative to Nvidia's H100.

On the CPU side, Intel says its 4th Gen Xeon processors with Intel AI engines delivered strong deep learning training performance. According to the company, this demonstrates that customers can use Xeon-based servers to build a single universal AI system for data preprocessing, model training, and deployment, achieving optimal AI performance, efficiency, accuracy, and scalability.

MLPerf, generally regarded as the most reputable AI performance benchmark, enables fair and repeatable comparisons across solutions. Intel has also passed the milestone of 100 MLPerf submissions and remains the only vendor to submit public CPU results using industry-standard deep learning ecosystem software.

According to Intel, the results also highlight the scaling efficiency achievable with Intel Ethernet 800 Series network adapters, which use the open-source Intel Ethernet Fabric Suite software based on Intel oneAPI.

Full test result data is available on the MLCommons website.