The highly anticipated Turing GPU-based Nvidia RTX 2080-series AIBs were announced in late August at Gamescom 2018, and the top-of-the-line cards were promised for delivery in late September. They came out the week of the 17th, and everyone who could get their hands on one tested them and compared them to the previous generation, the Pascal GPU-based 1080-series.

| Nvidia’s new RTX 2080 Ti AIB, with an additional USB-C connector on the backplate. (Source: JPR) |

We ran 48 tests on two platforms. Using a combination of synthetic and game benchmark tests, we found the average RTX score was 27% higher than a GTX’s, and the average fps was 31% higher than the previous generation’s. That seems pretty reasonable to us, given the differences in specifications between generations.

However, the Turing GPU brings more than just a typical generational improvement, and it comes with a higher price.

| Comparison of prices of Pascal AIBs to Turing AIBs. (Source: EVGA) |

The Turing-based AIBs introduce advanced AI capabilities, and everyone’s favorite, ray tracing. We’ve written extensively about these developments in our article Nvidia’s Turing demonstrates extensibility.

With these new features and capabilities comes the challenge of how to measure them (Underwriters Laboratories, UL, is already working on a ray-tracing benchmark). Once that’s figured out, there is the problem of what to compare them with. AMD will be introducing a ray-tracing AIB, and when they do, that will be one comparative point. However, it’s going to take another generation to get enough data points to draw any real conclusions. That’s the good and bad news. It’s good news because we are entering a new era in gaming and PC-based computer graphics. It’s bad news because we’re being asked to pay for technology that is currently dark silicon. It’s an old story about how the hardware vendors lead the software suppliers.

The new features bring the transistor count up to 18.6 billion. As a result, the RTX AIBs use more power than the previous generation.

| Comparison of power consumption of RTX to GTX AIBs |

We compared the previous generation to the new AIBs and ran eight tests, two synthetic benchmark tests and four in-game benchmarks, on two platforms: a 16-core 3.4 GHz AMD Threadripper 1950X and a 10-core 3.30 GHz Intel Core i9-7900X.

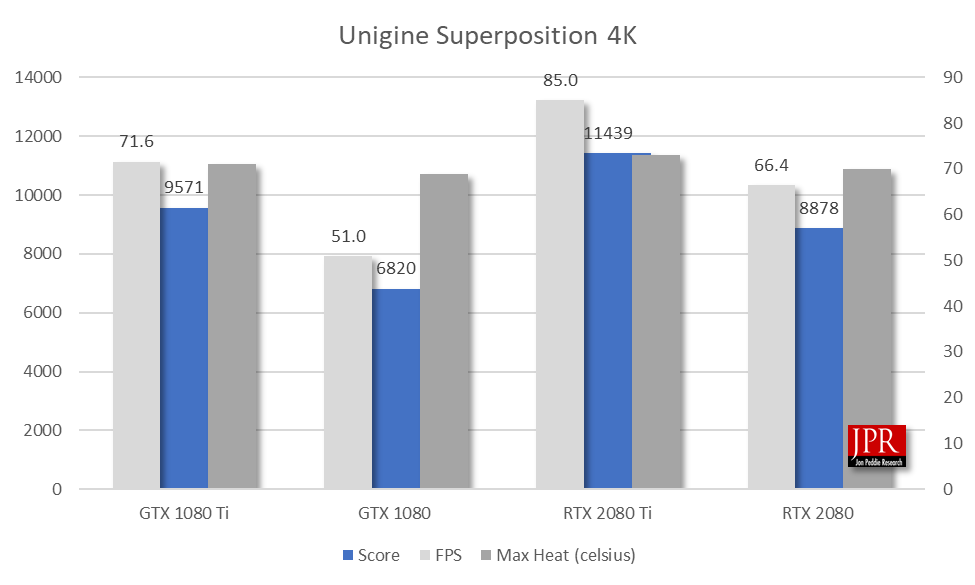

The synthetic tests were UL’s TimeSpy Extreme and Unigine’s Superposition 4K. The games were Final Fantasy XV (4K highest), Shadow of the Tomb Raider (4K highest SMAAT2x), Hitman 47 (4K Ultra), and Deus Ex ME (4K Ultra).

We took the average of all the fps scores and the average of all the test scores to arrive at the performance score for each AIB. Then, applying the price and rated power consumption, we calculated the Pmark for each board.
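The Pmark formula itself is not spelled out here, so the following is only a minimal sketch under an assumed form: performance divided by the product of price and rated power, multiplied by a scaling constant for readability. The function names, the way the fps and score averages are combined, the scale factor, and every input number are hypothetical placeholders, not our measured results.

```python
from statistics import mean


def performance_score(fps_results, benchmark_scores):
    """Combine the two averages into one number.

    Assumption: a simple mean of the average fps and the average
    benchmark score. The article does not state how they are combined.
    """
    return (mean(fps_results) + mean(benchmark_scores)) / 2


def pmark(performance, price_usd, power_watts, scale=1_000_000):
    """Assumed form: performance per dollar per watt, scaled for readability."""
    if price_usd <= 0 or power_watts <= 0:
        raise ValueError("price and power must be positive")
    return scale * performance / (price_usd * power_watts)


# Placeholder inputs only; these are NOT the article's measurements.
boards = {
    "board_a": {"fps": [40.0, 50.0], "scores": [4000.0, 6000.0],
                "price": 500.0, "power": 200.0},
    "board_b": {"fps": [60.0, 70.0], "scores": [6000.0, 8000.0],
                "price": 1000.0, "power": 250.0},
}

results = {
    name: pmark(performance_score(b["fps"], b["scores"]), b["price"], b["power"])
    for name, b in boards.items()
}

# Rank boards from best to worst Pmark.
ranking = sorted(results, key=results.get, reverse=True)
print(ranking)
```

With these made-up inputs, the slower but cheaper and less power-hungry board ranks first, which is how a metric of this shape can favor an older AIB even when a newer one wins on raw performance.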

| Pmark results for eight tests on an Intel i9 |

| Pmark results for eight tests on an AMD Threadripper |

The average fps on the Threadripper was 37.1, and on the i9 it was 42.4 fps. We ran the i9 tests with HDR turned on using a BenQ EL2870U 28-inch 4K HDR gaming monitor.

TimeSpy

We used Underwriters Laboratories’ (formerly Futuremark) TimeSpy because it is an industry-standard test and one that can be easily used for comparison.

Futuremark has always told a story with their benchmarks, and in TimeSpy there are portions of the previous benchmarks showcased as shrunken museum exhibits. These exhibits come to life as the so-called Time Spy wanders the hall, giving a throwback to past demos.

In the story, one sees a crystalline ivy entangled with the entire museum. In parts of the exhibit, there are deceased figures in orange hazmat suits showing signs of a previous struggle. Meanwhile, the Time Spy examines the museum with a handheld time portal. Through the portal, she can view a bright and clean museum and bustling air traffic outside. The benchmark does an excellent job of providing both eye candy for the newcomers and tributes for the enthusiasts watching the events unfold. It was released in mid-2016.

| UL TimeSpy screenshot at 4K |

TimeSpy is a DirectX 12 benchmark test for gaming PCs running Windows 10. Its pure DirectX 12 engine was built from the ground up to support new API features like asynchronous compute, explicit multi-adapter, and multi-threading.

| UL TimeSpy Extreme test results for RTX vs GTX AIBs on Threadripper |

TimeSpy is an ideal benchmark for testing the DirectX 12 performance of modern graphics AIBs.

Superposition

Unigine’s Superposition benchmark is an extreme performance and stability test for AIBs, power supplies, and cooling systems. The benchmark, like others, tells a story. A lone professor performs dangerous experiments in an abandoned classroom, day in and day out. Obsessed with inventions and discoveries beyond the wildest dreams, he strives to prove his ideas.

One early morning you come to this place, and he is not there. The eerie thing is the loud bang heard from the laboratory a few moments ago. What was that? You have only one chance to cast some light on the incident by delving into quantum theory: a thorough visual inspection of the professor's records and instruments helps to lift the veil on the mystery. The current version is 1.0 (2017).

| Unigine Superposition screenshot at 4K on Threadripper |

It is often used for overclocking tests, and it represents real-life workloads while offering interactive experiences in a beautiful, detailed environment.

| Unigine Superposition test results for RTX vs. GTX AIBs on Threadripper |

The benchmark uses the Unigine 2 game engine and offers GPU temperature and clock monitoring, as well as SSRTGI (Screen-Space Ray-Traced Global Illumination) dynamic lighting technology. The developer says there are over 900 interactive objects in the test.

As mentioned, we also used four games for testing: Deus Ex ME, Hitman 47, and two new games, Final Fantasy XV (FF15) and Shadow of the Tomb Raider (SOTTR).

Final Fantasy XV (FF15)

Final Fantasy XV is the latest action role-playing video game developed and published by Square Enix as part of the long-running Final Fantasy series. The game features an open-world environment and an action-based battle system, incorporating quick-switching weapons, elemental magic, and other features such as vehicle travel and camping.

| Noctis Lucis Caelum, the burdened teenage hero of Final Fantasy XV |

Final Fantasy XV takes place on the fictional world of Eos; aside from the kingdom of Lucis, all the world is dominated by the empire of Niflheim, which seeks control of the magical Crystal protected by Lucis's royal family. On the eve of peace negotiations, Niflheim attacks the capital of Lucis and steals the Crystal. Noctis Lucis Caelum, the heir to the Lucian throne, goes on a quest to rescue the Crystal and defeat Niflheim. He later learns his full role as the True King destined to use the Crystal's powers to save Eos from plunging into eternal darkness. The game was released for Windows in 2018.

| Square Enix FF15 test results for RTX vs. GTX AIBs on Threadripper |

Luminous Studio is a multi-platform game engine developed and used internally by Square Enix. The concept for the new engine was born in 2011 while the studio was in the final stages of working on Final Fantasy XIII-2. The game uses lighting technology from Luminous along with a purpose-built proprietary gameplay engine, Luminous 1.4.

With Luminous Studio, real-time scenes in XV have five million polygons per frame, with character models made up of about 100,000 polygons each. Character models for XV were constructed with 600 bones, estimated as roughly 10–12 times more than on seventh-generation hardware. About 150 bones are used for the face, 300 for the hair and clothes, and 150 for the body. The inner hair for each character uses about 20,000 polygons, five times more than on seventh-generation hardware. The data capacity for textures is also much larger than before. Each character uses 30 MB of texture data and ten levels of detail, whereas seventh-generation games used 50 to 100 MB of texture data for a scene.

Shadow of the Tomb Raider

Shadow of the Tomb Raider is an action-adventure video game developed by Eidos-Montréal in conjunction with Crystal Dynamics and published by Square Enix. It continues the narrative from the 2013 game Tomb Raider and its sequel Rise of the Tomb Raider. SOTTR is the 12th mainline entry in the Tomb Raider series and is rumored to be the last from Square Enix. The Windows version was developed by Nixxes Software, which had worked on several earlier Tomb Raider games for the platform.

| SOTTR is using ray-tracing capabilities in the RTX AIBs |

Nvidia worked with Square Enix, Eidos-Montréal, Crystal Dynamics, and Nixxes to enhance Shadow of the Tomb Raider. The development included the addition of Nvidia’s RTX ray-traced shadows in SOTTR. The shadows offer what Nvidia describes as hyper-realistic representations of the shadows we see in real life, with support for substantial, complex interactions, self-shadowing, translucent shadowing, and a litany of other techniques, at a level of detail far beyond what has previously been seen. The game was released 14 September 2018.

| SOTTR test results for RTX vs. GTX AIBs on Threadripper |

Shadow of the Tomb Raider is built using the Foundation engine as a basis. The Foundation engine is the same engine used in Rise of the Tomb Raider, but it was dramatically enhanced by the Eidos-Montréal R&D department and pushed to its limit during game development.

HDR effects and impact

HDR is slowly making its way into games, with Hitman 47 perhaps being one of the first in 2016, before there was really any support for it.

The Windows 10 Creators Update, released in April 2017, introduced HDR capability, but it wasn’t fully realized, and the company received a lot of criticism over it. The April 2018 release fixed a lot of the issues. For external displays, the system and display both need to support HDMI 2.0 or DisplayPort 1.4, as well as HDR10. The AIB must support PlayReady 3.0 hardware DRM for protected HDR content.

The AIB recognizes whether HDR can be supported by reading the monitor's EDID/DID. This capability is passed up to the OS (Win10 RS2/2+), and a radio button in Microsoft's display properties UI is exposed to let the end user turn HDR on or off. This happens initially at runtime. If the monitor is later switched after boot-up, that fits into the regular hot-plug scenario, where a hot-plug detect causes a swap of the EDID/DID.
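As a rough illustration of what reading HDR capability from the EDID can look like, the sketch below scans a single CTA-861 extension block for the HDR Static Metadata Data Block and checks whether the SMPTE ST 2084 (PQ) transfer function used by HDR10 is advertised. It is our own simplified example, not the driver's actual logic: the function name and the hand-built sample block are hypothetical, the byte layout follows our reading of CTA-861, and checksum validation and DisplayID parsing are left out.

```python
CTA_EXTENSION_TAG = 0x02    # first byte of a CTA-861 extension block
EXTENDED_TAG_CODE = 0x07    # data block tag meaning "an extended tag follows"
HDR_STATIC_METADATA = 0x06  # extended tag of the HDR Static Metadata Data Block
EOTF_ST2084_BIT = 0x04      # bit 2 of the EOTF byte: SMPTE ST 2084 (PQ)


def supports_hdr10(cta_block: bytes) -> bool:
    """Return True if the block advertises the ST 2084 EOTF (assumed layout)."""
    if len(cta_block) < 4 or cta_block[0] != CTA_EXTENSION_TAG:
        return False
    # Byte 2 gives the offset where detailed timings start; the data
    # block collection occupies bytes 4 .. offset-1.
    end = min(cta_block[2], len(cta_block))
    pos = 4
    while pos < end:
        header = cta_block[pos]
        tag, length = header >> 5, header & 0x1F
        payload = cta_block[pos + 1:pos + 1 + length]
        if (tag == EXTENDED_TAG_CODE and len(payload) >= 2
                and payload[0] == HDR_STATIC_METADATA):
            return bool(payload[1] & EOTF_ST2084_BIT)
        pos += 1 + length  # skip to the next data block
    return False


# Hand-built sample block (hypothetical, not dumped from a real monitor):
# header 0xE3 = extended tag, 3 payload bytes; EOTF byte 0x05 = SDR + PQ.
sample = bytes([0x02, 0x03, 8, 0x00, 0xE3, 0x06, 0x05, 0x01]).ljust(128, b"\x00")
print(supports_hdr10(sample))
```

In the hot-plug case described above, a check like this would simply run again on the newly read EDID.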

The Windows 10 April 2018 Update also added a new option in the form of a “Change brightness for SDR content” slider that doesn’t affect HDR content.

HDR support is becoming increasingly common in monitors. HDR display does have some performance cost because the final render output and the display fetch of that output have to go to FP16 format (i.e., double the DRAM bandwidth cost of legacy 8-bit). However, the performance cost is relatively low, especially on Turing, where the display has a native FP16 data path with dedicated tone-mapping hardware, so it makes sense that people will likely use HDR to get the benefit of the added dynamic range and color fidelity.
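A back-of-the-envelope calculation shows where the doubling comes from. The sketch below assumes a four-channel (RGBA) 4K surface, a 60 Hz refresh rate, and one write plus one display fetch per frame; those parameters and the function names are our assumptions for illustration, not measured values.

```python
def frame_bytes(width, height, bits_per_channel, channels=4):
    """Size of one final render surface in bytes."""
    return width * height * channels * bits_per_channel // 8


def display_traffic_gb_s(width, height, bits_per_channel, refresh_hz, passes=2):
    """Approximate DRAM traffic for the final surface, in GB/s.

    passes=2 assumes one write of the render output and one display
    fetch of it per refresh.
    """
    return frame_bytes(width, height, bits_per_channel) * refresh_hz * passes / 1e9


WIDTH, HEIGHT, REFRESH_HZ = 3840, 2160, 60  # 4K at an assumed 60 Hz

sdr = display_traffic_gb_s(WIDTH, HEIGHT, 8, REFRESH_HZ)    # legacy 8-bit
hdr = display_traffic_gb_s(WIDTH, HEIGHT, 16, REFRESH_HZ)   # FP16

print(f"8-bit: {sdr:.2f} GB/s, FP16: {hdr:.2f} GB/s, ratio: {hdr / sdr:.1f}x")
```

Under these assumptions, the FP16 surface costs roughly 8 GB/s instead of roughly 4 GB/s, which is small next to the hundreds of GB/s of memory bandwidth on high-end AIBs and is consistent with the low performance cost described above.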

We tested the games and benchmarks with HDR on and off and couldn’t see much, if any, difference in performance, so it’s what could be considered a free feature. However, there was a pretty big difference between the GTX and RTX with HDR on.

What do we think?

As mentioned above, we ran 48 tests on the AIBs on two platforms, and the results were quite consistent, as the various charts show. We did not do any overclocking.

The RTX beat the previous generation GTX AIBs in performance. The GTX 1080 got the highest Pmark score due to its lower power consumption and price.

The RTX AIBs are very expensive, costing more than a new i5 PC. The features they bring will be really exciting once the games catch up. For example, the new RTX AIBs have a new AI-based anti-aliasing technique called DLSS (deep learning super sampling). It uses a general model and also models that are trained for a specific game. However, it’s not available…yet. DLSS is not currently supported on FO4, FF15, ROTTR, and other games. Nvidia is working on adding DLSS to more games, and the latest list has 25 DLSS-enabled games on the way. So, should you wait until the games catch up to get a new RTX AIB? Probably not. Why not? Because you will get a performance improvement. And the chicken-and-egg issue with game developers still exists: they won’t develop unless there is an installed base to sell to. So, if you want to see great new games with great new features, you have to seed the field to attract the game crows.

When people ask us what graphics board or PC they should buy, we always say: figure out the most money you can afford, add $100, and then buy whatever you can with that budget. PCs and PC graphics are a real value-for-money deal. So, if you can afford an RTX, we recommend you get one. The upside of the added new features and the longevity of the card will make it worth it.