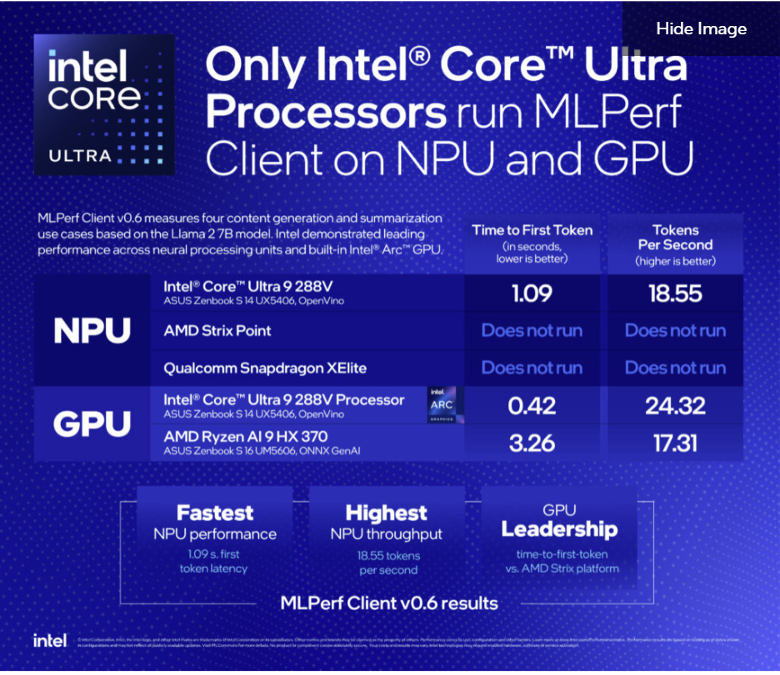

Intel is the first company to achieve full NPU compliance in the MLPerf Client v0.6 benchmark, which now includes standardized tests for large language model (LLM) performance on client NPUs. Intel’s Core Ultra Series 2 processors showed strong results, generating the first token in 1.09 seconds and reaching 18.55 tokens per second. Developed by MLCommons with industry partners, the benchmark highlights Intel’s hardware and software optimization. Intel’s new desktop and mobile processors support broader deployment of client-side AI applications.

Intel has announced full neural processing unit (NPU) support in the MLPerf Client v0.6 benchmark, making it the first vendor to achieve compliance across all test categories. This benchmark release includes the first standardized evaluation of large language model (LLM) performance on client NPUs. Intel’s results demonstrate that its Intel Core Ultra Series 2 processors can generate language model outputs using both the graphics processing unit (GPU) and the NPU at rates exceeding typical human reading speed.

MLPerf Client v0.6 evaluates performance across four content generation and summarization tasks using the Llama-2-7b model. Intel’s submissions show its NPU generating the first token in 1.09 seconds and sustaining a throughput of 18.55 tokens per second. These results represent the highest NPU throughput and lowest latency measured in the benchmark to date. The integrated Intel Arc GPU also performed strongly, posting a faster time-to-first-token than other vendors’ submissions.

The MLPerf Client v0.6 benchmark was developed by MLCommons in collaboration with major industry stakeholders including Intel, AMD, Microsoft, Nvidia, and Qualcomm. The latest release expands the scope of MLPerf’s evaluation methodology from GPU-based inference to include NPU-specific workloads. Intel’s compliance was achieved through joint optimization efforts between its NPU hardware teams and the OpenVINO software stack.

On October 10, 2024, Intel launched its Intel Core Ultra 200S desktop processors (code-named Arrow Lake-S), bringing AI-focused designs to desktop systems. These processors complement the mobile Intel Core Ultra 200V processors (code-named Lunar Lake), expanding the deployment of client-side AI compute. The new hardware platforms are supported by a software ecosystem that includes optimized AI models and partnerships with independent software vendors to enable application-level AI features.
