In a keynote at Siggraph 2023, Nvidia CEO Jensen Huang revealed some of the company’s latest work aimed at helping enterprises engage more easily with generative AI, which he expects will eventually become a standard part of how computing is done. Huang announced two developments in this regard. The first is a partnership between Nvidia and Hugging Face that will connect a vast community of developers to Nvidia’s generative AI supercomputing resources. The second is Nvidia AI Workbench, a tool kit designed to speed enterprise adoption of custom generative AI. Workbench also lets developers run acceleration libraries on PCs and workstations, as well as in enterprise data centers, public clouds, and Nvidia DGX Cloud.

What do we think? Obviously, Nvidia is not the only company working to make it easier and faster for developers to create generative AI. But it seems to be working at light speed to make generative AI easier for everyone to use. With new or upgraded hardware and software every few months, as well as important partnerships, Nvidia is making it possible for just about every company to bring the technology into its business. At least that is the goal. We may soon reach a tipping point where companies that do not take advantage of this push toward generative AI find themselves at a large, eventually insurmountable, competitive disadvantage at best, or left out in the cold and unable to compete at worst.

Making a smooth path toward generative AI adoption for all

If you thought that generative AI was just a term and not really a thing yet, think again. At Siggraph 2023, the technology, which only a handful of attendees were whispering about at the conference a year earlier, was the big buzzword. Many companies at the conference, big and small and from all corners of the industry, either demonstrated generative AI capabilities or discussed their plans to implement it in their products and workflows. And Nvidia now has even more developer tools to assist them in their efforts.

During his keynote address at the conference, Huang commented that generative AI models have been making tremendous breakthroughs. “We all want to do this. There are millions of developers, designers, and artists around the world and there are millions of companies that would like to take advantage [of it], and everyone at Nvidia is working hard to use large language models and generative AI in our work.” In fact, he credits generative AI with helping Nvidia design its powerful Hopper GPU. To begin the journey to generative AI, he said, a company first needs to find a model that works for it and fine-tune that model on its own curated data. Second, it needs to augment its engineers and developers with the capabilities of these generative models.

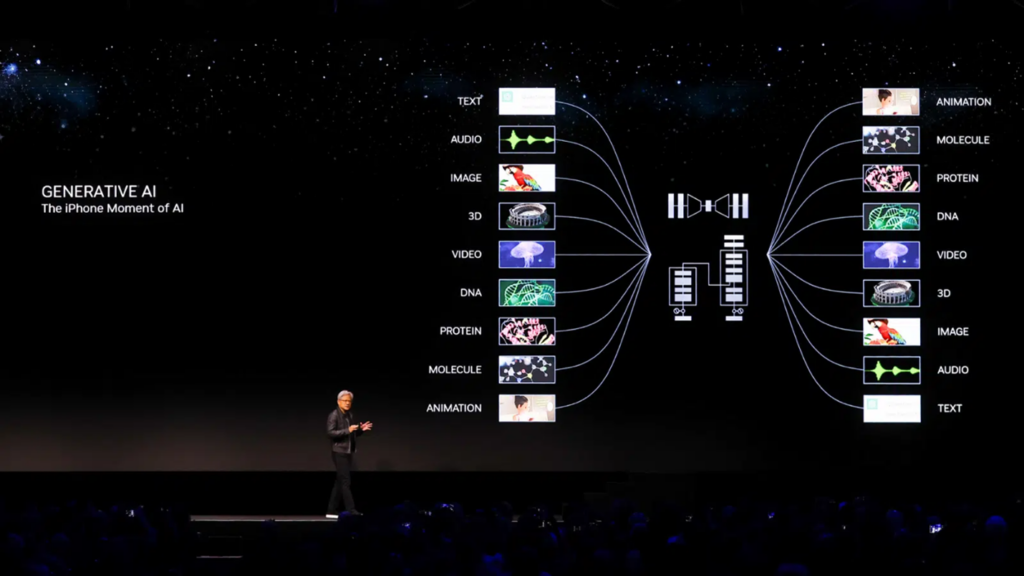

Nvidia wants people to be able to do generative AI not just in the cloud but everywhere. “We want to run this in the cloud and continue to run it in the cloud, but as you know, computing is done literally everywhere. AI is not some widget that has a particular capability; AI is the way software is going to be done in the future and the way computing will be done in the future,” said Huang. “It will be literally in every application and will be run in every single data center and will run in every single computer—at the edge and in the cloud.”

There’s a reason most of the world’s AI is done in the cloud today, Huang said: the software stack is really complicated. Just getting these stacks to run on a particular device and system is incredibly hard. “In order for us to democratize this capability, we have to make it available everywhere,” he said. That requires enabling those complicated, unified, optimized stacks to run on almost any device, making it possible for users everywhere to engage with AI, he added.

At the conference, Nvidia made two announcements aimed at making this vision a reality.

Hugging Face customers get easy access to DGX Cloud

The first involves the integration of Nvidia DGX Cloud into the Hugging Face platform. Hugging Face, the largest AI community in the world, is used by 15,000 organizations, and its community has shared more than 250,000 models and 50,000 datasets. The partnership will give those developers access to Nvidia DGX Cloud AI supercomputing for building large language models (LLMs) and other advanced AI applications, as well as for training and fine-tuning models using open-source resources. Not only will this access make those processes faster for developers, but it will also make it easier for them to customize industry-specific applications.

Each instance of DGX Cloud has eight Nvidia H100 or A100 80GB Tensor Core GPUs, for a total of 640GB of GPU memory per node. Nvidia is connecting the Hugging Face community to its AI computing platform through leading cloud service providers such as Microsoft Azure, Oracle Cloud Infrastructure, and Google Cloud.

“Nvidia DGX Cloud is just a click away for the Hugging Face community,” Huang said, as DGX Cloud will connect these users to the infrastructure and software they need for LLM development. In the coming months, Hugging Face will also offer a new service called Training Cluster as a Service, powered by DGX Cloud, that simplifies the creation of new and custom generative AI models for enterprises. Using Training Cluster as a Service, companies will be able to select from the available models for training or even use their own data to create brand-new models.

AI Workbench tools bring AI capability to the edge

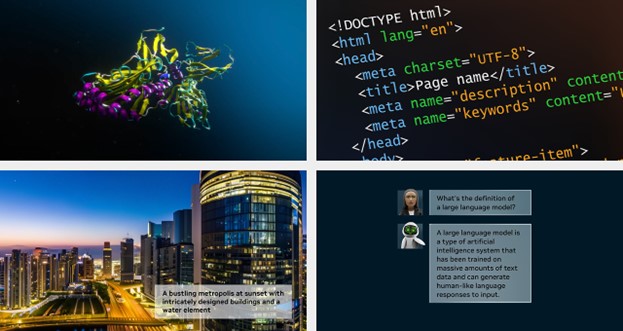

The second announcement was the introduction of Nvidia AI Workbench, a collection of tools that simplifies AI setup as well as LLM tuning and deployment. It enables developers to quickly create, test, and customize pretrained generative AI models on a PC or workstation and then scale them to any data center, public cloud, or Nvidia DGX Cloud.

AI Workbench is accessed through a simple interface on the local system, so developers can customize models from sources such as Hugging Face, GitHub, and Nvidia NGC (a portal of enterprise services, software, and support) with their own data. Nvidia noted that the models can then be shared easily across multiple platforms.

Although an abundance of pretrained models is currently available, Nvidia said, customizing them with open-source tools can be cumbersome: users must sift through online repositories for the appropriate frameworks, tools, and containers, and must also have the skills to customize a model for their specific use. With AI Workbench, by contrast, developers can customize and run generative AI far more easily, with just a few clicks. Workbench lets them gather the models, frameworks, developer kits, and libraries they need from open repositories, as well as from the Nvidia AI platform, into a unified developer tool kit.

Developers can use AI Workbench with the latest generation of high-end, multi-GPU, AI-ready workstations from companies such as Dell, Hewlett Packard Enterprise, HP Inc., Lambda, Lenovo, and Supermicro. Users with an RTX PC or workstation running Linux or Windows can also initiate, test, and fine-tune enterprise-level generative AI projects on their local systems.

Nvidia also announced version 4.0 of its AI Enterprise software platform, which adds tools to facilitate adoption of generative AI in the cloud, the data center, and at the edge.