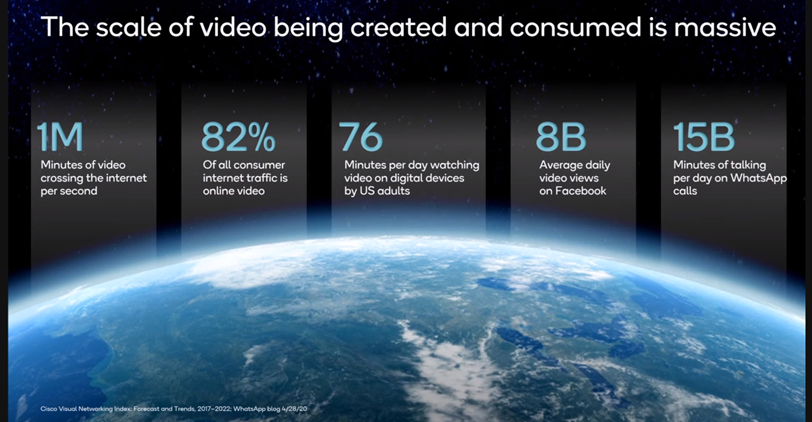

Qualcomm recently ran an AI demo that encoded and decoded 1080p video on a mobile device. The demo used neural codecs, which offer flexibility: they can be customized for specific video needs, optimized for perceptual quality using advances in generative AI, run on general-purpose AI hardware, and potentially extended to new modalities. However, running neural codecs on devices with limited computational resources poses significant challenges.

To cope with the limited compute of a mobile device, Qualcomm’s researchers developed an efficient neural interframe video compression architecture, which compresses each frame relative to previously decoded frames and promises to enable on-device coding of 1080p video. The demo shows the codec faithfully preserving the intricate visual detail and complex motion found in high-quality video.

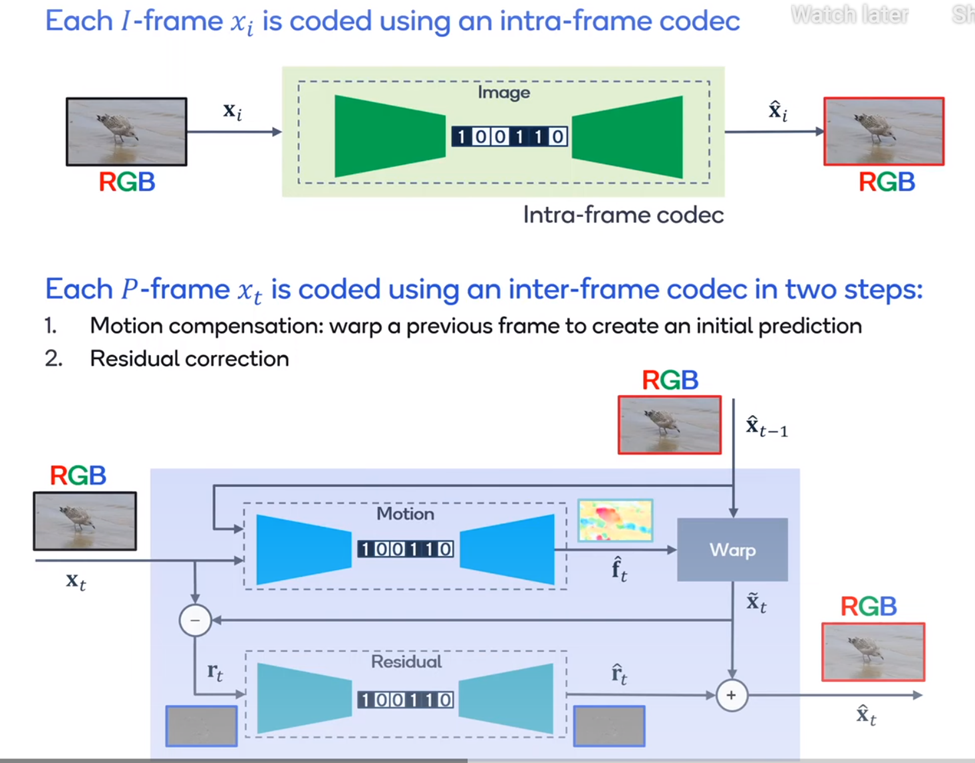

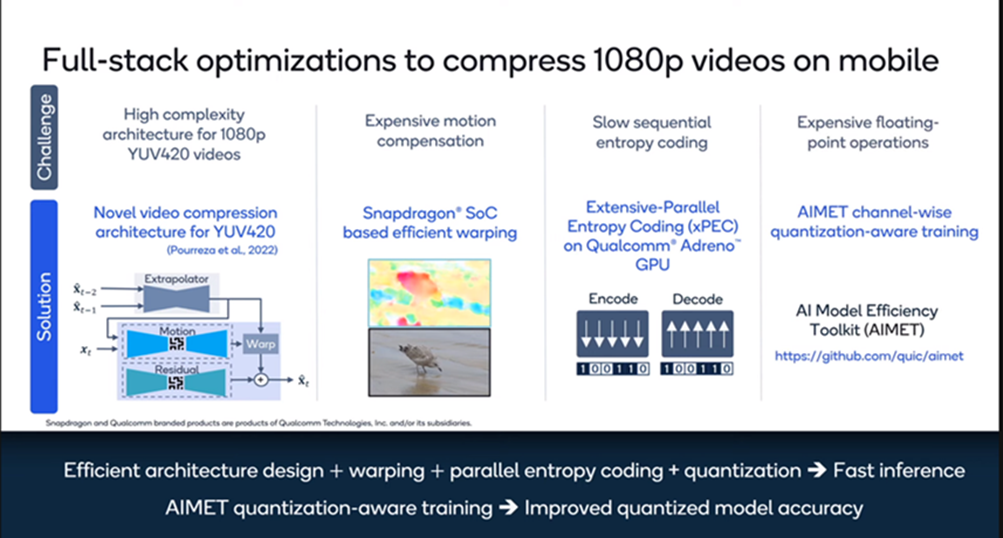

Existing neural video codecs typically operate on RGB frames rather than the YUV color space used in practical video pipelines. They rely on computationally expensive motion compensation, and they either report a theoretical bit rate estimated from the entropy model instead of producing a real bitstream, or use slow entropy coding. They also depend on compute-heavy floating-point arithmetic. All of this makes them hard to run on a battery-constrained device such as a smartphone.
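
To make the YUV420 point concrete: in 4:2:0 sampling the luma (Y) plane stays at full resolution while each chroma plane (U, V) is halved in both dimensions, so a frame carries half as many samples as an RGB frame of the same size. The minimal Python sketch below computes the plane shapes for a 1080p frame; the function names are hypothetical and it assumes nothing about Qualcomm’s actual memory layout.

```python
def yuv420_plane_shapes(width: int, height: int) -> dict[str, tuple[int, int]]:
    """Return (height, width) of each plane in a 4:2:0 frame.

    Luma (Y) keeps full resolution; each chroma plane (U, V) is subsampled
    by 2 horizontally and vertically, rounding up for odd dimensions.
    """
    chroma_w = (width + 1) // 2
    chroma_h = (height + 1) // 2
    return {"Y": (height, width), "U": (chroma_h, chroma_w), "V": (chroma_h, chroma_w)}


def samples_per_frame(width: int, height: int) -> tuple[int, int]:
    """Compare sample counts of an RGB frame and a YUV420 frame."""
    rgb = 3 * width * height  # three full-resolution channels
    yuv = sum(h * w for h, w in yuv420_plane_shapes(width, height).values())
    return rgb, yuv


rgb, yuv = samples_per_frame(1920, 1080)
print(rgb, yuv, yuv / rgb)  # prints: 6220800 3110400 0.5
```

A codec that works natively in YUV420 therefore processes half the input samples of an RGB codec and skips a color conversion step that a camera or display pipeline would otherwise need.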

Qualcomm took a different approach.

Qualcomm describes its approach to video encoding as full-stack. The company designed a novel, efficient neural interframe video compression architecture for YUV420 video. It then implemented motion compensation on-device using Snapdragon processors, developed a parallel entropy coding algorithm that exploits multithreaded concurrency, and improved inference efficiency by quantizing the network weights with the quantization-aware training in its AI Model Efficiency Toolkit (AIMET).
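
The quantization-aware training step can be illustrated with the “fake quantization” operation that QAT frameworks insert into the forward pass. The sketch below is a generic illustration under stated assumptions (symmetric, per-tensor, 8-bit weights, plain Python lists); it is not the API of Qualcomm’s toolkit, and `fake_quantize` is a hypothetical name.

```python
def fake_quantize(weights: list[float], num_bits: int = 8) -> tuple[list[float], float]:
    """Symmetric per-tensor quantize-dequantize ("fake quantization").

    Returns the dequantized weights and the scale. In quantization-aware
    training the forward pass uses these rounded values, so the network
    learns to tolerate integer precision; the backward pass usually treats
    the rounding as the identity (straight-through estimator).
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit weights
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return list(weights), 1.0  # all-zero tensor: nothing to scale
    scale = max_abs / qmax
    dequantized = []
    for w in weights:
        q = round(w / scale)           # nearest integer level
        q = max(-qmax, min(qmax, q))   # clamp to the symmetric int range
        dequantized.append(q * scale)  # map back to float for the forward pass
    return dequantized, scale


w = [0.4, -1.0, 0.25, 0.013, 0.0]
wq, scale = fake_quantize(w)
# Every weight moves by at most half a quantization step.
print(max(abs(a - b) for a, b in zip(w, wq)) <= scale / 2)  # prints: True
```

At inference time only the integer levels `q` and the scale need to be stored, which is what lets integer hardware run the network instead of floating-point units.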

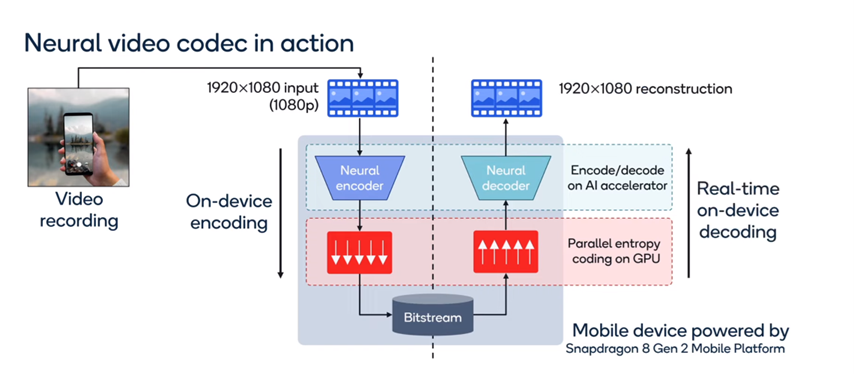

Here’s how the demo works. First, the user records a 1080p video on the smartphone. The codec encodes the video, using parallel entropy coding on the GPU to produce the bitstream. To play the recording back in real time, the bitstream is entropy-decoded, also on the GPU, and then passed through the decoder network. It’s all done on a Snapdragon 8 Gen 2-powered mobile device.
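
The key property behind parallel entropy coding is that the bitstream is split into chunks whose coder state is independent, so chunks can be encoded and decoded concurrently. The sketch below shows only that structure, using CPU threads rather than the GPU, and with `zlib` (DEFLATE, whose entropy stage is Huffman coding) standing in for the arithmetic or range coder that neural codecs typically pair with a learned probability model. The chunking scheme and function names are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def split_chunks(data: bytes, num_chunks: int) -> list[bytes]:
    """Split data into at most num_chunks contiguous, roughly equal chunks."""
    if not data:
        return [b""]
    size = -(-len(data) // num_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_encode(data: bytes, num_chunks: int = 4) -> list[bytes]:
    """Entropy-code each chunk independently, one task per chunk."""
    chunks = split_chunks(data, num_chunks)
    with ThreadPoolExecutor(max_workers=num_chunks) as pool:
        # map() preserves chunk order in the returned list of streams.
        return list(pool.map(zlib.compress, chunks))


def parallel_decode(streams: list[bytes]) -> bytes:
    """Decode the independent streams concurrently and reassemble them."""
    with ThreadPoolExecutor(max_workers=max(1, len(streams))) as pool:
        return b"".join(pool.map(zlib.decompress, streams))


payload = bytes(range(256)) * 100
streams = parallel_encode(payload, num_chunks=4)
print(len(streams), parallel_decode(streams) == payload)  # prints: 4 True
```

The trade-off is a small compression penalty, since each chunk restarts its coder state, in exchange for decode work that scales across however many threads or GPU lanes are available.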

The result, according to Qualcomm, is the first demonstration of a real-time YUV420 neural codec on a mobile device, running at 30 FPS or more at bit rates of 6 Mb/s to 7 Mb/s.
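
A quick calculation puts those figures in perspective. Assuming 8-bit samples, Mb/s meaning 10^6 bits per second, and the 30 FPS lower bound, the sketch below computes the per-frame bit budget and the compression ratio relative to raw YUV420 1080p video.

```python
def bits_per_frame(bitrate_bps: float, fps: float) -> float:
    """Average number of coded bits available for each frame."""
    return bitrate_bps / fps


def raw_yuv420_bitrate(width: int, height: int, fps: float, bit_depth: int = 8) -> float:
    """Uncompressed bit rate of 4:2:0 video (Y plus quarter-size U and V)."""
    samples = width * height * 3 // 2
    return samples * bit_depth * fps


raw = raw_yuv420_bitrate(1920, 1080, 30)  # 746,496,000 bits/s
for mbps in (6, 7):
    coded = mbps * 1_000_000
    print(f"{mbps} Mb/s: {bits_per_frame(coded, 30) / 8 / 1000:.1f} kB per frame, "
          f"{raw / coded:.0f}:1 vs raw 8-bit YUV420")
# prints:
# 6 Mb/s: 25.0 kB per frame, 124:1 vs raw 8-bit YUV420
# 7 Mb/s: 29.2 kB per frame, 107:1 vs raw 8-bit YUV420
```

In other words, the codec squeezes each roughly 3.1 MB raw frame into about 25 to 29 kB on average while keeping up with real-time playback.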

What do we think?

Qualcomm has been the leader in ultrahigh-resolution cameras in smartphones. Showing 1080p recording and playback in real time (30 FPS or more) is just the beginning. There are lots more pixels where they came from, and the Snapdragon has several nifty processors to play AI and compression tricks with. We can expect more in this area from the company. Apple is going to be challenged to make the M2 sing and dance like this.