Imagination Technologies and MulticoreWare have enabled GPU compute on the Texas Instruments TDA4VM processor, yielding significant performance gains for autonomous-driving and ADAS workloads. Running the stereo block matching (StereoBM) algorithm on the GPU instead of the CPU, they report a speedup of more than 100× on a high-resolution (3200×2000) image. This lets automotive customers improve performance per watt in sensor-processing workloads. The collaboration demonstrates the potential of GPUs for compute and AI algorithms in automotive applications.

What do we think? Imagination has a long history in automotive, going back over two decades, and a solid customer base among the traditional auto semiconductor suppliers such as Texas Instruments. With the long design times and slow cadence of change in the auto segment, being able to deliver new compute performance on devices that have been in the market for a long while—like the Jacinto 7-based TI TDA4VM, which debuted in 2019—is a good thing.

Compute using IMG BXS GPU on the TI TDA4VM processor delivers 100× performance gains, MulticoreWare says

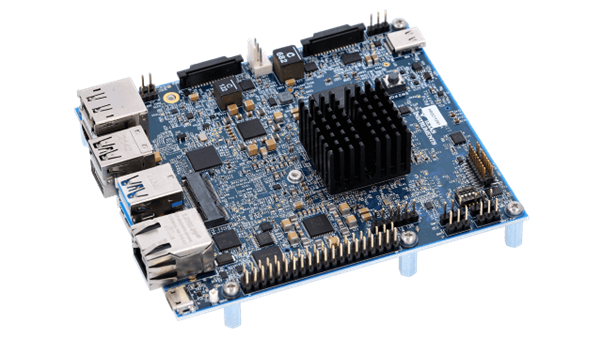

MulticoreWare Inc. and Imagination Technologies announced at CES 2024 that they have enabled GPU compute on the Texas Instruments TDA4VM processor, unleashing approximately 50 GFLOPS of extra compute and demonstrating a massive improvement in the performance of common workloads used for autonomous driving and advanced driver assistance systems (ADAS).

The partners claim a speedup of more than 100× when running a stereo block matching (StereoBM) algorithm on the GPU rather than on the CPU, on a high-resolution (3200×2000) image. This enables automotive customers to use IMG BXS GPUs with OpenCL to achieve higher performance per watt on TDA4 SoCs for sensor-processing workloads (camera, radar, and lidar), complementing the other compute accelerators already in the platform.

At CES, Imagination Chief Product Officer James Chapman told us: “It supports our overall thrust of GPUs being a very, very good place to do some of these algorithms for compute and AI. So, we ported, as an example, a stereo block matching application where you’ve got two cameras, and you want to identify where are the objects. It’s a common thing that happens in ADAS systems, and it’s a good example of something that you can get to run fast on a GPU.”
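For readers unfamiliar with stereo block matching, the sketch below shows the textbook idea Chapman describes: for each pixel in the left camera image, slide a small window along the same row of the right image and keep the horizontal shift (disparity) with the lowest sum of absolute differences (SAD). Nearer objects produce larger disparities. This is a minimal illustration assuming rectified grayscale images stored as lists of rows; it is not MulticoreWare's optimized OpenCL code or OpenCV's StereoBM (which adds pre- and post-filtering), and the function names and parameters are hypothetical.

```python
import random


def sad(left, right, y, x, d, half):
    """Sum of absolute differences between the window centred at (y, x) in the
    left image and the same window shifted d pixels left in the right image."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return total


def stereo_block_match(left, right, num_disparities, block_size):
    """Winner-take-all block matching on rectified grayscale images.
    Pixels too close to the border to be evaluated are left at 0."""
    if block_size % 2 == 0:
        raise ValueError("block_size must be odd")
    half = block_size // 2
    height, width = len(left), len(left[0])
    disparity = [[0] * width for _ in range(height)]
    for y in range(half, height - half):
        # Start far enough right that the largest shift stays inside the image.
        for x in range(half + num_disparities - 1, width - half):
            best_d, best_cost = 0, float("inf")
            for d in range(num_disparities):
                cost = sad(left, right, y, x, d, half)
                if cost < best_cost:  # ties keep the smaller disparity
                    best_d, best_cost = d, cost
            disparity[y][x] = best_d
    return disparity


def make_shifted_pair(width, height, true_disparity, seed=0):
    """Synthetic test pair: the left image is the randomly textured right
    image shifted right by true_disparity pixels."""
    rng = random.Random(seed)
    right = [[rng.randrange(256) for _ in range(width)] for _ in range(height)]
    left = [[right[y][x - true_disparity] if x >= true_disparity else 0
             for x in range(width)] for y in range(height)]
    return left, right


if __name__ == "__main__":
    left, right = make_shifted_pair(width=24, height=10, true_disparity=3)
    disp = stereo_block_match(left, right, num_disparities=8, block_size=5)
    print(disp[5][12])
```

Every output pixel is computed independently from the same inputs, which is why this kind of workload suits a GPU: in OpenCL, one work-item per output pixel is one natural mapping, though the article does not say how MulticoreWare partitioned the work.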

MulticoreWare optimized the StereoBM algorithm to use the Imagination GPU cores more efficiently. Using IMG BXS-4-64 GPU intrinsics (with modeled camera data) and adaptive memory handling, it achieved optimal performance on higher-resolution camera data.
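To give a sense of why resolution matters so much, the arithmetic below counts the work a naive block matcher does on one 3200×2000 frame. The disparity range (128) and the 15×15 window are hypothetical values, not figures from MulticoreWare, and the running-sum variant assumes roughly four add/subtract operations per pixel per disparity, a common incremental-window technique rather than a description of their optimization.

```python
# Hypothetical StereoBM parameters, not the ones MulticoreWare used.
WIDTH, HEIGHT = 3200, 2000   # resolution quoted in the announcement
NUM_DISPARITIES = 128        # assumption: disparity search range
BLOCK_SIZE = 15              # assumption: 15x15 matching window


def naive_sad_ops(width, height, num_disp, block):
    """Absolute differences summed when every window is recomputed from scratch."""
    return width * height * num_disp * block * block


def running_sum_ops(width, height, num_disp, ops_per_update=4):
    """Approximate cost when window sums are updated incrementally
    (running row/column sums), so window size drops out of the count.
    ops_per_update=4 is an assumed constant, not a measured figure."""
    return width * height * num_disp * ops_per_update


if __name__ == "__main__":
    naive = naive_sad_ops(WIDTH, HEIGHT, NUM_DISPARITIES, BLOCK_SIZE)
    fast = running_sum_ops(WIDTH, HEIGHT, NUM_DISPARITIES)
    print(f"naive SAD:    {naive:.3e} ops per frame")
    print(f"running sums: {fast:.3e} ops per frame")
```

Either way the cost grows with pixels × disparities, so moving from a typical camera resolution to 6.4 million pixels per frame multiplies the work accordingly; the incremental form only removes the window-size factor.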

Chapman continued: “MulticoreWare realized we can run a lot of this from the GPU now. These GPUs are very capable, but I’m not sure the wider market thinks in those terms yet. They see that a GPU is needed for the surround view. The CPU is needed for this. The DSP is needed for that. And people get into that way of thinking about it. What this effort shows is we took something that was absolutely hammering the chip, loading up all the compute resources, and we got it to run with huge speedups from 20 to maybe even 100 times faster. The CPU workload was down at about 10%. Suddenly, you went from a device that was freaking out and almost falling over to hang on a minute, this device I’ve got in my system is now highly capable—orders of magnitude more.”